Volume 12, No. 1, Art. 17 – January 2011

Tales From the Bleeding Edge: The Qualitative Analysis of Complex Video Data Using Transana

David K. Woods & Paul G. Dempster

Abstract: This paper explores the analysis of complex multimedia data, illustrating recent advances in the kinds of data that can be analyzed using qualitative analysis software. The data examined consists of multiple simultaneous video streams with different video and audio content. The researchers found that multiple simultaneous transcripts can be useful in making sense of the very fast-moving content of these complex media files. Although the data capture and analysis techniques described give the researcher access to a huge amount of simultaneous data, the authors suggest that researchers working in the analytic environment described are able to function well in managing, understanding, and analyzing very complex data that captures very complex real-world occurrences.

Key words: qualitative analysis; CAQDAS; Transana; video data

Table of Contents

1. Introduction

2. Literature Review

2.1 Responses to the complexity of research

2.2 Visualizing the bleeding edge

2.3 Researching video games

3. Data and Analysis

3.1 Data collection and capture

3.2 Analysis in Transana

3.2.1 Multiple researchers

3.2.2 Multiple media files

3.2.3 Multiple transcripts

3.2.4 Clips and coding

3.3 A sophisticated analytic environment

4. Conclusions

1. Introduction

The primary emphasis of the KWALON 2010 conference was the question "Is Qualitative Analysis Software really comparable?" As part of the conference, teams associated with five different software packages examined a common data set, and each completed a different and distinct analysis.1) All of the teams demonstrated that much of the data set could be explored with their software, although no team included all of the data in its conference presentation. The conference organizers, of course, took pains to assemble a data set that all of the participating software packages could analyze, even though different packages support different types of data (see Ann LEWINS & Christina SILVER, 2007). [1]

The authors felt that the conference data analysis addressed only part of the main conference question, and that another important question lurks behind the software comparison experiment, one that the conference data set partially obscures: "Are there types of data," one might ask, "that can be analyzed better or more easily by some software packages than by others?" This question looks for differences between software packages rather than for commonalities. To really compare software packages, it helps to look both at how they are the same and at how they differ. [2]

Transana is a qualitative analysis software package designed specifically for the analysis of video and audio data, and its development emphasizes support for data that other software packages cannot analyze easily. Transana's developers strive to address new analytic challenges at the "bleeding edge" of research and technology, working with researchers to find new ways of handling data that make possible analyses other software packages cannot accomplish. [3]

This paper explores the analysis of a data set using several of the latest developments in Transana. The data set is small but complex and multi-layered, with multiple overlapping video and audio threads, and its analysis illustrates a new level of complexity in the data researchers can capture and analyze successfully. [4]

2. Literature Review

This brief literature review considers the complexity of qualitative research and how researchers have sought to use technology to aid them in their work. It considers visual research and highlights the need for more work in the area where research, video, and software meet. Having defined what is meant by "bleeding edge," we then explore the continually developing world of video game research. [5]

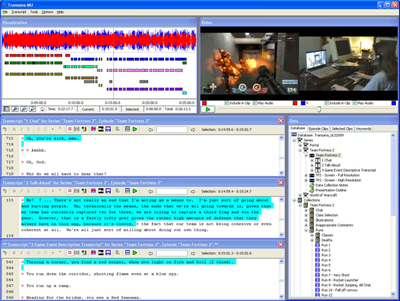

2.1 Responses to the complexity of research

By its very nature, explaining the social world qualitatively tends to be complicated (ALASUUTARI, 2004; BERGER & LUCKMANN, 1966). John CRESWELL (2009, p.4) argues that "those who engage in this form of inquiry support a way of looking at research that honours an inductive style, a focus on individual meaning, and the importance of rendering the complexity of a situation." While analyzing text data can be complicated, the analysis of visual data adds a further level of complexity to qualitative research. [6]

Qualitative researchers' engagement with complexity (CRESWELL, 2007; PESHKIN, 1988) and with the idiosyncrasies often inherent in their data can result in very large data sets (DI GREGORIO & DAVIDSON, 2008) that become unwieldy. Papers and how-to textbooks discuss variations on the theme of "drowning in data" (MORSE & RICHARDS, 2002; RICHARDS, 1998, 2005; RICHARDS & RICHARDS, 1994). [7]

In response to the need to manage large amounts of information, a range of software products has been developed (see FIELDING & LEE, 1998, 1991; KELLE, 2007, 2004, 1995; WEITZMAN & MILES, 1995; WEITZMAN, 1999). Software, however, should not be seen as a panacea:

"[a]lthough the technology facilitates the clerical handling of voluminous textual material, this does not necessarily translate into a quicker or easier analysis. Instead, there's a real danger of being overwhelmed by the sheer volume of information that becomes available when using computer technology" (KELLE & LAURIE, 1995, p.24). [8]

Clearly, technology brings its own set of challenges. See, for example, Raymond LEE's excellent work on the history of the interview, which highlights changes that have permeated research as a result of the adoption of technology (LEE, 2004). [9]

2.2 Visualizing the bleeding edge

Visual studies have seen a renaissance and continued development (BANKS, 2007; BEILIN, 2005; EMMISON & SMITH, 2000; PINK, 2007; PROSSER, 1998; WAGNER, 2002), with journals such as Visual Studies and the Journal of Visual Culture providing a range of scholarly debate. There is also wider acceptance of video as part of mainstream culture; see, for example, http://www.youtube.com/. For video in research terms, see Martin BAUER and George GASKELL (2000), Howard BECKER (1986) and Norman DENZIN (2004). There is, however, a paucity of literature in a number of areas, such as the links between research, video, and software, and new methodologies that incorporate technical developments. Examples of researchers engaging with the bleeding edge of technology are difficult to find; see Erica HALVERSON, Michelle BASS and David WOODS (Under review) for one example. [10]

The term "bleeding edge" refers to the very latest innovations, those that are often brand new or still under development. It contrasts with the technologically safer term "leading edge" (PENTLAND, 1997), which refers to technology that, while new, has been better tested and may be more widely adopted. The term takes its form from the metaphors and language associated with biological imagery (ROZIN, HAIDT & McCAULEY, 1993). Indeed, according to Gabriel IGNATOW and John JOST (2000, p.4), the computer industry has a propensity for using, inventing, or borrowing heavily from the biological world, possibly as a way of compensating for the sterile environments in which its members work: "Biological metaphors can be understood as providing the kind of socio-linguistic environmental compensation, queuing mental images and attendant emotions that serve to partially recreate a more natural, lively, and complex stimulus environment." (For a more detailed account of their methodology see Gabriel IGNATOW, 2003.) Bleeding edge technology is considered to be more technically complex and state of the art, but possibly less proven, than leading edge or cutting edge technologies (PENTLAND, 1997).2) [11]

2.3 Researching video games

The data set described in this paper is video of a Massive Multi-player Online Game, or MMOG. James GEE (2005, p.1) defines video games as "the sorts of commercial games people play on computers and game platforms." Video and computer games have adapted and changed as technology has developed (AOYAMA & IZUSHI, 2003; GAUME, 2006). Their growing influence (DORMAN, 1997) is well established, with around 67% of homes in America owning either a console or a PC to run entertainment software (ESA, 2010). There is also a growing community of players who interact online, something that WEIBEL, WISSMATH, HABEGGER, STEINER and GRONER (2008) argue was non-existent a decade earlier, making this a growing area of research (STEINKUEHLER, 2006; TAYLOR, 2006; YEE, 2006). Games have also changed as technology has allowed greater user control within them, moving from largely passive play to much higher levels of interaction (LOGUIDICE & BARTON, 2009; YEE, 2006). Thus, "games allow players to be producers and not just consumers" (GEE, 2003, p.2). [12]

The simulated world of games can be studied in a variety of ways. The technical sophistication of games means that they are much more than objects to be studied textually or simple collections of rules and interactions (SQUIRE, 2006). Educationalists have been tapping into the potential of games for some time, with great success (HALVERSON, SHAFFER, SQUIRE & STEINKUEHLER, 2006); see also work on prosocial behaviors by Tobias GREITEMEYER and Silvia OSSWALD (2010) and on the use of games to improve surgical skills by Jeremy LYNCH, Paul AUGHWANE and Toby HAMMOND (2010). Alongside the positives, however, there is a considerable literature that focuses on violence in gaming (including ANDERSON et al., 2010; BENSLEY & VAN EENWYK, 2001; DILL & DILL, 1998; FERGUSON & RUEDA, 2009; GENTILE, LYNCH, LINDER & WALSH, 2004; GRIFFITHS, 1999; MARKEY & MARKEY, 2010). These findings vary and are not always conclusive (DORMAN, 1997). [13]

What seems apparent from the literature is that games are growing in complexity. Researchers therefore have to apply increasingly sophisticated analysis if they wish to fully uncover and understand what is occurring during modern game play. This makes video game research an ideal choice for exploring the collection and analysis of complex data. [14]

At the University of Wisconsin, Kurt SQUIRE, Constance STEINKUEHLER, and their research group are doing very interesting work on the educational value of video games (GEE, 2003, 2004, 2005, 2008; HALVERSON et al., 2006; SHAFFER, SQUIRE, HALVERSON & GEE, 2005). This area is growing rapidly, with a yearly conference showcasing work in the field (http://www.glsconference.org/). [15]

For the conference above, Dr. WOODS collected data on a single subject playing a video game called Team Fortress 2, a massive multi-player online game in the first-person shooter genre in which the stated object of the game is to "capture the flag." Approximately 25 minutes of game play data was captured. The purpose of this data collection was to demonstrate what was analytically possible: to capture multiple streams of information simultaneously in a meaningful way that could be "worked on" rigorously within a qualitative data analysis software package. [16]

It is important to emphasize that we are looking at this data on two levels: the data itself, and the methodological and analytic process being applied to that data. It is this second level that interests us most in this paper. The researchers were interested in studying complexity within the methodological and analytic processes, and, as such, the substantive findings from the data were in this sense entirely secondary. However, to understand the processes being explored and to get at the issues of subtlety and complexity, we must start with a description of the data that was collected and analyzed. [17]

3.1 Data collection and capture

Data collection consisted of capturing two interdependent video streams. First, a video camera captured an over-the-shoulder shot of the observed subject and his computer. The visual portion of this stream captured the player, his computer equipment, and his interaction with that equipment during game play, including gestures such as pointing to the screen. As shown in Figure 1, the computer screen is visible in the camera shot, but little detail on it can be seen, both because the screen occupies a small part of the video frame and because the contrast between the screen and its surroundings left the screen washed out and hard to see.

Figure 1: Video capture of the subject playing the game [18]

The audio track for this video consisted of all sounds made by the observed player: both in-game chat directed at other players (through his headset microphone) and talk-aloud commentary directed at the researcher. The subject and the researcher interacted during some of the game play, with the researcher asking occasional questions about the game context and the play decisions the player was making. The camera captured no audio from the game itself and no chat from other players in the game's chat channel, because the observed player was wearing headphones rather than having game sound play through his computer speakers. [19]

A second video stream, consisting of a screen-capture recording of the game play on the observed player's computer, was created using a computer program called FRAPS3). As Figure 2 shows, this program captured a high-resolution recording of the player's screen, along with all audio that the computer was sending to the player's headphones. Thus, the audio included in-game environment sounds as well as the game chat contributions of all players except the observed player. Note that FRAPS did not capture the observed player's microphone input as part of its audio recording. Had we not also collected the camera video stream, there would have been a critical gap in the audio data: the observed player's contributions to the online chat would not have been captured at all. This is a common technical difficulty in capturing computer-based audio communication.

Figure 2: Video screen capture of game play [20]

Thus, the data set being examined consisted of two simultaneously-recorded, overlapping video files, each of which captured a different set of details about the game play being studied. One video file shows the player and his computer, while the other shows just the computer display in much greater detail. The two video files have totally separate audio tracks, with one file including all sounds made by the observed player and the researcher, and the other file including all sounds produced by the game and all in-game chat contributions made by all players except the observed player. The game environment was too complex for either method to capture all the important data; it is only through examining both media streams that we get a full picture of this player's in-game experience. [21]

For analytic purposes, two copies of the screen capture video stream were created using video editing and encoding software. One copy was a very high resolution version, in which all screen text was clearly legible and all game details were clearly visible. Technically speaking, this copy had a much higher resolution than typical digital video, even HD digital video, because computer screens often operate at higher resolutions than HD televisions do. Video at such high resolution can consequently place heavy demands on computer hardware. A second, lower-resolution copy of the screen capture media file was also produced, and this version was used for most of the analysis. Details such as in-game text were not legible in this lower-resolution copy, though overall game play was still clearly discernible. The video stream from the camera was rendered only in this lower-quality format, as the camera captured images at a lower resolution than the screen capture software did, and nothing in this media stream required a high-resolution copy to decode. Experience suggested that the lower-resolution versions would be sufficient for most of the multiple video analytic tasks we intended to pursue. [22]

3.2 Analysis in Transana

3.2.1 Multiple researchers

This data was analyzed using Transana's multi-user version4), which allows multiple researchers to work on the same data at the same time. As each researcher works on the data, the changes that person makes are shared with all others currently logged on, so that each researcher sees the changes others make as they are made (for more details on how we worked with multiple researchers in another project, see Paul DEMPSTER & David WOODS, 2011, this issue). [23]

With the present data set, the video and audio data was collected by a single researcher and was then shared with other members of the research team. The researcher who collected the data also took responsibility for doing initial transcription of the data. However, this multi-user model works well even when data is collected at multiple sites and when transcription and analytic duties are divided up amongst more members of the research team. [24]

3.2.2 Multiple media files

Since the release of version 2.40, Transana has had the capacity to handle multiple simultaneous media files. Both media streams were loaded into Transana 2.41 so that they could be synchronized and displayed simultaneously, side by side. This can be seen in the upper right section of Figure 3.

Figure 3: Complex data in Transana 2.41 [25]
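In the simplest case, synchronizing two recordings of the same session amounts to finding a constant time offset between them. The Python sketch below illustrates only that idea; it assumes both files play at the same rate with no dropped frames, and the function names and example times are hypothetical rather than taken from Transana or from this data set.

```python
from typing import Optional


def estimate_offset(t_reference: float, t_other: float) -> float:
    """Offset (seconds) that maps reference-file times onto the other file.

    Both arguments are the times at which one identifiable moment appears
    in each recording (a hypothetical synchronization point).
    """
    return t_other - t_reference


def to_other_file(t_reference: float, offset: float,
                  other_duration: float) -> Optional[float]:
    """Map a reference-file time onto the other file's time line.

    Returns None when the other recording does not cover that moment
    (for example, because it started later or ended earlier).
    """
    t = t_reference + offset
    return t if 0.0 <= t <= other_duration else None


# Hypothetical example: a moment at 12.0 s in the camera video appears
# at 4.5 s in the screen capture, so the screen capture started 7.5 s later.
offset = estimate_offset(12.0, 4.5)
print(offset)                               # -7.5
print(to_other_file(60.0, offset, 1500.0))  # 52.5
print(to_other_file(3.0, offset, 1500.0))   # None
```

A single constant offset is the simplest model; recordings whose clocks drift apart over a long session would also need a rate correction.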

3.2.3 Multiple transcripts

Many researchers find it helpful during the analysis of video to have written transcripts that correspond to their video files. It is much easier for researchers to find segments of video they want to view by searching a transcript for a remembered word or phrase than to seek a segment of video by scanning through the images available from the video file. The more complex the data being represented and the more subtle the analysis being conducted, the more useful written transcripts can be (GEE, 1999). This practical truth has been central to Transana's analytic design from the beginning. Thus, the next step in analyzing this data set was to develop a transcript representation of the data captured in our two video streams. [26]

As mentioned above, the two video files for this data set had largely independent audio tracks that sometimes converged and synchronized, as when the observed player interacted with other players using the in-game chat utility, and sometimes diverged, as when the observed player did talk-aloud for the camera and ignored the in-game chat going on in the background. [27]

To handle this, the researchers created a separate verbatim transcript for each media file. One transcript captured the in-game chat of all players except the observed player, and the other captured the observed player's chat contributions, talk-aloud segments, and discussion with the researcher during game play. In Transana, transcripts can be linked to media files so that a highlight in the transcript moves as the video plays, showing the interplay between transcripts and media files. Text flows more smoothly in multiple separate transcripts than in a multi-column display within a single transcript, and separate transcripts have proven much more flexible than a single complex transcript for a variety of subtle analytic acts during the coding and analytic process.5) [28]
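A transcript highlight that follows playback can be illustrated as a lookup over time-coded segments. This is a minimal Python sketch, assuming each transcript is a list of (start time, text) segments sorted by time; the data structure, the function `active_segment`, and the sample lines are hypothetical and do not reflect Transana's file format or the actual transcripts. Because each transcript is queried on its own, two transcripts with unrelated segment boundaries can both be highlighted correctly at the same playback moment.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

# A transcript is a list of (start_time_in_seconds, text) segments sorted by time.
Transcript = List[Tuple[float, str]]


def active_segment(transcript: Transcript, t: float) -> Optional[str]:
    """Return the segment that should be highlighted at playback time t."""
    starts = [start for start, _ in transcript]
    i = bisect_right(starts, t) - 1  # index of the last segment starting at or before t
    return transcript[i][1] if i >= 0 else None


# Two hypothetical transcripts with unrelated segment boundaries.
camera_transcript = [
    (0.0, "talk-aloud: explains character choice"),
    (14.2, "in-game chat: warns a teammate"),
    (31.0, "answers a question from the researcher"),
]
chat_transcript = [
    (0.0, "player A: greeting"),
    (22.5, "player B: describes a recent death"),
]

for t in (5.0, 25.0):
    print(t, active_segment(camera_transcript, t), "|",
          active_segment(chat_transcript, t))
```

Rebuilding the list of start times on every call keeps the sketch short; a player updating the highlight many times per second would compute it once per transcript.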

It would have been possible to create transcripts based on function rather than on audio source: an in-game chat transcript drawing on both audio sources, and a talk-aloud transcript that reflected only the camera's audio and excluded those parts where the observed player participated in in-game chat. Both approaches are analytically valid. Different researchers have different preferences, and different research questions may benefit from different approaches, which suggests the need for the software to be maximally flexible. The researcher has full control over how transcripts are laid out in Transana and which transcripts are displayed at any given time. In this instance, Dr. WOODS followed his personal preference of keeping each transcript linked to its source media file rather than organizing transcripts by function. [29]

The combination of the two media files and the two transcripts, however, was not sufficient for this research team's analysis of this data. Even when viewing the two synchronized video files alongside the two verbatim transcripts, the researchers felt that something essential was still missing; it was still too difficult to make sense of what they were seeing. [30]

It should be noted that the pace of play in the particular game being observed was extremely fast, and that neither of the authors had ever played this game. Over the course of 25 minutes, the observed player went through 34 character life cycles, each made up of character generation, in-game character action, and character death. (We later determined that the observed player's average character life lasted just over 32 seconds; at the extremes, only one life lasted longer than 65 seconds and one lasted less than 10 seconds.) The action of the game appeared mind-bogglingly fast to the researchers as outside observers (even when slowed down in Transana), though the observed player demonstrated a nuanced, multi-layered understanding of what was happening at all times, not just with his own character but also with the overall progress of the two competing teams. The researchers hypothesized that the issue was one of experience with the game: they needed to learn to read and interpret the complex game screen the way the player did. The research team, in other words, lacked the analytic lens the player possessed for understanding the game as it unfolded. [31]

In an attempt to provide such an analytic lens for the research team, Dr. WOODS loaded the high-resolution copy of the screen capture video into Transana as a stand-alone media file and created what he called the "Game Event transcript." This descriptive transcript detailed exactly what was happening on screen. Dr. WOODS identified the individual video frame at which each transition in the game play occurred, including such events as player character creation and death, character movement from one part of the game environment to another, and various types of player encounters. Between these transition frames, he wrote brief summaries of what was occurring during that game segment. This highly detailed and precise transcript was tedious to create, but it proved crucial in making the flow of overall game play accessible to the team for analysis. It also allowed the research team to measure the duration of game events to within 1/30th of a second when such precision was desired. Once complete, the Game Event transcript was added to the multiple media file display as a third transcript, providing the essential context the researchers needed to make sense of the complex data being displayed. This transcript can be seen in the lower left-hand portion of Figure 3 above. [32]
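Because each transition in the Game Event transcript is tied to a specific video frame, event durations follow directly from frame numbers. The Python sketch below assumes a constant rate of 30 frames per second (consistent with the 1/30th-of-a-second precision described above); the frame numbers are hypothetical, not values from the actual transcript, and the helper names are illustrative only.

```python
from statistics import mean
from typing import List, Tuple

FPS = 30  # assumed constant frame rate: one frame = 1/30th of a second


def frames_to_seconds(frames: int, fps: int = FPS) -> float:
    """Convert a frame count into seconds."""
    return frames / fps


def life_durations(lives: List[Tuple[int, int]], fps: int = FPS) -> List[float]:
    """Durations in seconds for (creation_frame, death_frame) pairs."""
    durations = []
    for created, died in lives:
        if died < created:
            raise ValueError(f"death frame {died} precedes creation frame {created}")
        durations.append(frames_to_seconds(died - created, fps))
    return durations


# Hypothetical transition frames for three character lives.
lives = [(0, 900), (1200, 1650), (2000, 3350)]
d = life_durations(lives)
print(d)                         # [30.0, 15.0, 45.0]
print(mean(d), min(d), max(d))   # 30.0 15.0 45.0
```

Summary figures such as an average character life, its minimum, and its maximum can be derived this way once creation and death frames have been recorded for every life.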

It is interesting to note that the time scales and meaningful segment transitions in the three transcripts produced in this analysis are almost entirely independent of one another. It makes sense that the in-game chat is paced independently of the observed participant's game play, as each player participating in the chat session is playing his or her role in the game largely independently of other players. Participants in the chat came from both teams in this game, and neither team was using this chat channel to coordinate strategy or game play in any significant or sustained way. The game pace was so fast for the observed player that it is reasonable to assume that each player was acting almost entirely independently of all other players, and was aware of the in-game status of only a small number of other players at any given time. Furthermore, little of the chat was actually game-related, apart from the occasional description of something that had just occurred, such as a player describing the nature of her or his recent death. [33]

Because the three transcripts look at different scopes within the data, with different actors and independent time lines, it would be essentially impossible to capture all of this information coherently in a single transcript. The scopes and meaningful time scales are simply too different. Transana's approach of allowing multiple independent transcripts to be displayed simultaneously makes it easy to look at these three analytic layers both independently and in combination with each other. [34]

It is also interesting to note that the observed player was able to interact with a very complex virtual environment and talk casually with the researcher simultaneously and with little apparent difficulty. Game events rarely altered the flow or content of his talk-aloud, and his interactions with the researcher did not produce any perceptible effect on his game performance. [35]

Thus, the observed player's game play, the in-game chat amongst all players, and the observed player's interaction with the researcher all had essentially independent time lines. The only time there was any convergence was when the observed player participated in the chat. [36]

3.2.4 Clips and coding

The research team made multiple passes through the data to analyze it on several different levels. Each pass was aimed at gaining an understanding of a different aspect of the information this data contained. In Transana, this sort of analytic activity is operationalized through the creation of clips, which are segments of the larger video file that are identified by the researcher as being analytically interesting, and through the application of coding to those clips. The clip is the basic unit of analysis in Transana, and all coding within Transana occurs at the clip level. [37]

The first analytic pass was a simple structural analysis of game play aimed at making the flow of the observed game play more explicit to the research team. A series of clips was created, each starting at one of the observed player's character creations and ending at that character's death; that is, each clip represented one full player character life. These clips were coded for which team the player was on, what type of character he was playing, and what weaponry he used during that character life. This simple coding scheme reveals the overall structure of how this player played this game, and is shown in the upper left section of Figure 3 above. Each horizontal bar represents a code (keyword) that has been applied to a clip (a selected segment from the larger media file set) across the time line of the recorded video. [38]
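The bar layout described above can be modeled as a simple regrouping: each clip carries a time span and a set of keywords, and each keyword becomes one row of bars. This is a minimal Python sketch, assuming a hypothetical `Clip` record and made-up keyword labels; it is not how Transana stores or draws clips.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Clip:
    """A hypothetical coded clip: a time span plus the keywords applied to it."""
    start: float  # seconds from the start of the media file
    end: float
    keywords: Set[str] = field(default_factory=set)


def keyword_rows(clips: List[Clip]) -> Dict[str, List[Tuple[float, float]]]:
    """Group clip spans by keyword: one row of (start, end) bars per code."""
    rows: Dict[str, List[Tuple[float, float]]] = defaultdict(list)
    for clip in clips:
        for keyword in clip.keywords:
            rows[keyword].append((clip.start, clip.end))
    # Sort rows alphabetically and bars chronologically for display.
    return {kw: sorted(spans) for kw, spans in sorted(rows.items())}


# Hypothetical clips, one per character life, coded for team and character type.
clips = [
    Clip(0.0, 40.0, {"Team: Red", "Character: Soldier"}),
    Clip(45.0, 70.0, {"Team: Red", "Character: Soldier"}),
    Clip(75.0, 120.0, {"Team: Blue", "Character: Heavy"}),
]

for keyword, bars in keyword_rows(clips).items():
    print(f"{keyword:20} {bars}")
```

Gaps between bars in a row correspond to stretches of the recording, such as the time between a character's death and the next character's creation, that no clip with that keyword covers.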

This particular layout of the coding shows that the player started out on the red team but switched to the blue team about one third of the way through the observed play. It shows that the observed player chose to play one type of character, the Soldier, for about the first 8 minutes, switched to a second type, the Heavy, for about 5 minutes, and then switched to a third type, the Pyro, until nearly the end of the game. For the most part, the observed player changed weapons at the same time as character type, though he also added a second weapon while playing the Heavy, the character type he played for the least amount of time. We see that player character lives tend to be quite short, and can clearly see the pattern of 37 lives across 24 minutes of play. There was a mix of longer lives (in the 45 second to 85 second range) and shorter lives (of 30 seconds or less) that does not immediately appear to be related to the player's choices of character type or weapon. (Transana includes a utility for exporting data for mixed methods analysis, so we could have followed up those impressions with formal quantitative analysis had such analysis been considered important here.) This first analysis is not intended to be particularly deep; it simply gives the researchers a sense of the structure and flow of the game, addressing the analytic issue described above that led to the creation of the Game Event descriptive transcript in the first place. [39]
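As an illustration of the kind of quantitative follow-up that exported data would allow, the Python sketch below groups life durations by character type and reports the mean for each group. The records are hypothetical, and the code does not read Transana's actual export format; it assumes the durations have already been extracted as (label, seconds) pairs.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def mean_duration_by_group(records: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Mean life duration (seconds) for each group label, e.g. character type."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for label, seconds in records:
        groups[label].append(seconds)
    return {label: mean(values) for label, values in sorted(groups.items())}


# Hypothetical (character type, life duration in seconds) records.
records = [
    ("Soldier", 30.0), ("Soldier", 50.0),
    ("Heavy", 20.0),
    ("Pyro", 60.0), ("Pyro", 24.0),
]
print(mean_duration_by_group(records))
# {'Heavy': 20.0, 'Pyro': 42.0, 'Soldier': 40.0}
```

With only a few dozen lives in a single session, a comparison like this would be descriptive rather than a basis for firm conclusions.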

It should be noted that this portion of the analysis could have been conducted adequately using only the screen capture media file and the Game Event description transcript; it did not require the full complexity and integration of the Transana environment described so far. However, the researchers judged that the coding from this initial pass would prove useful when mixed or compared with later analyses, or would at least provide some anchoring for understanding where particular details and game incidents fit into the bigger picture of overall game play. This analytic pass was therefore conducted within the integrated environment made up of both media files and all three transcripts, so that its results were available to the researchers, if desired, as they explored other aspects of the data. [40]

In a second analytic pass, one of the researchers focused on the language used in the online chat, particularly chat that was not related to game play. A new collection was created to hold the clips for this second pass, and chat-specific clips were created and coded. For this analytic pass, data from both media streams and multiple transcripts were needed simultaneously so that the entire chat record could be analyzed. Because the observed player's contributions to the chat were stored in a different media file than the contributions of all the other players, analyzing the chat as a whole would have been significantly more difficult without the synchronization and integration of the two media files provided in Transana. [41]

Once the chat was coded, the researcher used Transana's Keyword Map (Figure 4), which displays coding across the time line of a media file, to explore relationships between the different codes that had been applied. The Keyword Map provides tools that let the researcher manipulate how coding is displayed, supporting visual exploration of how different codes relate to one another across time. [42]

As the top row of each segment of Figure 4 shows, a significant portion of the in-game chat during the first third of the recorded gaming session was devoted to a small number of players saying inappropriate things in response to being informed that we wanted to record the game session. During debriefing, the observed player stated that, while he felt his own game play was unaffected by being observed, the in-game chat during the observation period was not typical of the chat that usually goes on during this game. He conjectured that the other players' atypical chat behavior was a response to the session being recorded, but also asserted that game play itself was not noticeably affected. This inappropriate chat primarily took the form of a small number of players trying to come up with ever more disgusting and objectionable topics to discuss.

Figure 4: Transana 2.41's keyword map of in-game chat [43]

The response by the rest of the group to this behavior included variations of "shut up," shown in the second line, more complex responses, shown in the third line, and ignoring the behavior. The "shut up" response appeared to be largely ineffective, and was abandoned during the first quarter or so of the session. Ignoring the offensive behavior (as indicated by the absence of a coded response) did not appear to be entirely effective either. [44]

It was interesting to explore the content of the clips coded in the third line of Figure 4 in further detail. In Transana's Keyword Map, the researcher can load the video clip underlying a particular bar simply by clicking on that bar. After reviewing the clips coded as "That's Inappropriate," the research team concluded that, over time, the content of these third-line responses shifted distinctly towards being more direct and more insulting to the individuals participating in the inappropriate chat. This direct approach ultimately appears to have contributed to the reduction of the inappropriate talk, although it is also possible that the behavior simply died out on its own. [45]

3.3 A sophisticated analytic environment

The analytic pass described above was one component of a fuller analysis made up of several similar analytic passes looking at various aspects of game play, in-game chat, and observed player commentary. As mentioned before, however, we do not want this paper to get lost in the details of the specific analysis of this specific data. The detailed description above was meant to serve as an example of the analytic approach taken to this data rather than as an exhaustive account of the results obtained from this data set, or even from this analytic pass. We wish to emphasize that, by this stage in the analytic process, the research team had a very powerful analytic environment in place within which they could pursue a detailed analysis of their complex data set. [46]

Multiple researchers can connect to the data set and work on the analysis at the same time, from any location with internet access. When one member of the research team makes a change to the data, that change appears immediately on the screens of all the other researchers. [47]

Researchers can view both of the overlapping media files simultaneously (Transana supports up to four simultaneous media files). While exploring and analyzing the data, the researchers have full control over which audio tracks are played back at any given time (i.e., individual tracks can be enabled or disabled) and over which video and audio tracks are included in each clip they create and code. [48]

Researchers can also look at multiple layers of analysis, embedded in different transcripts, at the same time. In the analysis presented here, each transcript captures a different aspect of the observed game play: each has a different focus and function, and each embodies a different analytic view of the data. Transana can display up to five transcripts simultaneously, and there is no limit on the number of transcripts that can be created for each media file set. [49]

Finally, as data is coded, that analytic information can be displayed on the main screen (see Figure 3) and brought to bear on further analysis. In the data described here, the visual representation of the flow of game play shown in Transana's Visualization Window proved crucial in helping the researchers gain access to data that was initially difficult to deconstruct effectively. [50]

This environment gives the researcher a tremendous amount of freedom and power in exploring data. The Transana environment, as shown in Figure 3 above, presents multiple views on the recorded events through multiple media files, and it allows the researcher to overlay multiple analytic perspectives on these media files through multiple transcripts and coding visualizations. These perspectives can be shared and refined by all members of the research team, creating opportunities for the confirmation, discussion, and expansion of the emerging understanding of the data that such collaboration facilitates. [51]

As a result, researchers can select different combinations of media files, transcripts, and codes to create clips that fit specific analytic purposes very precisely. Clips can be narrowly focused by selecting one media file and one transcript. They can be broader, incorporating multiple media files, multiple transcripts, or both. They can also focus on particular interactions through the careful selection of some, but not all, of the available components. Transana thus gives the researcher considerable flexibility during clip creation. [52]

The authors believe that Transana is unique amongst qualitative analytic software in offering the combination of multiple simultaneous users, multiple simultaneous media files, and multiple simultaneous transcripts. In this paper, we have presented an example of a complex data set and its analysis that makes use of all of those features. Transana made this complex analysis considerably more straightforward. [53]

The ability of multiple researchers to access the data simultaneously is important because it allows the workload to be shared and enables researchers with different perspectives to contribute to the analysis. This issue is explored in more detail in Paul DEMPSTER and David WOODS (2011, this issue). Several qualitative analysis packages have started supporting this sort of collaborative analysis in recent years, and this trend is likely to continue. [54]

The ability to view multiple media files simultaneously and in synchronization was essential to this analysis. Because the local and remote components of the audio chat were captured in different media files, both media files were required to hear all aspects of the chat. In other research involving large focus groups captured with multiple cameras, Transana's multiple simultaneous media file feature has allowed researchers to evaluate the facial expressions and body language of both the speakers and the other participants captured in reaction shots. In such cases, simultaneous video display adds considerable analytic power compared with viewing the media files separately, and considerable convenience compared with creating split-screen or picture-in-picture video files. [55]

The ability to view multiple transcripts simultaneously also proved crucial in this analysis. Transcripts can go beyond being a written representation of verbal content; they can contain descriptive and analytic information about the media files they are linked with, and can serve as lenses through which one gains additional understanding of media file content. In this analysis, the game event descriptive transcript was crucial to the researchers' efforts to unpack the visuals of the recorded game play. One reason for this is that the game play was very rapid. In addition, some of the audio occurred on a different time scale from the visual elements of the game play, which meant that the recorded audio chat was largely disconnected from the play of the individual being recorded. The chat transcripts were disjointed from the game play in a way that made it harder to see what was occurring. Having a transcript that shares the time scale of the visuals and reflects the analytic layer related to game play made analysis of that layer much easier. Having multiple simultaneous transcripts also made it much easier to view the media files on multiple levels, since individual transcripts could be toggled on and off as required. [56]

Having all of these features available at once allowed the researchers to access this complex data in a very sophisticated way. Because of the richness of the analytic environment that Transana provides, the researchers could look at the data through different lenses and think about it in different ways. This combination of tools allows for a level of analytic efficiency and sophistication not previously attainable. That is what bleeding edge development is all about. [57]

Dr. WOODS would like to thank the Wisconsin Center for Education Research for their ongoing support for the continued development of Transana as we continue to seek the bleeding edge of the qualitative analysis of video and audio data.

1) See Paul DEMPSTER and David WOODS (2011, this issue) for a discussion of how the authors approached the KWALON data set using Transana, one of the software packages represented at the conference.

2) Please note that the technology described in this paper was bleeding edge when the data was collected and analyzed but, with the subsequent release of Transana 2.40, moved from bleeding edge to leading edge in the time it took to prepare for the conference and to write and publish this paper.

3) FRAPS is similar to Camtasia, CamStudio, TipCam, Wink, and a number of other screen capture programs. However, FRAPS works in the video modes that full-screen video games tend to use, while other programs tend not to work properly in those modes (see MACGILCHRIST & VAN HOUT, 2011, in this issue for a discussion of Camtasia).

4) Transana has had this capacity since 2002. Recently, several other qualitative analysis packages have started offering multiple simultaneous user support as well, including Cassandre, Framework, TAMS-Analyzer, and NVivo 9.

5) For more information about working with multiple simultaneous transcripts, see HALVERSON et al. (under review).

Alasuutari, Pertti (2004). Social theory and human reality. London: Sage.

Anderson, Craig A.; Shibuya, Akiko; Ihori, Nobuko; Swing, Edward L.; Bushman, Brad J.; Sakamoto, Akira, et al. (2010). Violent video game effects on aggression, empathy, and prosocial behavior in eastern and western countries: A meta-analytic review. Psychological Bulletin, 136(2), 151-173.

Aoyama, Yuko & Izushi, Hiro (2003). Hardware gimmick or cultural innovation? Technological, cultural, and social foundations of the Japanese video game industry. Research Policy, 32(3), 423-444.

Banks, Marcus (2007). Using visual data in qualitative research. London: Sage.

Bauer, Martin & Gaskell, George (Eds.) (2000). Qualitative researching with text, image and sound: A practical handbook. London: Sage.

Becker, Howard (1986). Doing things together: Selected papers. Evanston, Ill.: Northwestern University Press.

Beilin, Ruth (2005). Photo elicitation and the agricultural landscape: "Seeing" and "telling" about farming, community and "place". Visual Studies, 20(1), 56-68.

Bensley, Lillian & Van Eenwyk, Juliet (2001). Video games and real-life aggression: Review of the literature. Journal of Adolescent Health, 29(4), 244-257.

Berger, Peter & Luckmann, Thomas (1966). The social construction of reality: A treatise in the sociology of knowledge. New York: Anchor Books.

Creswell, John (2007). Qualitative inquiry and research design: Choosing among five approaches. London: Sage.

Creswell, John (2009). Research design: Qualitative, quantitative, and mixed methods approaches. London: Sage.

Dempster, Paul G. & Woods, David K. (2011). The Economic Crisis Through the Eyes of Transana. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(1), Art. 16, http://nbn-resolving.de/urn:nbn:de:0114-fqs1101169.

Denzin, Norman (2004). Reading film: Using photos and video as social science material. In Uwe Flick, Ernst von Kardorff & Ines Steinke (Eds.), A companion to qualitative research (pp.234-247). London: Sage.

di Gregorio, Silvana & Davidson, Judith (2008). Qualitative research design for software users. London: Sage.

Dill, Karen E. & Dill, Jody C. (1998). Video game violence: A review of the empirical literature. Aggression and Violent Behavior, 3(4), 407-428.

Dorman, Steve M. (1997). Video and computer games: Effect on children and implications for health education. Journal of School Health, 67(4), 133-138.

Emmison, Michael & Smith, Phillip (2000). Researching the visual: Images, objects, contexts and interactions in social and cultural inquiry. London: Sage.

ESA (2010). 2010 sales, demographic and usage data: Essential facts about the computer and video game industry. Entertainment Software Association.

Ferguson, Christopher J. & Rueda, Stephanie M. (2009). The Hitman study: Violent video game exposure effects on aggressive behavior, hostile feelings, and depression. European Psychologist, 15(2), 99-108.

Fielding, Nigel & Lee, Raymond (Eds.) (1991). Using computers in qualitative research. London: Sage.

Fielding, Nigel & Lee, Raymond (1998). Computer analysis and qualitative research. London: Sage.

Gaume, Nicolas (2006). Nicolas Gaume's views on the video games sector. European Management Journal, 24(4), 299-309.

Gee, James Paul (1999). An introduction to discourse analysis: Theory and method. London: Routledge.

Gee, James Paul (2003). What video games have to teach us about learning and literacy. Computers in Entertainment, 1(1), http://portal.acm.org/citation.cfm?id=950595&coll=DL&dl=ACM&retn=1#Fulltext.

Gee, James Paul (2004). Language learning and gaming: A critique of traditional schooling. London: Routledge.

Gee, James Paul (2005). Why video games are good for your soul: Pleasure and learning. Champaign: Common Ground Publishing.

Gee, James Paul (2008). Video games and embodiment. Games and Culture, 34(3), 253-263.

Gentile, Douglas A.; Lynch, Paul J.; Linder, Jennifer R. & Walsh, David A. (2004). The effects of violent video game habits on adolescent hostility, aggressive behaviors, and school performance. Journal of Adolescence, 27(1), 5-22.

Greitemeyer, Tobias & Osswald, Silvia (2010). Effects of prosocial video games on prosocial behavior. Journal of Personality and Social Psychology, 98(2), 211-221.

Griffiths, Mark (1999). Violent video games and aggression: A review of the literature. Aggression and Violent Behavior, 4(2), 203-212.

Halverson, Erica; Bass, Michelle & Woods, David (Under review). Analysing multimodal data in the context of use video production. Qualitative Inquiry.

Halverson, Richard; Shaffer, David; Squire, Kurt & Steinkuehler, Constance (2006). Theorizing games in/and education. Paper presented at the Proceedings of the 7th International Conference on Learning Sciences, Indiana University, Bloomington IN, U.S.A.

Ignatow, Gabriel (2003). "Idea hamsters" on the "bleeding edge": Profane metaphors in high technology jargon. Poetics, 31(1), 1-22.

Ignatow, Gabriel & Jost, John (2000). "Idea hamsters" on the "bleeding edge": Metaphors of life and death in Silicon Valley. Research paper series: Graduate School of Business Stanford University, 1628.

Kelle, Udo (Ed.) (1995). Computer-aided qualitative data analysis: Theory, methods and practice. London: Sage.

Kelle, Udo (2004). Computer-assisted analysis of qualitative data (B. Jenner, Trans.). In Uwe Flick, Ernst von Kardorff & Ines Steinke (Eds.), A companion to qualitative research (pp.276-284). London: Sage.

Kelle, Udo (2007). Computer assisted qualitative data analysis. In Clive Seale, Giampietro Gobo, Jaber Gubrium & David Silverman (Eds.), Qualitative research practice (pp.443-460). London: Sage.

Kelle, Udo & Laurie, Heather (1995). Computer use in qualitative research and issues of validity. In Udo Kelle (Ed.), Computer-aided qualitative data analysis: Theory, methods and practice (pp.19-28). London: Sage.

Lee, Raymond (2004). Recording technologies and the interview in sociology, 1920-2000. Sociology, 38(5), 869-889.

Lewins, Ann & Silver, Christina (2007). Using software in qualitative research: A step by step guide. London: Sage.

Loguidice, Bill & Barton, Matt (2009). SimCity (1989): Building blocks for fun and profit. In Bill Loguidice & Matt Barton, Vintage games (pp.207-224). Boston: Focal Press.

Lynch, Jeremy; Aughwane, Paul & Hammond, Toby M. (2010). Video games and surgical ability: A literature review. Journal of Surgical Education, 67(3), 184-189.

Macgilchrist, Felicitas & Van Hout, Tom (2011). Ethnographic Discourse Analysis and Social Science. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(1), Art. 18, http://nbn-resolving.de/urn:nbn:de:0114-fqs1101183.

Markey, Patrick M. & Markey, Charlotte N. (2010). Vulnerability to violent video games: A review and integration of personality research. Review of General Psychology, 14(2), 82-91.

Morse, Janice & Richards, Lyn (2002). Read me first for a user's guide to qualitative methods. London: Sage.

Pentland, Brian (1997). Bleeding edge epistemology: Practical problem solving in software support hotlines. In Stephen Barley & Julian Orr (Eds.), Between craft and science: Technical work in U.S. settings (pp.113-128). Ithaca, NY: Cornell University Press.

Peshkin, Alan (1988). Understanding complexity: A gift of qualitative inquiry. Anthropology & Education Quarterly, 19(4), 416-424.

Pink, Sarah (2007). Doing visual ethnography. London: Sage.

Prosser, John (Ed.) (1998). Image-based research: A sourcebook for qualitative researchers. London: Sage.

Richards, Lyn (1998). Closeness to data: The changing goals of qualitative data handling. Qualitative Health Research, 8(3), 319-328.

Richards, Lyn (2005). Handling qualitative data: A practical guide. London: Sage.

Richards, Lyn & Richards, Tom (1994). From filing cabinet to computer. In Alan Bryman & Robert Burgess (Eds.), Analysing qualitative data (pp.146-172). London: Routledge.

Rozin, Paul; Haidt, Jonathan & McCauley, Clark (1993). Disgust. In Michael Lewis & Jeanette Haviland-Jones (Eds.), Handbook of emotions (pp.637-654). New York: Guilford Press.

Shaffer, David; Squire, Kurt; Halverson, Richard & Gee, James Paul (2005). Video games and the future of learning. Phi Delta Kappan, 87(2), 104-111.

Squire, Kurt (2006). From content to context: Videogames as designed experience. Educational Researcher, 35(8), 19-29.

Steinkuehler, Constance A. (2006). Massively multiplayer online video gaming as participation in a discourse. Mind, Culture, and Activity, 13(1), 38-52.

Taylor, T.L. (2006). Play between worlds: Exploring online game culture. Cambridge MA: MIT Press.

Wagner, Jon (2002). Contrasting images, complementary trajectories: Sociology, visual sociology and visual research. Visual Studies, 17(2), 160-171.

Weibel, David; Wissmath, Bartholomäus; Habegger, Stephan; Steiner, Yves & Groner, Rudolf (2008). Playing online games against computer- vs. human-controlled opponents: Effects on presence, flow, and enjoyment. Computers in Human Behavior, 24(5), 2274-2291.

Weitzman, Eban A. (1999). Analyzing qualitative data with computer software. Health Services Research, 34(5), 1241-1263.

Weitzman, Eban & Miles, Matthew (1995). Computer programs for qualitative data analysis: A software source book. London: Sage.

Yee, Nick (2006). The demographics, motivations, and derived experiences of users of massively multi-user online graphical environments. Presence: Teleoperators and Virtual Environments, 15(3), 309-329.

David WOODS is a Researcher at the Wisconsin Center for Education Research at the University of Wisconsin, Madison. His primary interest is in facilitating the analytic work of researchers, particularly those with challenging data or innovative analytic approaches. He is the lead developer of Transana, software for the transcription and qualitative analysis of video and audio data.

Contact:

David K. Woods, Ph.D.

Wisconsin Center for Education Research

University of Wisconsin, Madison

1025 W. Johnson St., #345-A

Madison, WI 53706-1706

USA

Tel.: 001 608 262 1770

Fax: 001 608 265 9300

E-mail: dwoods@wcer.wisc.edu

URL: http://www.transana.org/

Paul G. DEMPSTER is a Research Fellow at the University of Leeds, UK. He is also an honorary Research Associate at the University of Wisconsin, Madison. He is a medical sociologist, IT specialist and thanatologist, currently researching governance and IT within the NHS. He has a longstanding research interest in qualitative research software, its development, and its application in new and distinctive ways.

Contact:

Paul G. Dempster, Ph.D.

Leeds Institute of Health Sciences

Room 2.02/11

Charles Thackrah Building

101 Clarendon Road

University of Leeds

LS17 6NP

UK

Tel.: 0044 (0)113 3430858

E-mail: p.dempster@leeds.ac.uk

URL: http://www.leeds.ac.uk/hsphr/hsc/biogs/pd.html

Woods, David K. & Dempster, Paul G. (2011). Tales From the Bleeding Edge: The Qualitative Analysis of Complex Video Data Using Transana [57 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(1), Art. 17, http://nbn-resolving.de/urn:nbn:de:0114-fqs1101172.