Volume 12, No. 3, Art. 21 – September 2011

Beyond Transcription: Technology, Change, and Refinement of Method

D. Thomas Markle, Richard E. West & Peter J. Rich

Abstract: Qualitative researchers have continually evolved their methods, often in response to technological breakthroughs that enabled them to collect, analyze, or present data in novel ways, or to reflect participant perspectives more authentically. Examining historical situations that led to methodological shifts, we assert that the qualitative research community is currently on the precipice of another such change, specifically in the transcription of audio and visual data. We advocate using emerging technologies to collect, analyze, and embed actual multimedia data in research reports, thus avoiding the loss of meaning and the unavoidable interpretive bias inherent in transcription. Working with data in their original multimedia (audio or video) form, rather than with a transcript, can allow for greater trustworthiness and accuracy, as well as thicker descriptions and more informative reporting. We discuss the challenges that remain with this approach and offer suggestions for improving future methodologies.

Key words: transcription; data analysis; multimedia; video analysis; methodologies; audio analysis; data reporting; authenticity

Table of Contents

1. A Brief History of the Role of Technology in Qualitative Inquiry

1.1 On-site research

1.2 Auditory recording and transcription

2. Continuing Challenges

2.1 Efficiency

2.2 Accuracy

2.3 Trustworthiness

2.4 Reporting

3. Emerging Technology-enhanced Research Techniques

3.1 Analysis

3.2 Reporting: Greater accuracy and thicker, more in-depth descriptions

3.3 Greater trustworthiness through a descriptive audit trail

4. Barriers to Overcome

5. Conclusion

Qualitative researchers progressively review and revise their methods for collecting and analyzing data (MONDADA, 2008; RIST, 1980; SECRIST, KOEYER, BELL & FOGEL, 2002; URRY, 1972), often not only because of the researchers' creativity but also because of a milieu of historical and cultural factors. We argue that technological advancements are often cultural catalysts for major changes in qualitative methodology, expanding our abilities to collect or analyze data. Once a new technology is recognized and employed by a few researchers to benefit specific studies, the field begins altering its methods permanently to utilize these new tools (see MALINOWSKI, 1984 [1922], for example). It could be argued that MALINOWSKI's field research in Papua New Guinea fundamentally changed the concepts of participant observation and ethnography. Slowly, others in the field followed suit, leading to major methodological shifts (CLIFFORD, 1983). While not every substantial methodological change is due to the acceptance of a novel technology, this article argues that 1. it is possible to use technological breakthroughs as bases for considering changes in qualitative methods, and 2. qualitative researchers are again on the precipice of a fundamental change in method spurred by current technological advances. By embracing these new methods, researchers can improve the efficiency and authenticity of their work. [1]

1. A Brief History of the Role of Technology in Qualitative Inquiry

1.1 On-site research

Early anthropologists often relied on previously published documents as their primary sources. James CLIFFORD (1983) summarized this approach in his contrast between the illustrated frontispiece of Father LAFITAU and the photographic frontispiece of Bronislaw MALINOWSKI: "The 1724 frontispiece of Father Lafitau's Moeurs des Savages Ameriquains portrays the ethnographer as a young woman sitting at a writing table amidst artifacts ... his author transcribes rather than originates" (p.118). [2]

Many academics, like James FRAZER, were passive collectors of data who drew their insights from information gathered by others, such as travel logs and books about people whom they had never seen. Such limitations seem to have stemmed from a lack of funds, transportation, or initiative on the part of the academic. FRAZER's once-celebrated work "The Golden Bough" (1995 [1922]) is an example of research derived largely from second-hand sources; it consists primarily of interpretation and analysis of texts by a man who personally collected almost no data (LEACH & WEISINGER, 1961). Later researchers (BEARD, 1992; DOUGLAS, 1978) have criticized works produced during that era of qualitative research, noting a variety of flaws and limitations in the scholarship of academic institutions, chiefly that analytical conclusions rested on little evidence or were fabricated outright (DOUGLAS, 1978). Today a researcher attempting such armchair research on a living population without direct interaction would be challenged as lacking authenticity (CLIFFORD, 1983). [3]

Eventually transportation technology improved, and traveling to even remote locations became possible. In addition, writing technology progressed, and typewriters, ink ribbons, and other writing equipment became small and hardy enough to be taken into the field. In 1914 Bronislaw MALINOWSKI began his expedition, sailing from August to March to the Pacific island of New Guinea to study the economy of its indigenous peoples. In his landmark book "Argonauts of the Western Pacific" (1984 [1922]), MALINOWSKI described his use of technology in field research at the time and explained his ethnographic approach. The foundations of modern methods for conducting ethnographic research and producing written field notes derive from his work (BERNARD, 2000; KIRK & MILLER, 1986). At this point technology and method merged to form a new standard of authenticity (see GUBA & LINCOLN, 1989), and anthropological researchers were expected to travel to their research sites and collect their own data (CLIFFORD, 1983). [4]

1.2 Auditory recording and transcription

Another methodological shift was brought on by advances in audio recording technology; recording and transcribing interviews became a staple of qualitative research. Before this change, qualitative research was conducted largely through handwritten field notes by on-site researchers who observed and talked with those being studied (GIBBS, FRIESE & MANGABEIRA, 2002). Not until the 1970s, when portable audio recorders could be taken directly into the field, did transcription become a viable method that allowed researchers to analyze, interpret, and report participants' own words. PATTON (2002) believed that "the creative and judicious use of technology [could] greatly increase the quality of field observations and the utility of the observational record to others" (p.308) without being obtrusive. This was a significant advancement for interpretive methods. RAPLEY (2007) wrote,

"The actual process of making detailed transcripts enables you to become familiar with what you are observing. You have to listen/watch the recording again and again. ... Through this process you begin to notice the interesting and often subtle ways that people interact. These are the taken-for-granted features of people's talk and interaction that without recordings you would routinely fail to notice, fail to remember, or be unable to record in sufficient detail by taking hand-written notes as it happened." (p.50) [5]

This process of audio recording, transcribing, and analyzing textual data is still the accepted norm. The latest methodological shift has been brought on by video analysis. Video extends researchers' ability to visit a site by enabling them to re-visit the studied scene virtually, as many times as necessary, gaining greater insight into, and a richer interpretation of, the events that transpired. Though video came on the scene during the mid-twentieth century (FULLER & MANNING, 1973), the recent ubiquity of video tools has created an explosion of interest in using video in multiple aspects of research (DERRY et al., 2010). KNOBLAUCH, BAER, LAURIER, PETSCHKE & SCHNETTLER (2008, p.4) claimed that visual data "provides a more direct record of the actual events being investigated than any of the other major forms of data collection used by social researchers". Yet, "despite pioneering work on video analysis and a century of ethnographic film making, academic practice has remained a firmly writing and text based endeavour in both its subjects of attention and practice of production" (LAURIER, STREBEL & BROWN, 2008, p.3). [6]

Computer-Assisted Qualitative Data Analysis Software (CAQDAS), digital recorders, and transcription machines and software have improved efficiency for many researchers, even though some qualitative researchers do not use such software (FIELDING, 2000). [7]

2. Continuing Challenges

Despite the advantages of audio transcription, many challenges remain in representing data through transcripts, including issues of efficiency, accuracy, trustworthiness, and reporting. [8]

2.1 Efficiency

Transcription can become one of the lengthiest aspects of the data analysis process. As one student of qualitative research remarked, "[t]he whole process of doing the transcription is lonely and tiring," and "the transcription process is intensive and tough" (ROULSTON, DeMARRAIS & LEWIS, 2003, p.657). One researcher described it as a "chore" that can take six hours for every recorded hour (AGAR, 1996, p.153). EVERS (2011), however, distinguished four varieties of transcription and remarked that, depending on the kind being done, the task could take anywhere from 4 to 60 hours for every recorded hour of data. MATHESON (2007) lamented "how slowly transcription technology was improving" (p.547), especially given how "tedious" it was (p.549). Many dissertations and studies are placed on hold for months or years until the researcher can find the time or money for transcription. For a fast typist, transcribing a single hour-long interview can take 3-4 hours; with less efficient typists, poor-quality recordings, or focus group interviews, it takes much longer. PATTON (2002) even included guidelines for conducting interviews in ways that minimize transcription pain. Many researchers avoid transcribing their own interviews by assigning the task to student assistants or by paying a professional transcriptionist, a heavy cost frequently borne by the researchers themselves. While many have argued that transcribing one's own data helps the researcher stay immersed in the data (BIRD, 2005; LAPADAT & LINDSAY, 1998; RAPLEY, 2007; TILLEY, 2003; TILLEY & POWICK, 2004), in our view the cost-benefit ratio is decidedly poor. [9]
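To make the ratios above concrete, the following Python sketch multiplies the size of a recorded corpus by the hours-per-recorded-hour figures cited in this section (3-4 hours for a fast typist, about 6 hours according to AGAR, and 4-60 hours according to EVERS). The ratio table only restates those cited figures, and the 100-hour corpus is an assumption chosen for illustration, not data from any study.

```python
# Transcription hours per recorded hour, restating the figures cited in the text.
# These are illustrative ranges, not measured constants.
RATIOS = {
    "fast typist (3-4 h)": (3.0, 4.0),
    "AGAR (1996): about 6 h": (6.0, 6.0),
    "EVERS (2011): 4-60 h": (4.0, 60.0),
}


def transcription_hours(recorded_hours: float, low: float, high: float) -> tuple[float, float]:
    """Return the (minimum, maximum) transcription hours for a recorded corpus."""
    if recorded_hours < 0:
        raise ValueError("recorded_hours must be non-negative")
    if low > high:
        raise ValueError("low ratio must not exceed high ratio")
    return recorded_hours * low, recorded_hours * high


if __name__ == "__main__":
    corpus = 100.0  # assumed corpus size in recorded hours, for illustration only
    for label, (low, high) in RATIOS.items():
        min_h, max_h = transcription_hours(corpus, low, high)
        print(f"{label}: {min_h:,.0f}-{max_h:,.0f} hours of transcription")
```

Even at the most optimistic ratio, the arithmetic shows why a large audio or video corpus can delay a study by months.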

Furthermore, transcription inefficiencies are compounded when researchers attempt to speed up the process at the expense of transcript quality. McLELLAN, MacQUEEN and NEIDIG (2003) argued that inappropriate and inadequate methods of preparing transcripts can cause setbacks in analysis and research completion, even introducing major errors into the findings. Thus attempts to improve the efficiency of transcription often backfire, causing even greater delays. [10]

2.2 Accuracy

The professed benefit of using recorded audio and video is increased authenticity. Yet transcribing spoken data inevitably loses information as the concrete event or emotional response is translated into written language, a symbolic form inherently less rich and authentic. Transcription can thus result in the loss of pragmatics, the role of context and inflection in speech. For example, the simple greeting "Hello" may be said in any number of ways that change the speaker's tone and intent. Turning audio data into text sacrifices elements of natural speech such as intonation, pause, juncture, pitch, stress, and register, which convey additional information by placing spoken words in a wider context and so deepen understanding beyond the words themselves. Research by MEHRABIAN (1971) on the communication of feelings and attitudes suggested that only about 7 percent of such information in face-to-face communication is carried by the words alone, while vocal tone carries roughly another 38 percent. However, capturing such pragmatics in symbols can be difficult, and thus in the process of transcription some meaning is lost and eventually forgotten (EMERSON, FRETZ & SHAW, 1995). [11]

Conversation analysts have developed symbols to represent these missing pieces of meaning. BAUER and GASKELL (2000) explained: "In order to facilitate analysis this recording needs to be transcribed in a system of symbols that highlights certain features of the events, while neglecting others" (p.265). But these symbols make transcription even more time-consuming, which can necessitate smaller sample sizes. Furthermore,

"conversation analysis when initiated by Harvey Sacks (1992) was not to be a disengaged inquiry of conversation, a collection of professional analysts theorising about language from the benefit of a higher ground. The analysis of conversation, urged Sacks, was to be the analysis made by speakers themselves in and through their conversation" (LAURIER et al., 2008, p.3). [12]

In other words, these symbols are not perfect representations. For example, an ellipsis ("...") cannot accurately convey the awkwardness of a 3-second silence, nor can "(overlap)" authentically show how abruptly someone disrupts a conversation. Only by listening to a speaker's pragmatic inflections can a researcher distinguish between phrases that are written identically but spoken quite differently. [13]

Consider the following excerpt from a study conducted by one of the authors. The context is a student animation design studio, and the research investigates the nature of collaborative creativity as the students develop their senior animation film. The film is about a classic David versus Goliath boxing match, and in this class period the students are discussing how to convey the vast differences between the two fighters (strong and privileged Paddy and weak Clarence) in a visual and humorous way. In this excerpt, the students discuss how they might humorously show that Paddy comes from a genteel background but is still a savage fighter.

Video 1: This and the subsequent video show examples of animation students discussing their project using gestures, nonverbal communication, and emotional outbursts. These examples represent the kinds of meaning that might be lost if these videos were transcribed for analysis. In this video, the animation students discuss through gestures how to animate one of the main characters.

The video can also be seen at http://byuipt.net/rick/FQS/FQSVideos.html. [14]

The following is a transcript of the short event, using typical conversation analysis notations (LIDDICOAT, 2007)1):

T: He's going to be chowing down on his food? ((Looking at J, who is looking down)) [overlap with inaudible utterances] I imagine him going AAARRR ((makes motion of devouring drumstick)), but then how is he going to be refined? ((holds hand up in genteel fashion))

J: We were thinking, I don't know, we were thinking he would be refined and then all of a sudden go ((pretends to devour food on a plate)) [overlaps with laughter from observer]

G1: And he could have like a little kerchief that he like pulls out of his=

<J2: After he chokes on it=

G1: Yeah ((Both G1 and J make an eating motion))

G2: After he devours everything=

G1: Yeah he just kind of and then you know=

J: Or have somebody else wipe his mouth for him > ((makes motion of wiping the corners of his mouth with a napkin))

(….........)

T: Or he could even be sitting at the table

J: Sitting with his boxing gloves on(h) ((both make the hand motion of exaggerated cutting with hands turned downwards))

heh heh heh (offscreen)

Yeah

(….........)

heh heh heh (offscreen)

A: Does he have cheese? What would he be cutting?

T: Like he'd be sitting at the table with like a handkerchief ((Both T and J make exaggerated faces and T motions tucking a handkerchief in his shirt collar))

A: Oh. I think that's an awesome contrast

T: Well, I think that (.) would be good. [15]

While the conversation analysis conventions help to describe the interactive nature of this event, the transcript fails to communicate the students' excitement about the various ideas. Especially in a study of group creativity, subtle nonverbal cues such as smiling, nodding, and showing excitement in one's eyes, along with laughter, may be enough to encourage someone to continue thinking in a certain direction and to discourage contradictory thoughts. In addition, most of what the students communicated about how they should animate the characters came through their facial expressions and gestures. Simply indicating that a student's gesture was "exaggerated" does not adequately describe the experience. [16]

In this second example, the students are still trying to collaboratively determine the nature of Paddy's character and select ways to visualize this through animation, particularly during the actual fight sequences.

Video 2: In this video, the animation students discuss different ways to animate the fight scene between the two main characters.

The video can also be seen at http://byuipt.net/rick/FQS/FQSVideos.html. [17]

The following is a transcript of this second event, using typical conversation analysis notations:

A: That could also spill over into his animation, because they had a whole style of fighting a gentleman's fight was like ... ((takes an exaggerated boxing stance and makes exaggerated boxing maneuvers))

Yeah yeah

Heh heh heh

A: It was like=

T: And then Clarence could be like

Heh heh heh

A: And he's got a style, he could like still whale on him but it could be funny (yeah) this big like "gentleman"= ((T and A make exaggerated boxing motions))

J: Jumping up and down=

A: Good job! Nice hit! ((A makes motions of absorbing a blow))

T: Clarence would be punching him and he would ((T makes exaggerated faces imitating Paddy absorbing a weak punch from Clarence and bobbing his head in positive reinforcement))

Heh heh [18]

Again in this example, the transcript loses much of the meaning, emotion, and humor of the episode as the students laugh and respond to the two main speakers' interpretation of a Paddy fight. Only through the video can a researcher begin to approach the experience of being there live and observing how the initial idea of a David versus Goliath overmatched boxing match began to emerge and take form through the interactions of the group. [19]

Emotion, together with behavior, is more readily communicated when the original source is video data. The transcript, while accurately reporting the words spoken, fails to capture the individual and collective actions and emotions that give an event its richness. Most of the transcript attempts to re-create the video through descriptive language and thus becomes as much an interpretive act as the subsequent data analysis. Adding this extra layer of interpretation risks losing data unnecessarily. [20]

Complicating matters, transcription is never theory-neutral (PSATHAS & ANDERSON, 1990); instead, it reflects the transcriptionist's biases and theoretical frameworks. LAPADAT and LINDSAY (1998) assigned five pairs of graduate students to transcribe the same book-reading session between a mother and child. They found that the transcriptionists varied in the quantity and consistency, as well as the type, of interactions they transcribed. These decisions were linked to interpretive differences between the students. The researchers concluded that the person doing the transcription subtly interprets and shapes the kind of text produced. As RAPLEY (2007) acknowledged, "[t]ranscripts are by their very nature translations—they are always partial and selective textual representations" (p.50). Because transcripts are the main or even sole source of data for many research studies, the fact that interpretation begins in the transcription process has significant implications for final research findings and conclusions. [21]

This situation is compounded by the fact that professional transcriptionists employed by researchers are often not familiar with the study's participants, research setting, theoretical foundations, or cultural nuances, and their personal biases and theoretical frameworks are never reported as part of the study's methodology. This lack of "theoretical sensitivity" (GLASER & STRAUSS, 1967) and use of personal interpretation, along with the simple reality that many recordings are of poor listening quality, can lead to transcription errors as minor as misspellings or as major as omission of key phrases, possibly causing delay and errors in the research (McLELLAN, MacQUEEN & NEIDIG, 2003). [22]

2.3 Trustworthiness

LINCOLN and GUBA (1985) suggested that to improve the trustworthiness of qualitative data, researchers should create an audit trail so that others can audit the researcher's analysis and findings. This inquiry audit serves the same purpose as replication studies in positivist paradigms, but instead of searching for perfect replication, the auditor evaluates whether the researcher's conclusions are justified by the data. LINCOLN and GUBA suggested that an audit trail should provide access to raw data, data reduction and analysis products, data reconstruction and synthesis products, process notes (a researcher's journal), and instruments. This process allows "an auditor (second party) [to] audit the decisions, analytical processes, and methodological decisions of the primary researchers (first party) ... ex post facto" (CUTCLIFFE & McKENNA, 2004, p.127). [23]

While theoretically a good idea, a useful audit trail is difficult to provide, and in our experience inquiry audits are rarely undertaken. Raw audio has typically been difficult to share with other researchers, and transcripts are often so voluminous that they cannot be included in an article or report unless the journal is published online, as FQS is, and can accommodate attached appendices. In print journals, researchers must instead subjectively choose portions of their data to share with auditors and eventually to publish in a research article or report. Readers must judge from the "thickness" (GEERTZ, 1973) of the descriptions whether they can rely on the researcher's interpretation of the data. Any reader who questions the trustworthiness of the researcher or of research findings published in print cannot typically appeal to the original data. ASHMORE and REED (2000, p.45) explained that in most reported research, "it is fragments of transcript that evidence the author's analytic claims," and that the alternative, the original untranscribed data, "is always somewhere else" and is thus "unavailable for questioning." While computerized tools for analyzing textual data can make it easier to share an audit trail with the reader, this is still rarely done, and original multimedia files are even more rarely provided with research reports. [24]

2.4 Reporting

Part technical report, part narrative art: the qualitative research article is the end goal of any qualitative study. The purpose of the write-up is to "contribute to the conversation" in a discipline (HATCH, 2002, p.221). RAPLEY (2007) stated that the benefit of transcripts is that "you can use them in the presentation of your findings" (p.50). Yet print journal space restrictions make it difficult for researchers to provide enough description and data to truly contribute to a research conversation. Because thick description is a principal means of establishing the trustworthiness of qualitative work (LINCOLN & GUBA, 1985), journal space limitations force qualitative research reports to sacrifice trustworthiness. Consequently, researchers can feel that they have not adequately reported their findings and that interested readers will not truly understand the context for the conclusions. This lack of reporting depth also undermines the teaching of qualitative research. Because so few journals allow hermeneutic units to be placed online, or have the means to host them, research articles and reports rarely contain enough detail for beginning researchers to see the inductive process behind the curtain and to consider how they might have replicated the procedures or drawn similar or contrasting conclusions from the data. To compensate, many researchers are championing the archiving of digital qualitative data for secondary analysis and re-interpretation (CORTI, 2000; CORTI, DAY & BACKHOUSE, 2000). While many challenges remain regarding anonymity and respect for the original intentions of the research participants, as well as the difficulty of determining technical standards, this movement has the potential to provide great benefits for both scholars and students. [25]

3. Emerging Technology-enhanced Research Techniques

By revisiting emerging ideas about data analysis, coding, and reporting and by reexamining our goals as researchers, we may be able to leverage what is offered by emerging digital technologies to improve our research in efficiency, accuracy, trustworthiness, and reporting. [26]

3.1 Analysis

Digital technologies enable researchers to collect a greater variety of data materials, including photographs, PDF files of participants' artifacts, and other video and audio media. Most CAQDAS programs allow for some coding of these multimedia elements, so researchers need not produce transcripts before coding when transcripts are otherwise unnecessary. For example, in the newest versions of NVivo, researchers can code the original video or audio files alongside text, and the coding instances are aggregated and reported together. PDF files (perhaps representing participant work samples) can also be coded. Other programs such as HyperResearch, MaxQDA, Transana, and Atlas.ti have similar capabilities to varying degrees (see EVERS, MRUCK, SILVER & PEETERS, 2011 for examples of different analyses conducted with these tools). Clearly this is the direction CAQDAS programs are moving, and future technology will likely feature improved multimedia coding, including the ability to code all data together regardless of medium. Perhaps even more important than the tools researchers use to analyze these data are current technological advances that enable readers to view multimedia data alongside a traditional text narrative. As researchers adopt these evolving technologies, they can maximize the benefit of each form of data, presenting the argument in text and the data in their original formats. [27]

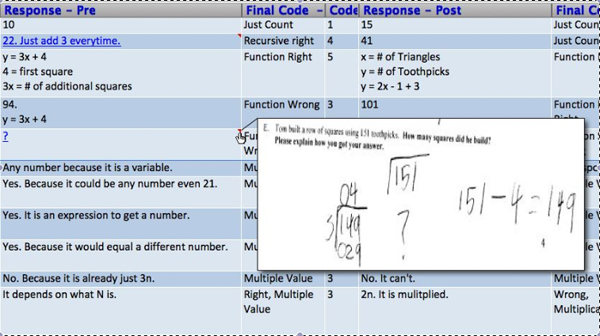

For example, one of the authors is conducting research that requires analyzing student responses to mathematical problems. The research team began transcribing responses but quickly discovered that accurately representing the students' notation and workflow was difficult. They found it necessary to scan all 63 assessments (consisting of 14 responses each) to PDF format and to enter a screenshot of each response into a spreadsheet. Scanning and screen-capture technologies allowed them to create an image of the original data, which was a simpler process than transcribing each response. After entering these data into an Excel spreadsheet, they were able to code, filter, and sort the data by response type or analytical code and thus analyze students' original responses more quickly and accurately (see Figure 1).

Figure 1: An image showing how one researcher coded original data (in PDF form) and then analyzed it in Excel [28]
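The spreadsheet workflow described above (one row per scanned response, with the researcher's code beside it) comes down to three operations: filtering, multi-column sorting, and counting. The following Python sketch shows those operations on an in-memory table. It is not the research team's actual spreadsheet; the field names, example codes, and image paths are hypothetical stand-ins for whatever columns a team would use in Excel.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedResponse:
    """One row of a hypothetical coding spreadsheet."""
    assessment_id: int  # which scanned assessment the response came from
    item: int           # which response on that assessment (e.g., 1-14)
    image_path: str     # hypothetical path to the screenshot of the original response
    code: str           # analytical code assigned by the researcher


def filter_by_code(rows: list[CodedResponse], code: str) -> list[CodedResponse]:
    """Keep only responses tagged with the given code, like a spreadsheet filter."""
    return [r for r in rows if r.code == code]


def sort_responses(rows: list[CodedResponse]) -> list[CodedResponse]:
    """Sort by code, then assessment, then item, like a multi-column sort."""
    return sorted(rows, key=lambda r: (r.code, r.assessment_id, r.item))


def code_frequencies(rows: list[CodedResponse]) -> Counter:
    """Count how many responses received each code."""
    return Counter(r.code for r in rows)


if __name__ == "__main__":
    # Made-up rows for illustration; codes and paths are hypothetical.
    rows = [
        CodedResponse(2, 5, "scans/a02_i05.png", "sign-error"),
        CodedResponse(1, 3, "scans/a01_i03.png", "correct-procedure"),
        CodedResponse(1, 7, "scans/a01_i07.png", "sign-error"),
    ]
    for r in sort_responses(filter_by_code(rows, "sign-error")):
        print(r.assessment_id, r.item, r.image_path)
    print(code_frequencies(rows).most_common())
```

Because each row points to an image of the original work rather than a transcription of it, the researcher can always return to the student's own notation when a code is in doubt.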

In addition, some programs focus specifically on coding video data. While these programs may not allow for coding text like traditional CAQDAS programs, they are perhaps more efficient and powerful for handling multimedia data and may be preferable if the core data in a qualitative study are video (see RICH & HANNAFIN, 2009 and DERRY et al., 2010 for a summary of video annotation and video analysis software). Video annotation has become so widespread that such tools are increasingly found in popular and social media. For example, the latest version of iMovie allows users to insert comments into specific video clips and to search these comments later. Viddler, a common social-media video tool, allows any lay user to upload, annotate, share, and search for specific types of videos or for comments made on portions of those videos. [29]

Video annotation tools (RICH & HANNAFIN, 2009) enable textual, audio, and video annotation of video or audio data. Research-oriented tools such as Transana®, StudioCode®, MediaNotes®, The Observer XT®, the Video Analysis Tool, and Dartfish Pro® enable researchers to go beyond simple annotation by searching, filtering, and sorting data in specific, user-defined ways, allowing them to find patterns within a single video or across large sets of videos. For example, in researching student creativity among animation students, we have found it much simpler to code the original video files using MediaNotes® than to transcribe the video conversations. These MediaNotes® codes can then be aggregated within the program or exported as a spreadsheet for further filtering and sorting in Microsoft Excel. Because of the immense volume of video data for this project (well over 100 hours of video), coding the original video data has been much more efficient and has allowed us to filter through the data more quickly than if we had required typewritten transcripts. [30]
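Once time-stamped video codes have been exported as a spreadsheet, aggregating them across many hours of footage is straightforward. The Python sketch below assumes a hypothetical CSV layout with one row per coded segment (columns `video`, `code`, `start_s`, `end_s`); this is not MediaNotes' documented export format, so the column names would need to be adapted to whatever a given annotation tool actually produces. It totals instances and coded seconds per code and lists every segment carrying a given code in order, the kind of cross-video pattern-finding described above.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export layout: one row per coded video segment.
# Adapt the column names to the actual export of the annotation tool in use.
Segment = tuple[str, str, float, float]  # (video, code, start_s, end_s)


def load_segments(csv_text: str) -> list[Segment]:
    """Parse coded segments from CSV text with video, code, start_s, end_s columns."""
    segments = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        start, end = float(row["start_s"]), float(row["end_s"])
        if end < start:
            raise ValueError(f"segment ends before it starts: {row}")
        segments.append((row["video"], row["code"], start, end))
    return segments


def summarize_by_code(segments: list[Segment]) -> dict[str, tuple[int, float]]:
    """Return {code: (number_of_instances, total_coded_seconds)}, ordered by code."""
    counts: dict[str, int] = defaultdict(int)
    seconds: dict[str, float] = defaultdict(float)
    for _video, code, start, end in segments:
        counts[code] += 1
        seconds[code] += end - start
    return {code: (counts[code], seconds[code]) for code in sorted(counts)}


def segments_with_code(segments: list[Segment], code: str) -> list[Segment]:
    """All segments carrying one code, ordered by video name and start time."""
    return sorted((s for s in segments if s[1] == code), key=lambda s: (s[0], s[2]))


if __name__ == "__main__":
    # Made-up example rows for illustration only.
    export = (
        "video,code,start_s,end_s\n"
        "session02.mov,idea-proposed,5.0,20.0\n"
        "session01.mov,idea-proposed,12.0,30.5\n"
        "session01.mov,gesture-demo,31.0,40.0\n"
    )
    segs = load_segments(export)
    for code, (n, secs) in summarize_by_code(segs).items():
        print(f"{code}: {n} instance(s), {secs:.1f} s")
    for video, _code, start, end in segments_with_code(segs, "idea-proposed"):
        print(f"{video} {start:.1f}-{end:.1f}")
```

Keeping start and end times, rather than only code labels, means each summary row can still be traced back to the exact moment in the original video.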

Video analysis tools allow us to glimpse not only the possibility of coding multimedia data, but also ways of improving eventual dissemination. For example, VITAL, developed at Teachers College, Columbia University, allows users to reference filtered video segments as hyperlinks in text-based manuscripts. Visualization and referencing of authentic data alongside refined analysis have never been more readily available than they are now. [31]

While we believe that researchers should consider the benefits of coding data in their original form (i.e., as an audio or video recording), we recognize that even visual and audio information is subject to personal bias, both of the person who captured the data and of the person who analyzes it. Douglas MACBETH (1999) described how seeing through the camera lens results in a re-constructed view of reality. In addition, ASHMORE and REED (2000, p.6) explained that "taping as an activity receives much less explicit discussion," even though the tape itself can be influenced by the researcher's paradigm, which affects how events are recorded and then viewed. Thus, while coding audio-visual data gives researchers access to more sensory information from the original research setting, it remains only an approximation, albeit closer to the original than other sources. [32]

Sometimes transcripts are still needed. The efficiency of the transcription process could be improved by using voice-recognition software. While software such as Dragon Naturally Speaking® and MacSpeech Dictate® is still not sophisticated enough to handle the "complex world of qualitative interviewing" (EVERS, 2011, p.50), these tools are remarkably capable at transcribing digital files featuring one voice. One of the authors is conducting research in which participants record reflective voice memos in answer to written prompts about their weekly experiences in a collaborative learning environment. Because each memo records only a single voice, in contrast with typical interviews, the software may well be helpful in producing a transcript. Still, the efficiency of such software remains under debate. While MATHESON (2007) claimed the entire process is over 14 percent faster, MACLEAN, MEYER and ESTABLE (2004) and JOHNSON (2011) found the overall process (including cleaning up the transcript) to take slightly more time and to be slightly less accurate. Others (EVERS, 2011; PARK & ZEANAH, 2005) have not been able to find a measurable advantage of one method over the other. Most likely, the differences in experience can be related to 1. the transcriptionist's typing speed and accuracy, 2. the amount of time spent using and training one's voice dictation software (the longer it is used with a single voice, the more accurate it becomes), and 3. one's approach to the entire process. Some researchers have explored methods for conducting interviews so that voice-recognition software can still be used for transcription (MATHESON, 2007). As voice-recognition technology and research methodologies continue to improve, there is great potential for more efficient and complete transcripts. [33]

3.2 Reporting: Greater accuracy and thicker, more in-depth descriptions

When researchers code the actual data and transcribe only what they will later need for their report, accuracy can be improved because they eliminate errors and misinterpretations introduced by outside transcribers. By coding data in their original multimedia forms, researchers can improve the accuracy of their interpretations because they can return again and again to the original data complete with all of its imbued and nonverbal meaning. Doing so allows them to actually analyze and share more data (verbal and nonverbal, see MEHRABIAN, 1971). In addition, by revisiting the actual interviews or observations, researchers can maintain a greater sense of participants' perspectives. [34]

Besides improving data analysis, digital recording technologies are now so ubiquitous that they offer new possibilities for enhanced data collection. In a research study of group creativity among student designers (WEST & HANNAFIN, in press), recall of specific interactions within the group was critical. Fearing that the participants would not be able to recall sufficient details in an end-of-semester interview, the researcher asked each participant to record a 10-minute weekly voice memo describing the group interactions for that week only. The method allowed for greater accuracy in students' recall of events, since they recorded their descriptions almost immediately after the interactions occurred. Because digital recording capabilities (built into most mp3 players and computers) are so readily available, it was not inconvenient for participants to make these brief weekly recordings, and the data collected were often more descriptive and rich than data typically produced by an interview. This was illustrated when one participant was interviewed instead and could no longer recall the pertinent events in much detail. Consequently, the case study of this participant was not as rich as those of participants who had completed weekly voice memos. [35]

In addition, snippets of original video or audio data can easily be embedded into research reports using programs such as Adobe Acrobat® or competitors such as Skim (an open-source PDF viewing and annotation tool). In the same way that those producing printed data-based reports are expected to embed images and figures, future researchers may be expected to embed snippets of their actual audio and video files. Doing so provides two immediately apparent benefits. First, readers can actually hear or see the participant(s) instead of trusting the researcher's admittedly incomplete transcription, thus increasing the trustworthiness of the research. Second, embedded audio or video files do not take up written page space, allowing an astute writer who embeds original snippets to report more data within the same page limits. Thus, embedded audio or video data enables thicker descriptions, a greater understanding of the participants' voices and perspectives, and more persuasive reporting, since watching a participant recall an emotional event can be far more compelling than reading a transcript of it. [36]

3.3 Greater trustworthiness through a descriptive audit trail

Modern technology can improve the trustworthiness of qualitative data by making it feasible to leave a sufficient audit trail for other researchers to evaluate. With online storage costs dropping, researchers, institutions, or journal and book publishers can reasonably be expected to store original data and analysis files. FIELDING (2000, p.9) argued that "contemporary qualitative researchers need to design their research with archiving in mind from the outset." A link to these files can be provided within a researcher's article. In fact, journals such as Contemporary Issues in Technology and Teacher Education, the International Journal of Learning and Media, and of course FQS already provide this option. With a carefully kept researcher's journal and access to the original data, readers could choose to retrace the researcher's logic in developing ideas during analysis. [37]

Novice researchers and teachers of qualitative research would benefit greatly from these audit trails. In many disciplines, novices develop skills by reanalyzing classic data sets. As early as 1976, the Murray Research Center established a repository for social and behavioral science data on human development and social change. As technology has advanced, the richness of the stored media has kept pace (JAMES & SORENSEN, 2000), and this richness is vitally important for the secondary analysis of qualitative studies. As FIELDING (2000, p.6) pointed out, "If the debate over epistemological issues relating to secondary analysis tells us anything, it is that it is very important that archived materials include as much information about the context of the original data collection as possible." CORTI (2000, p.22) added that "there is really no substitute for listening to people's own words" and advocated archiving the original recordings when possible. [38]

JAMES and SORENSEN further warn that,

"in order to be an effective resource for new research and contribute to minimizing the waste of data, it is important not only to preserve and document data, but also to let the research community know about the availability of the data and to provide some training in how to use it" (2000, p.2). [39]

Thus, simply storing original data is insufficient. The data must also be standardized (CORTI & GREGORY, 2011), advertised as available, and actually used. If data archiving became more of a norm, however, the field of qualitative research would benefit in many ways. For example, powerful applications might be made in a course on grounded theory methodology if we could use GLASER and STRAUSS's original data (1965) gathered for their historic research on awareness of dying; in a class on feminist theory by listening to the 135 interviews from the classic study on women's ways of knowing, along with the researchers' developing analyses (BELENKY, CLINCHY, GOLDBERGER & TARULE, 1997); in a study of the critical incident technique by examining FLANAGAN's (1952, 1954) original military studies; or in introducing hermeneutic phenomenology by going to HEIDEGGER's own analysis (2001 [1927]). [40]

This transparency is risky, of course, for both the participants (whose voices and faces would persist online) and the researchers (who would have to show their procedures in all their messiness). Participant anonymity and the standardization of analysis files across software programs remain major issues that need to be untangled, but there are potential options for overcoming these concerns (CORTI et al., 2000). One possibility for anonymity might be to turn again to computer technologies, which can distort faces in photographs or video and disguise voices in audio recordings, similar to the methods used to protect the identities of crime victims during public interviews or court testimony. This procedure is currently time-consuming (CORTI, 2000), but future improvements in facial detection software may make it easier to apply a filter that recognizes faces in a video and distorts them automatically. [41]

This level of security could help assuage participant concerns. While it may be impossible to locate former research participants to discuss these measures with them, researchers can commit to asking all future research participants for consent to archive multimedia data files, provided proper confidentiality measures are met. In our experience, our university has been supportive of this strategy, and nearly 90% of the research participants we have asked have been comfortable consenting to have their data archived for research use if it is dissociated from their names and identities. [42]

While these technologies may afford exciting adaptations to qualitative research methods, several barriers remain. The first challenge concerns the ethics of embedding participants' audio and video in articles and on the Internet. Because many participants and researchers already post far more potentially damaging information on their blogs or Facebook pages, such hurdles could likely be overcome with proper informed consent and institutional review board oversight, or with post-research anonymization techniques (CORTI et al., 2000). The greatest current threat to information privacy seems to be individuals themselves. Infosecurity Europe, an information technology trade show, conducts regular on-the-street interviews to demonstrate how readily individuals provide personal information in exchange for the chance of winning a prize as simple as a chocolate egg. In test after test, close to 100% of individuals freely divulged personal information that could put them at risk (SALTA, 2005). Thus, the ethical issue for researchers may lie not in acquiring permission to embed participants' original data in research reports, but in protecting participants' privacy interests when participants may not do so themselves. This would lead researchers to adopt ethical norms regarding what kinds of original data they would and would not embed in research reports. For example, researchers may choose to archive, publish, and share only those snippets that will not be personally damaging to their participants. This issue is similar in many ways to current ethical norms about the appropriate use of sensitive transcribed quotations. [43]

Another challenge is that reporting actual audio and video snippets may create in readers a false sense of trust in the researcher, who could still manipulate the media to portray a specific perspective. PATTON (2002) noted that "a downside to visual technology has emerged, since it is now possible to not only capture images on film and video but also change and edit those images in ways that distort" (p.308). However, such distortion is no more probable with multimedia than with text, which researchers can easily manipulate by using an ellipsis "(...)" to hide portions they do not want to reveal. If anything, greater access to raw data promises higher, not lower, representational fidelity, even though complete trust can never be placed in a single interpretation of circumstances. [44]

A lingering issue is the still-developing viability and usability of the tools themselves. Whenever new technologies are used, there are challenges, including the time required to master them, apprehension about using them, and trial and error while the researcher works out the most effective technology-enabled methods. In the research on student creativity conducted by one of the authors, the research team evaluated a popular CAQDAS tool that provides video coding, but found its video components to be clunky, time-consuming, and simply not very functional. EVERS (2011) similarly surveyed her own students and colleagues about coding original data in popular CAQDAS tools and found that most preferred working with transcripts in these tools to working with the original data. However, much of this problem may arise because current CAQDAS tools are not yet very sophisticated in multimedia coding. Tools designed specifically for video analysis are more robust, but they are often difficult to find and expensive to purchase, since they are not as widely used as the most common CAQDAS options such as NVivo and Atlas.ti. In our case, the research team finally settled on MediaNotes® because of an institutional license available at our university, but this tool is not yet widely available to other researchers. Additionally, it has been challenging to find cameras that record directly in a format MediaNotes® can use, so the files must first be compressed, which is time-consuming. The research team has found video cameras and video analysis tools to be much more complicated and proprietary than audio recorders, audio formats, and transcription tools. Thus, while the concept of coding original video data may have merit, the technologies need to be developed further to make this strategy practical. With the explosion of digital video on the Internet, it seems likely that these improvements will be forthcoming. [45]

In her discussion of coding original data, EVERS (2011) also described several important reasons why text transcripts still offer benefits over original data. These include the ability to read some portions of text documents more slowly and thoughtfully, text search features, document linking abilities, and the code aggregation features common in CAQDAS tools. These insights relate to the nuances of how most qualitative researchers conduct their analysis. It is our position that coding original data provides the many strong benefits outlined in this article, but that the concerns raised by EVERS need to be addressed in future iterations of multimedia analysis tools. In the meantime, some video analysis tools can export annotations to a spreadsheet file that can be opened in Microsoft Excel for sorting, aggregating, searching, and additional analysis. [46]
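As an illustration of the sorting, aggregating, and searching mentioned above, the following minimal Python sketch works on an exported annotation file rather than in Excel. It assumes a hypothetical CSV export with the columns start_sec, end_sec, code, and note; actual video analysis tools use their own export formats, and the sample rows are invented for illustration, not data from our studies.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export: the column names (start_sec, end_sec, code, note) and
# the rows below are illustrative assumptions, not any real tool's format.
SAMPLE_EXPORT = """start_sec,end_sec,code,note
12.0,20.5,conflict,disagreement over layout
31.0,35.0,humor,joke eases tension
48.5,70.0,conflict,returns to layout question
"""

def total_time_per_code(rows):
    """Aggregate: sum the coded duration (in seconds) for each code."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["code"]] += float(row["end_sec"]) - float(row["start_sec"])
    return dict(totals)

def search_notes(rows, term):
    """Search: return annotations whose note contains the term (case-insensitive)."""
    return [row for row in rows if term.lower() in row["note"].lower()]

rows = list(csv.DictReader(io.StringIO(SAMPLE_EXPORT)))
print(total_time_per_code(rows))
print([row["start_sec"] for row in search_notes(rows, "layout")])
```

Because each row keeps its start time, a search result can point the researcher straight back to the relevant moment in the original recording rather than to a transcript.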

Finally, the cost of purchasing the technologies must be considered, including video cameras and multimedia analysis tools. In addition, multimedia analysis tools are not widely available; common CAQDAS tools are developing multimedia capabilities, but these features are still fairly primitive. As technology continues to evolve, these tools are likely to become more sophisticated and affordable. Recently, video cameras have been developed that are nearly as affordable as audio recorders but still produce high-definition video, and many digital cameras can serve as functional video cameras. Moreover, some video analysis tools, such as Transana, are quite affordable. [47]

When qualitative research is viewed through the lens of history, we see how fundamental shifts in method are often concurrent with advancements in technology. As in earlier times, technological progress now offers the potential for more accurate, efficient, and trustworthy representations of qualitative data. PATTON (2002) stated,

"Whether one uses modern technology to support fieldwork or simply writes down what is occurring ... the nature of the recording system must be worked out in accordance with the participant observer's role, the purpose of the study, and consideration of how the data-gathering process will affect the activities and persons being observed" (p.309). [48]

Instead of accepting transcription as the de facto technique for interpretive research, we suggest continually evaluating the technological landscape and considering the emerging possibilities for improving our research designs. Given the predictable further development of these technologies, we can readily foresee a time in the near future when the tools we have discussed will be much more functional, usable, and universally affordable, lessening some of the challenges we have mentioned in this article. The question we raise is whether qualitative researchers will be ready with progressive methods to take advantage of these emerging tools. [49]

1) Explanation of symbols: (()) = comments from the transcriber about nonverbal actions; [] = overlapping speech; equals sign = speech immediately blending together without a pause; > or < = slower or faster speech; (.) = a one-second pause; ( ) = brief pause; underlined = spoken emphasis; (h) = laughing during speech.

Agar, Michael H. (1996). The professional stranger: An informal introduction to ethnography. San Diego, CA: Academic Press.

Ashmore, Malcolm & Reed, Darren (2000). Innocence and nostalgia in conversation analysis: The dynamic relations of tape and transcript. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 1(3), Art. 3, http://nbn-resolving.de/urn:nbn:de:0114-fqs000335 [Accessed: August 31, 2011].

Bauer, Martin W. & Gaskell, George D. (2000). Qualitative researching with text, image and sound: A practical handbook for social research. London: Sage.

Beard, Mary (1992). Frazer, Leach, and Virgil: The popularity (and unpopularity) of The Golden Bough. Comparative Studies in Society and History, 34(2), 203-224.

Belenky, Mary; Clinchy, Blythe; Goldberger, Nancy & Tarule, Jill (1997). Women's ways of knowing: The development of self, voice, and mind (10th ed.). New York, NY: Basic Books.

Bernard, H. Russell (2000). Social research methods: Qualitative and quantitative approaches. Newbury Park, CA: Sage.

Bird, Cindy M. (2005). How I stopped dreading and learned to love transcription. Qualitative Inquiry, 11(2), 226-248.

Clifford, James (1983). On ethnographic authority. Representations, 2, 118-146.

Corti, Louise (2000). Progress and problems of preserving and providing access to qualitative data for social research — the international picture of an emerging culture. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 1(3), Art. 2, http://nbn-resolving.de/urn:nbn:de:0114-fqs000324 [Accessed: August 31, 2011].

Corti, Louise & Gregory, Arofan (2011). CAQDAS Comparability: What about CAQDAS data exchange? Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(1), Art. 35, http://nbn-resolving.de/urn:nbn:de:0114-fqs1101352 [Accessed: August 31, 2011].

Corti, Louise; Day, Annette & Backhouse, Gill (2000). Confidentiality and informed consent: Issues for consideration in the preservation of and provision of access to qualitative data archives. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 1(3), Art. 7, http://nbn-resolving.de/urn:nbn:de:0114-fqs000372 [Accessed: August 31, 2011].

Cutcliffe, John R. & McKenna, Hugh P. (2004). Expert qualitative researchers and the use of audit trails. Journal of Advanced Nursing, 45(2), 126-135.

Derry, Sharon J.; Pea, Roy D.; Barron, Brigid; Engle, Randi A.; Erickson, Frederick; Goldman, Rikki; Hall, Rodgers; Koschmann, Timothy; Lemke, Jay L.; Sherin, Miriam G. & Sherin, Bruce L. (2010). Conducting video research in the learning sciences: Guidance on selection, analysis, technology, and ethics. Journal of the Learning Sciences, 19(1), 3-53.

Douglas, Mary (1978). Judgments on James Frazer. Daedalus, 107(4), 151-164.

Emerson, Robert M.; Fretz, Rachel I. & Shaw, Linda L. (1995). Writing ethnographic fieldnotes. Chicago, IL: University of Chicago Press.

Evers, Jeanine C. (2011). From the past into the future. How technological developments change our ways of data collection, transcription, and analysis. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(1), Art. 38, http://nbn-resolving.de/urn:nbn:de:0114-fqs1101381 [Accessed: August 31, 2011].

Evers, Jeanine C.; Mruck, Katja; Silver, Christina & Peeters, Bart (Eds.) (2011). The KWALON Experiment: Discussions on Qualitative Data Analysis Software by Developers and Users. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(1), http://www.qualitative-research.net/index.php/fqs/issue/view/36 [Accessed: August 31, 2011].

Fielding, Nigel (2000). The shared fate of two innovations in qualitative methodology: The relationship of qualitative software and secondary analysis of archived qualitative data. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 1(3), Art. 22, http://nbn-resolving.de/urn:nbn:de:0114-fqs0003224 [Accessed: August 31, 2011].

Flanagan, John C. (1952). The critical incident technique in the study of individuals. Washington D.C.: American Council on Education.

Flanagan, John C. (1954). The critical incident technique. Psychological Bulletin, 51(4), 327-358.

Frazer, James G. (1922). The golden bough. New York, NY: Touchstone.

Fuller, Francis F. & Manning, Brad A. (1973). Self-confrontation reviewed: A conceptualization for video playback in teacher education. Review of Educational Research, 43(4), 469-528.

Geertz, Clifford (1973). The interpretation of cultures. New York, NY: Basic Books.

Gibbs, Graham R.; Friese, Susanne & Mangabeira, Wilma C. (2002). The use of new technology in qualitative research. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 3(2), Art. 8, http://nbn-resolving.de/urn:nbn:de:0114-fqs020287 [Accessed: February 17, 2011].

Glaser, Barney G. & Strauss, Anselm (1965). Awareness of dying. New Brunswick, NJ: AldineTransaction.

Glaser, Barney G. & Strauss, Anselm L. (1967). The discovery of grounded theory. Chicago, IL: Aldine.

Guba, Egon G. & Lincoln, Yvonna S. (1989). Personal communication. Beverly Hills: Sage.

Hatch, J. Amos (2002). Doing qualitative research in education settings. Albany: State University of New York Press.

Heidegger, Martin (2001 [1927]). Sein und Zeit. Tübingen: Akademie-Verlag.

James, Jacquelyn B. & Sorensen, Annemette (2000). Archiving longitudinal data for future research. Why qualitative data add to a study's usefulness. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 1(3), Art. 23, http://nbn-resolving.de/urn:nbn:de:0114-fqs0003235 [Accessed: August 31, 2011].

Johnson, Brian E. (2011). The speed and accuracy of voice recognition software-assisted transcription versus the listen-and-type method: A research note. Qualitative Research, 11(1), 91, doi:10.1177/1468794110385966.

Kirk, Jerome & Miller, Marc L. (1986). Reliability and validity in qualitative research. Newbury Park, CA: Sage.

Knoblauch, Hubert; Baer, Alejandro; Laurier, Eric; Petschke, Sabine & Schnettler, Bernt (2008). Visual analysis. New developments in the interpretative analysis of video and photography. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 9(3), Art. 14, http://nbn-resolving.de/urn:nbn:de:0114-fqs0803148 [Accessed: August 31, 2011].

Lapadat, Judith C. & Lindsay, Anne C. (1998). Examining transcription: A theory-laden methodology. Paper presented at the Annual Meeting of the American Educational Research Association in San Diego, CA on April 13-17.

Laurier, Eric; Strebel, Ignaz & Brown, Barry (2008). Video analysis: Lessons from professional video editing practice. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 9(3), Art. 37, http://nbn-resolving.de/urn:nbn:de:0114-fqs0803378 [Accessed: August 31, 2011].

Leach, Edmund R. & Weisinger, Herbert (1961). Reputations. Daedalus, 90(2), 371-399.

Liddicoat, Anthony J. (2007). An introduction to conversation analysis. London: Continuum.

Lincoln, Yvonna S. & Guba, Egon G. (1985). Naturalistic inquiry. Newbury Park, CA: Sage.

MacBeth, Douglas (1999). Glances, trances and their relevance for a visual sociology. In Paul L. Jalbert (Ed.), Media studies: Ethnomethodological approaches (pp.135-170). Lanham, MD: University Press of America.

MacLean, Lynne; Meyer, Mechthild & Estable, Alma (2004). Improving accuracy of transcripts in qualitative research. Qualitative Health Research, 14(1), 113-123.

Malinowski, Bronislaw (1984 [1922]). Argonauts of the Western Pacific. Prospect Heights: Waveland.

Matheson, Jennifer L. (2007). The voice transcription technique: Use of voice recognition software to transcribe digital interview data in qualitative research. Qualitative Report, 12(4), 547-560, http://www.nova.edu/ssss/QR/QR12-4/matheson.pdf [Accessed: August 31, 2011].

McLellan, Eleanor; MacQueen, Kathleen M. & Neidig, Judith L. (2003). Beyond the qualitative interview: Data preparation and transcription. Field Methods, 15(1), 63-84.

Mehrabian, Albert (1971). Silent messages. Belmont, CA: Wadsworth.

Mondada, Lorenza (2008). Using video for a sequential and multimodal analysis of social interaction: Videotaping institutional telephone calls. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 9(3), Art. 39, http://nbn-resolving.de/urn:nbn:de:0114-fqs0803390 [Accessed: August 31, 2011].

Park, Julie & Zeanah, A. Echo (2005). An evaluation of voice recognition software for use in interview-based research: A research note. Qualitative Research, 5(2), 245-251.

Patton, Michael Q. (2002). Qualitative research & evaluation methods (3rd ed.). Thousand Oaks, CA: Sage.

Psathas, George & Anderson, Timothy (1990). The "practices" of transcription in conversation analysis. Semiotica, 78(1/2), 75-99.

Rapley, Tim (2007). Doing conversation, discourse and document analysis. London: Sage.

Rich, Peter & Hannafin, Michael (2009). Video annotation tools: Technologies for scaffolding and structuring teacher reflection. Journal of Teacher Education, 60(1), 52-67.

Rist, Ray C. (1980). Blitzkrieg ethnography: On the transformation of a method into a movement. Educational Researcher, 9, 8-10.

Roulston, Kathryn; deMarrais, Kathleen & Lewis, Jamie B. (2003). Learning to interview in the social sciences. Qualitative Inquiry, 9(4), 643-668.

Salta, Anne (2005). Security no match for theater lovers, http://searchsecurity.techtarget.com/news/article/0,289142,sid14_gci1071265,00.html [Accessed: February 25, 2011].

Secrist, Cory; Koeyer, Ilse; Bell, Holly & Fogel, Alan (2002). Combining digital video technology and narrative methods for understanding infant development. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 3(2), Art. 24, http://nbn-resolving.de/urn:nbn:de:0114-fqs0202245 [Accessed: August 31, 2011].

Tilley, Susan A. (2003). "Challenging" research practices: Turning a critical lens on the work of transcription. Qualitative Inquiry, 9(5), 750-773.

Tilley, Susan A. & Powick, Kelly D. (2004). Distanced data: Transcribing other people's research tapes. Canadian Journal of Education, 27, 291-310.

Urry, James (1972). "Notes and queries on anthropology" and the development of field methods in British anthropology, 1870-1920. Proceedings of the Royal Anthropological Institute of Great Britain and Ireland, 45-57.

West, Richard E. & Hannafin, Michael J. (in press). Learning to design collaboratively: Participation of student designers in a Community of Innovation. Instructional Science. doi:10.1007/s11251-010-9156-z.

Videos

Video_1: http://www.youtube.com/v/dV1Ngeowui0&hl=en_US&fs=1&rel=0&color1=0x2b405b&color2=0x6b8ab6 (425 x 355)

Video_2: http://www.youtube.com/v/uuyPn9Fxqxc&hl=en_US&fs=1&rel=0&color1=0x2b405b&color2=0x6b8ab6 (425 x 355)

Tom MARKLE is a visiting assistant professor with research interests in the influence of formal education on belief change, conceptual change modeling, and enhancing qualitative research using technology.

Contact:

D. Thomas Markle

Department of Individual, Family, and Community Education

123 Simpson Hall

University of New Mexico

Albuquerque, NM 87131, USA

Tel.: +1 505 277-3960

E-mail: markle@unm.edu

Richard WEST is an assistant professor and evaluation consultant with research interests in the integration of technology into teaching, collaborative creativity and learning, and distance education.

Contact:

Richard E. West

Instructional Psychology & Technology Department

150-H MCKB

Brigham Young University

Provo, UT 84602, USA

Tel.: +1 801 422-5273

E-mail: rickwest@byu.edu

Peter RICH is an assistant professor researching how teachers can effectively use video annotation tools to understand and improve their teaching.

Contact:

Peter J. Rich

Instructional Psychology & Technology Department

150-K MCKB

Brigham Young University

Provo, UT 84602, USA

Tel.: +1 801 422-1171

E-mail: peter_rich@byu.edu

Markle, D. Thomas; West, Richard E. & Rich, Peter J. (2011). Beyond Transcription: Technology, Change, and Refinement of Method [49 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(3), Art. 21, http://nbn-resolving.de/urn:nbn:de:0114-fqs1103216.