Volume 17, No. 3, Art. 11 – September 2016

Don't Blame the Software: Using Qualitative Data Analysis Software Successfully in Doctoral Research

Michelle Salmona & Dan Kaczynski

Abstract: In this article, we explore the learning experiences of doctoral candidates as they use qualitative data analysis software (QDAS). Of particular interest is the process of adopting technology during the development of research methodology. Using an action research approach, data was gathered over five years from advanced doctoral research candidates and supervisors. The technology acceptance model (TAM) was then applied as a theoretical analytic lens for better understanding how students interact with new technology.

Findings relate to two significant barriers which doctoral students confront: 1. aligning perceptions of ease of use and usefulness is essential in overcoming resistance to technological change; 2. transparency into the research process through technology promotes insights into methodological challenges. Transitioning through both barriers requires a competent foundation in qualitative research. The study acknowledges the importance of higher degree research, curriculum reform and doctoral supervision in post-graduate research training together with their interconnected relationships in support of high-quality inquiry.

Key words: doctoral education; qualitative methodology; dissertation research; qualitative data analysis software; QDAS; technology acceptance model; TAM; action research

Table of Contents

1. Introduction

2. Using Technology

3. Qualitative Data Analysis Software

4. Adult Learning Theory

5. Study Methodology

5.1 Technology acceptance model as analytic lens

5.2 Qualitative data analysis software (QDAS)

6. Findings

6.1 Barrier 1: How easy to learn and how useful will it be?

6.2 Barrier 2: Methodological transparency

7. Conclusions and Scholarly Importance

1. Introduction

The study reported in this article explored the changing practices of qualitative research during the social science research phase of doctoral studies. Data was gathered in multiple settings around the world, including methods workshops and classes. A better understanding of doctoral student methodological decision making in relation to the use of qualitative data analysis software (QDAS) is the focus of this article. [1]

The doctoral experience commonly involves student engagement in some form of structured, guided instruction which eventually evolves into individualized, self-directed inquiry. During the development of a dissertation, students refine the skills through which they will ultimately demonstrate mastery of research methodology. Illuminating these practices is an ongoing struggle for the doctoral dissertation student, as it is for the novice qualitative researcher. A better understanding of these challenges may benefit dissertation supervision. [2]

A key step for the student is demonstrating competency in recognizing and judging high-quality research. Judging the quality of qualitative research has, however, proven highly contentious in the social sciences. Calls for greater transparency in qualitative inquiry were raised during the paradigm wars (BREDO, 2009; GAGE, 1989) and have continued over the past 30 years, partly to improve the methods and reporting of qualitative research (ANFARA JR., BROWN & MANGIONE, 2002; FREEMAN, DeMARRAIS, PREISSLE, ROULSTON & ST. PIERRE, 2007; MOSS et al., 2009). A common view in this literature has been to measure quality in terms of an end product, with discussion centered primarily on the presentation and reporting of final results. Doctoral candidates, however, are expected to be fully engaged in the entire research process as they produce a high-quality dissertation, and the challenge for them remains finding a way through the murky journey of the dissertation research process. In the study reported here, examining that process offers insights for both candidates and supervisors. [3]

Making the research process visible can be challenging for students, especially during the developing stages of a qualitative dissertation study. The need for greater transparency continues to grow as doctoral candidates and supervisors seek advances in research methods that promote high-quality qualitative practice. Constructive critical insights require the ability to examine the intricate thinking behind the design, analysis and integration of theoretical frameworks. Both philosophical and procedural criteria must enter into this examination. [4]

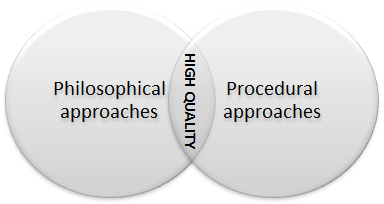

Judging the quality of qualitative research draws upon philosophical and procedural frameworks (KACZYNSKI, 2006; SILVERMAN, 2004). As depicted in Figure 1, these approaches ideally overlap as researchers apply elements of each when determining worth. Operating from a philosophical perspective, the scholar examines evidence of authenticity, credibility, transferability, dependability, and confirmability (LINCOLN, 1995; LINCOLN & GUBA, 1985). Procedural criteria, in contrast, tend to be more prescriptive and mechanical. Using procedural criteria to judge the quality of qualitative research is appealing because standardized checklists and matrices can be applied efficiently (AERA TASK FORCE ON REPORTING OF RESEARCH METHODS IN AERA PUBLICATIONS, 2006; BROMLEY et al., 2002; COBB & HAGEMASTER, 1987; CRESWELL, 2003).

Figure 1: Judging quality in qualitative research [5]

Regrettably, judging quality solely by procedural criteria, without due consideration of philosophical criteria, fails to capture the complex heterogeneity of qualitative research. FREEMAN et al. (2007) strongly opposed the formation of "a set of standards of evidence that may be taken up by others and used as a checklist to police our work" (p.29). Rather than setting measurable, scale-based standards, researchers are encouraged to adopt high-quality research practices which promote quality across the diverse forms of qualitative inquiry. The overlap and interplay of philosophical and procedural criteria depicted in Figure 1 represents such an area of transparent common ground, where high quality and credibility may best flourish. [6]

In this discussion, transparency is defined as the researcher making the research process visible to both him-/herself and others. The ability to look inside a qualitative study allows the researcher to engage in an emergent process of quality improvement, and sharing these insights with others further enhances credibility with a larger audience. This process of transparency requires access to the design thinking, methods, qualitative theoretical orientation and analysis (SALMONA, KACZYNSKI & SMITH, 2015) which the qualitative researcher has adopted. [7]

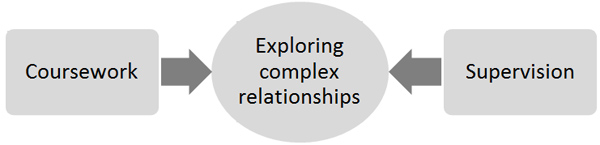

A major benefit of greater methodological transparency is increased research quality. Researchers have the potential to enhance quality dramatically as the use of QDAS becomes standard practice. On this point, Di GREGORIO and DAVIDSON cautioned that "sloppiness will be painfully apparent" (2008, p.40) under this heightened insight. As a result, research methods will benefit as the credibility and transferability of high-quality qualitative inquiry are enhanced through transparency. A commitment to the ongoing challenge of supporting the exploration of high standards of research quality is essential. [8]

This discussion of judging research quality and promoting autonomous adult learning provides a framework for exploring the growing use of technology during the research process. Learning to use a tool as a student and learning to use it as a teacher involve two different skill sets (WAYCOTT, BENNETT, KENNEDY, DALGARNO & GRAY, 2010). The use of technology as a research tool involves the development of a distinct set of competencies. For the supervisor, engaging in learning during the process stages of coursework and supervision is invaluable. Insights from the learning practices of post-graduate students can potentially reveal the changing applications of technology. The growing use of QDAS is one such example, and "working without computers is no longer an option for qualitative (or indeed any) researchers" (RICHARDS, 2015, p.4). Teachers can better teach research competencies by examining the thinking of student researchers as they engage in research design using QDAS. Reform of coursework, addressing diverse adult student learning styles, promoting transparency through technology, and supervision of research all represent pieces of a complex puzzle. Exploring these relationships among student learning, research training and supervision opens significant paths for this inquiry. [9]

Reflecting on the international delivery and consumption of student learning, research training and supervision can broaden our understanding of doctoral education practices. This includes current practices in the delivery of post-graduate qualitative research courses, which increasingly incorporate and integrate technological research tools. These relationships connect doctoral candidates, teachers, and supervisors in preparing for and promoting changes in the pedagogy of research methods. [10]

The next section discusses the growing role of technology in doctoral dissertation research. Section 3 then provides an overview of software adoption from a historical perspective on qualitative methodology's changing relationship with technology. Section 4 connects the study to the learning styles of doctoral students, and the study methodology is presented in Section 5. The findings in Section 6 identify two key barriers to technology adoption. The conclusion in Section 7 then considers how our understanding of optimal uses of technology in support of teaching and learning processes may be advanced. [11]

2. Using Technology

Technology is recognized as a key force in bringing about changes in teaching (NESHYBA, 2013) and in the delivery of research methods. The use of technology to improve teaching, however, is moderated by concerns that best practices be appropriately supported with evidence. Technology-based instructional delivery should not, in itself, be construed as evidence-based reform of teaching and learning (PRICE & KIRKWOOD, 2014). The quantity and quality of technological tools supporting research inquiry, however, continue to grow. President John HENNESSY of Stanford University (BOWEN, 2013) contended that digital trends in education are potentially overwhelming. He warned that "[t]here's a tsunami coming. [But] I can't tell you exactly how it's going to break" (p.1). The growing complexity of problem solving in the 21st century is increasingly driving advancements in educational technology to support teaching and learning and to broaden the thresholds of knowledge. [12]

An example of the growing scope of digital trends in graduate education is the delivery of a doctoral degree entirely through the internet. Coursework, supervision, data gathering, analysis, dissertation defense, and publication are all tasks which can now be completed online, and the software programs supporting each of these tasks continue to improve in quality. Universities are increasingly adopting some or all of these components as doctoral candidates pursue degree completion. A rapidly growing body of literature on the fit of QDAS is informing critical inquiry into the use of technology in doctoral research. [13]

FQS has published discussions of this trend in many articles and in three special issues on the use of technology in the qualitative research process: 1. GIBBS, FRIESE and MANGABEIRA (2002) edited an issue devoted to "Using Technology in the Qualitative Research Process," which drew attention to digital analysis, particularly of audio, video, and photographic data; 2. "The KWALON Experiment: Discussions on Qualitative Data Analysis Software by Developers and Users," edited by EVERS, MRUCK, SILVER and PEETERS (2011), presented papers from KWALON, an international conference featuring analysis software development, and clearly supported the relevant discourse on the digital trend; and 3. "Qualitative Computing: Diverse Worlds and Research Practices," edited by CISNEROS, DAVIDSON and FAUX (2012), again featured KWALON, with the editors emphasizing the evaluation of the international impact of digital analysis. [14]

It is generally assumed that students enter doctoral research with a known set of skills; this is not so. Building conceptual theoretical knowledge into qualitative inquiry demands the continual weaving of theory and philosophy throughout the design and analysis of a study (BERG, 2004; PATTON, 2015). In addition, social science research competencies must be attuned to the complexity of idiosyncratic social problems. As doctoral candidates refine their research design and progress through the dissertation, their degree of engagement evolves along with their need for progressively more advanced research skills. This need for advanced knowledge increasingly involves an ever-expanding relationship with technology: online literature review databases (Ó DOCHARTAIGH, 2012), concept mapping tools (MARTELO, 2011), bibliographic software programs such as EndNote, and a growing range of QDAS programs are now commonplace in social science research. Engaging in high-quality research increasingly requires making visible the integration of competency-based technical skills with the application of methodological knowledge. Technological tools represent the fastest-changing segment of qualitative research through which to make visible this complex, fluid thinking of the researcher. What we as teachers and supervisors of qualitative doctoral inquiry do with this increased use of technology to explore the transparency of doctoral student inquiry, however, remains largely unexplored in the literature. [15]

3. Qualitative Data Analysis Software

In the 1990s the use of data analysis software rapidly gained attention among qualitative researchers. Until then, qualitative researchers had relied on index or system cards to hold and catalog data and research notes. Indexing is a systematic way of classifying data documents by index terms in order to summarize their content or make them easier to retrieve. Early leaders in technology adoption, Tom and Lyn RICHARDS (1991, 1994a, 1994b), recognized the benefits of using QDAS to promote transparency into the emergent process of qualitative inquiry: "An early goal was that any index system must be sufficiently flexible to receive and respond to new categories as they emerge" (1991, p.250). Moving from manual indexing systems to automated index/filing systems allowed the researcher to ask more complicated questions. This flexible use of technology allowed the researcher to build code structures which supported the emergence of new categories, and to rebuild and explore complexities of meaning which had previously been daunting to handle manually. The authors cautioned, however, that

"having achieved the goal of progressive interrogation of emerging patterns, we rapidly discovered that it is easy to get lost in the analytical paths down which the data could lead ... the goal is not to automate analysis but to expose analytical processes to all possibly relevant information in flexible structures" (p.252). [16]

From this early insight we can recognize the importance of the researcher driving the path of the inquiry, and of firmly relegating QDAS to the role of a technological tool. [17]
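The indexing idea described by RICHARDS and RICHARDS can be illustrated with a minimal, hypothetical sketch in Python. It is not how NVivo, Dedoose or any other QDAS package is implemented; it simply assumes that data are stored as numbered text segments and that each index category (code) is a named set of segment identifiers. New categories can be created at any point, existing categories can be merged when the code structure is rebuilt, and a "more complicated question" is represented here as a query for segments indexed under two categories at once. The class and method names (`FlexibleIndex`, `code`, `merge`, `co_occurring`) and the example segments are invented for illustration.

```python
from collections import defaultdict


class FlexibleIndex:
    """Hypothetical index system: each category (code) maps to a set of segment IDs."""

    def __init__(self, segments):
        # segments: dict mapping a segment ID to the text of that data segment
        self.segments = dict(segments)
        # defaultdict lets a new category come into existence the first time it is used
        self.index = defaultdict(set)

    def code(self, category, *segment_ids):
        """Index one or more existing segments under a category, creating it if new."""
        for sid in segment_ids:
            if sid not in self.segments:
                raise KeyError(f"unknown segment: {sid}")
            self.index[category].add(sid)

    def merge(self, new_category, *old_categories):
        """Rebuild the code structure by folding several categories into one."""
        merged = set()
        for cat in old_categories:
            # pop() removes the old category; a missing category contributes nothing
            merged |= self.index.pop(cat, set())
        self.index[new_category] |= merged

    def co_occurring(self, cat_a, cat_b):
        """Return, in sorted order, the IDs of segments indexed under both categories."""
        # .get() avoids creating empty categories as a side effect of querying
        return sorted(self.index.get(cat_a, set()) & self.index.get(cat_b, set()))


if __name__ == "__main__":
    # Invented placeholder segments, not data from this study
    idx = FlexibleIndex({1: "segment one", 2: "segment two", 3: "segment three"})
    idx.code("category A", 1, 2)
    idx.code("category B", 2, 3)
    print(idx.co_occurring("category A", "category B"))  # [2]
    # A new category emerges later in the analysis and absorbs the earlier two
    idx.merge("category C", "category A", "category B")
    print(idx.co_occurring("category C", "category C"))  # [1, 2, 3]
```

In keeping with the RICHARDS' caution, the sketch only stores and retrieves: deciding which categories exist, when to merge them, and what a co-occurrence means remains the researcher's analytic work.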

More recently, Di GREGORIO and DAVIDSON (2008) contended that judging the quality and strength of a qualitative design requires careful examination of the "actual data, the coding system and its application to segments of data. Portability and transparency, two key features of QDAS, make this possible. For the experienced QDAS users, the software design and the substance are inextricably interwoven" (p.54). The growing use of QDAS implies that it will eventually become a standard in qualitative inquiry. [18]

DAVIDSON and Di GREGORIO (2012) provided a historical overview of QDAS development which emphasized qualitative methodology's ongoing relationship with technology. Their discussion was framed around eight moments in qualitative research, spanning the early 1900s and extending into the future, together with their implications. This continuing journey for academics and developers includes honoring the tenets of qualitative methodology which were built in the past and will shape the future. [19]

Doctoral candidates are very much a part of this technological journey of change in qualitative research practice. Teachers, dissertation chairs, thesis supervisors, and panel members are all instrumental in promoting research quality and curricular reform. Acknowledging the role of self-directed heuristic inquiry by the student, however, is an essential and distinctive ingredient in doctoral studies. What doctoral students do with greater insight into their own research thinking is central to the purpose of this article. [20]

4. Adult Learning Theory

Understanding adult learning is a complex endeavor, with ideas ranging from the self-directed learner to the autonomous learner. Research students are expected to become self-directed learners who assume primary responsibility for planning, implementing, and even evaluating their independent efforts (CANDY, 1991). As adult learners, post-graduate students are expected to fully adopt this path toward self-directed heuristic knowledge generation as they move toward completion of a terminal degree (AUSTIN, 2011; LOVITTS, 2008; WALKER, GOLDE, JONES, BUESCHEL & HUTCHINGS, 2008). Most learners, when asked, will proclaim a preference for assuming such responsibility whenever possible. BIGGS' (1999) "3P" model of learning and teaching described three points in time at which learning-related factors are placed: presage, before learning takes place; process, during learning; and product, the outcome of learning. RAMSDEN's (2003) view of student learning in context also supports the value of self-directed knowledge generation. Several things are known about teaching and self-directed learning: individual learners can become empowered to take increasing responsibility for various decisions associated with the learning endeavor; self-direction does not necessarily mean that all learning takes place in isolation from others; and self-directed learners appear able to transfer learning, in terms of both knowledge and study skills, from one situation to another. [21]

Autonomous learning is often associated with independence of thought, individualized decision-making and critical intelligence. BOUD (1988) offered several ideas on developing student autonomy, and CANDY (1991) suggested that continuous learning is a process in which adults manifest attributes of personal autonomy in self-managing their learning efforts. CANDY also profiled various characteristics of autonomous learners, including the use of technology, which provides, or seemingly provides, graduate students with the opportunity to take more responsibility for their own learning. [22]

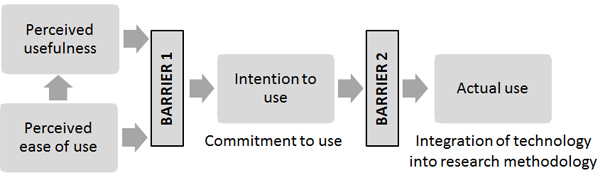

Good teaching is recognized as student-centered, flexible and inclusive of student diversity. BIGGS (2003) proposed that "good teaching is getting most students to use the higher cognitive level processes, which the more academic students use spontaneously" (p.5). For BIGGS, good teaching rests on the same platform that underlies the work of other respected writers in the field, such as LAURILLARD (2002) and RAMSDEN (2003), who posited that what the learner does is actually more important than what the teacher does. This extends the discussion to the essential point that university teaching is about helping students engage in learning (CLARK, 1997; LAURILLARD, 1997; SALMONA, 2009). In acknowledging this premise, we recognize that further exploration of student learning of research methodology, and of the role of doctoral supervision, is needed in higher education internationally. [23]

It is important to note that the idea of teaching to learning styles is in reality highly complex. BROOKFIELD (1990) put forward the idea that, rather than only affirming the comfortable ways in which students go about their learning, teachers can also introduce them to alternative modes of learning. In this way, when students become real-world practitioners who are expected to demonstrate the skills to integrate technology into research methods, they will not be totally thrown by the experience, having already been introduced to a diversity of ways of planning and conducting such learning. [24]

5. Study Methodology

The focus of our study was to better understand doctoral student methodological decision making in relation to the use of QDAS. Exploring these complex relationships gave us greater insight into the development of research thinking. The following research questions further clarified the direction of the study.

How do doctoral students incorporate technology into qualitative data analysis?

What helps or hinders this process?

How do doctoral students conceptualize quality in data analysis? [25]

We are experienced international higher education academics. Based in the United States and Australia, and with over 25 years of work with doctoral students between us, we were able to draw upon the delivery of doctoral research classes, QDAS master class training in numerous locations internationally, and ongoing doctoral supervision.

Figure 2: Doctoral education delivery [26]

As shown in Figure 2, doctoral candidate supervision and the delivery of research methods coursework may be viewed as separate components of doctoral education. In the United States, for example, traditional delivery involves candidate enrollment in structured doctoral programs which are predominantly composed of coursework. In comparison, current Australian higher education practice is commonly based on a strong one-to-one relationship with the supervisor and typically requires minimal doctoral-level coursework. As a result, it is an increasingly common view in Australia that while Australian doctoral candidates develop strong depth and breadth of knowledge in their field, they are comparatively weak in terms of research skills. The United States reliance on coursework may be seen as offering greater training in research skills, possibly at the expense of a weaker autonomous learning foundation for the doctoral candidate. These simplified generalizations of the United States and Australian models are presented to help frame a delimitation of this study within a much larger body of literature in higher education; it is not our intention in this article to discuss the international diversity of doctoral education models. Readers may refer to AUSTIN (2011), KILEY and PEARSON (2008), and WALKER et al. (2008) for more detailed discussions of the global diversity of doctoral education delivery. [27]

We used an action research approach to frame our study design, as it supports a strongly iterative and cyclical framework. As a two-member research team, our background and ongoing work with doctoral students enabled us to follow a flexible, emergent research design process within the action research approach. The action research cycle of planning, implementation, and reflection, followed by formative revision (KOSHY, 2005; STRINGER, 2007), was maintained throughout the study to explore related meanings and identify paths to promote reforms in practice. [28]

The study involved three action research cycles. The first cycle involved gathering data in research methods classes and then reflecting on the analysis of that data. The second cycle was the delivery of QDAS master class training seminars, whose development was informed by the data analysis and reflection from the first cycle. Finally, the third cycle, informed by the data analysis and findings from the previous cycles, involved the supervision of doctoral students actively engaged in dissertation research. Participant selection for the study applied purposeful sampling of information-rich cases (PATTON, 2015, p.264). This strategy involved criterion-based selection on the basis of doctoral student status, dissertation research in the social sciences, and expressed interest in the application of QDAS. [29]

The first planned action research cycle focused on doctoral students in research methods classes. Data was gathered to better understand how these students were learning the use of technology in their class setting. As we were actively engaged in teaching these courses, it was important to maintain anonymity of the participants and focus on the actual learning process. We were able to gather sufficient data using observations, teaching and training documents and structured open-ended questionnaires, without the need for interviews. Doctoral student participants in this cycle were post-graduate students from two universities in the United States and from two universities in Australia. [30]

In action research, the reflective stage of the iterative cycle offers a means of exploring deeper meanings which support refinements in professional practice and growth. BOUD, KEOGH and WALKER (1985) explain that "reflection is an important human activity in which people recapture their experience, think about it, mull it over and evaluate it. It is this working with experience that is important in learning" (p.19). Moving away from the action allows space for critical reflection on what has happened. Using Dedoose (cloud-based mixed methods data analysis software) to manage and build connections in our data, we were able to give ourselves space to reflect on our understanding of the research focus and to refine the flexible, emergent research design to be more robust and trustworthy, which in turn informed the design and development of the second action research cycle. [31]

Cycles two and three involved data gathering for a further two years (see Table 1 for a more detailed and specific description of the data gathering process). For the second cycle data was gathered from a series of 20 international QDAS master class training seminars conducted in Australasia, Europe, and the United States. For cycle three we were supervising doctoral students in the United States and Australia who were actively engaged in qualitative research design. Ethics approval was granted by our universities. [32]

Data triangulation, as described in Table 1, strengthened our study by including observations and field notes; memos which documented reflective methods and analytic issues (KACZYNSKI & KELLY, 2004; KACZYNSKI, SALMONA & SMITH, 2014); informal open-ended interviews; training and teaching documents; and responses from structured open-ended questionnaires. We managed this large amount of data using Dedoose, which strongly supports real-time online collaboration for data analysis. The software also allowed us to work with the data either filtered by cycle or as a whole, so that the analysis could be integrated into our findings. See Section 5.1 for more detail about the analysis process.

| Data source | Participants | Time | Location | Data |
|---|---|---|---|---|
| Doctoral students in research methods courses | 46 | 5 years | USA, AU | Structured open-ended questionnaires; observations; teaching and training documents |
| 20 international QDAS master class training seminars (average of 12 students per class) | 240 | 2 years | USA, EU, Australasia | Observations; field notes; memos; teaching and training documents |
| Supervision of doctoral students actively engaged in dissertation research | 24 | 2 years | USA, AU | Observations; field notes; memos; informal interviews |

Table 1: Data gathering [33]

5.1 Technology acceptance model as analytic lens

During the analysis phases we realized that, to answer our research questions, we needed to understand how users learn about and use technologies. We therefore reviewed the literature on technology adoption and acceptance from the information systems body of knowledge. Concepts from the technology acceptance model (TAM) (DAVIS, 1986, 1989) resonated with our own experiences, and TAM is a widely cited model for understanding user motivation and predicting system use. Our reflections in the analysis phase of the action research were enhanced by drawing upon elements of TAM as a theoretical analytic lens for better understanding how students interact with new technology. As discussed earlier, positioning transparency within research development allows for an examination of the relationships between emerging research technology and the research student learning process. Understanding acceptance or rejection of a new system has proven to be an extremely challenging issue in learning through technology. TAM provides the means to explore how users adopt and use any information technology and to identify factors that may influence their technology adoption and utilization. [34]

TAM is a widely used tool for assessing and predicting user acceptance of emerging technology (DAVIS, 1989; DAVIS & VENKATESH, 1996; PICCOLI, AHMAD & IVES, 2001; VENKATESH, MORRIS, DAVIS & DAVIS, 2003). The model attempts to predict and explain system use by positing that perceived usefulness and perceived ease of use are of primary relevance in computer acceptance behavior. In essence, new users will use a new technology if they perceive it to be useful and easy to use. In its original form, the model defined perceived usefulness as "the degree to which a person believes that using a particular system would enhance his or her job performance" (DAVIS, 1989, p.320), and perceived ease of use as "the degree to which a person believes that using a particular system would be free of effort" (ibid.). TAM has evolved and continues to be redefined in response to critical discussions regarding its limited predictive capacity and generalizability in information systems settings (BAGOZZI, 2007; CHUTTUR, 2009). These weaknesses, expressed in quantitative terms, reflect tensions between the perceptions of the individual user and the social consequences of greater technological use. From a qualitative inquiry perspective, however, these weaknesses may be viewed as strengths for enhancing social insights through the reflective stage of the action research cycle. [35]

Even though TAM was developed and widely accepted in the information systems literature within a quantitative context, it provided a useful theoretical analytic lens for this study. Our reflections were enhanced through this analysis process, which promoted the inductive exploration of qualitative findings, the identification of patterns, and the building of relational links to support a carefully crafted web of meaning. This process improved our exploration of the dynamic challenges of incorporating a technological tool into a wide range of qualitative instructional practices, including post-graduate coursework, data analysis master classes and doctoral supervision. [36]

5.2 Qualitative data analysis software (QDAS)

The data analysis master classes in this study were developed as an academic endeavor. NVivo software was used as an instructional tool only; its use does not constitute an endorsement or recommendation of a software program, nor is it our intent to review the various QDAS products currently available on the market. For such assistance, we recommend the CAQDAS Networking Project based at the University of Surrey, UK, which provides an academic forum offering practical support, training and information on the use of a range of software programs (LEWINS & SILVER, 2009). [37]

QSR International is the developer of the NVivo QDAS program. The company offers NVivo technical training in an intensive two-day format with an emphasis on the software's command functions. As newer versions of NVivo are released, the features and functions of the program expand, and the two-day format no longer allows sufficient time to cover this growing range of technical features. Other training models, such as online and self-paced tutorials, offer a range of delivery strategies and timeframes in response to the changing market. Additionally, universities offer research courses in QDAS for post-graduate students (KACZYNSKI & KELLY, 2004). [38]

Our master class training format represents a unique academic blend of practical fieldwork practices and qualitative theoretical considerations. This combination draws upon critical elements of corporate training workshops and micro lessons from traditional post-graduate coursework. Master class content in this study was designed as an instructional supplement to existing training models and was delivered by blending technical software features with instruction in qualitative research methodology. Using action research cycles, we continually reviewed and refreshed the content and format of this instructional delivery throughout the study. Participants were expected to arrive with prior knowledge of research design and training in qualitative theory, and to be currently pursuing dissertation studies. In addition to doctoral candidates, supervisors and faculty engaged in professional research were invited to attend. [39]

Inquiry throughout the study concentrated on how technology can be used as a tool to support and reform doctoral research practices and supervision. There are now many potential research avenues in technology-mediated learning that could further our exploration of the growing application of technology in the learning process. An explicit consideration of relationships among technology capabilities, instructional strategy, and contextual factors involved in learning is essential in framing such an inquiry (ALAVI & LEIDNER, 2001; LADKIN, CASE, WICKS & KINSELLA, 2009). [40]

Findings from the study relate to two significant barriers to acceptance which doctoral students confront: 1. aligning perceptions of ease of use and usefulness is essential in overcoming resistance to technological change (F#1 in Table 2 below); and 2. transparency into the research process through the technology promotes insights into methodological challenges (F#2 in Table 2 below). [41]

The following table identifies evidence from the data that addresses the three research questions and demonstrates a transparent strategy for linking the study's research questions to data sources and findings. The matrix visually links specific research questions to findings and to the data sources used for triangulation. This visual representation provides greater access to the logic and reasoning within the study, supporting its credibility, and encourages analytical openness (KACZYNSKI et al., 2014).

| Data source | RQ1¹ | RQ2² | RQ3³ |
|---|---|---|---|
| Observations and field notes | F#1 | F#1 | F#2 |
| Memos (reflective, methods and analytic) | F#2 | F#1 | F#2 |
| Informal open-ended interviews | F#1 | F#1, F#2 | F#2 |
| Training and teaching documents | F#1 | F#1 | |
| Structured open-ended questionnaire responses | F#1, F#2 | F#1, F#2 | F#2 |

Table 2: Linking research questions (RQ) to findings (F) through data sources [42]

6.1 Barrier 1: How easy to learn and how useful will it be?

The first barrier involves a researcher's intention to use technology based upon perceived ease of use. Minimal effort is desired when considering the adoption of a new software program, yet NVivo is recognized as a complicated program whose use requires considerable effort and involves a steep learning curve. As a doctoral student in the United States explained: "somehow, in 'playing around' with the model feature of NVivo, I apparently managed to lose this afternoon's work ... Must be more careful playing with this NVivo tool. Sharp blades cut too easily." This new user's reaction succinctly demonstrates the first barrier identified in this study: qualitative researchers perceive the adoption of QDAS technology such as NVivo as difficult. The TAM lens, however, also connects ease of use with perceived usefulness (see Figure 3). Given the daunting challenges of conducting qualitative research manually, new users at an early or mid-stage of their dissertation studies were more likely to persevere. Researchers nearing the end of their studies, however, perceived the usefulness of the software to their work to be low. As a result, a small group of doctoral students attending a data analysis master class in Australia offered feedback that "at this late stage of their project they needed to not occupy themselves with learning and using another tool for their data." By contrast, students in the United States with prior exposure to QDAS through doctoral coursework were more likely to remain engaged with further training and use of QDAS in their research. This points to an important difference: in the United States, QDAS training is increasingly part of doctoral coursework, embedded within research methods or qualitative analysis content. [43]

Our memos and observations frequently noted that early users of QDAS commonly approach the technology asking: "What does this software do that I can't do manually? Why should I use this in my research?" During this process the new user must be open to recognizing some value or benefit in adoption and move beyond a position of "convince me." The decision whether or not to use technology is ultimately that of the researcher. Manual data management and analysis may be the best choice for some studies. Such a decision, however, must carefully weigh the range of benefits that might be realized from QDAS adoption. [44]

The following benefits were identified by participants as they confronted barrier one. They are paraphrased here:

data management tool supporting complex data triangulation

building connections and relationships in the data

concurrent analysis of both old and new data

assisting the researcher in developing autonomous inductive insights

greater efficiency in the long run, once over the learning hurdle

resolving discrepancies in later stages of analysis

managing secure backups in multiple locations

ability to visualize and model data in different ways [45]

This list of perceived benefits provides further evidence from the participants supporting this finding. The list may also serve supervisors as a practical resource for helping doctoral candidates re-engage and push through barrier one. [46]

By moving beyond this initial resistance to the adoption of technology, the researcher can benefit from a tool that offers greater transparency and visualization. As one doctoral student in the United States explained to her supervisor, "the more completely ideas can be displayed, shared, the more universal their meanings become." Visualizing connections in their data helps students explore meanings and report them to others. Aligning perceptions of ease of use and usefulness is essential in overcoming resistance to technological adoption. [47]

This barrier is an issue for the supervisor as well as the doctoral student. Supervisors' level of comfort, or discomfort, with QDAS was identified as a contributing factor in doctoral students' adoption decisions. Students in this study who wanted to use the software, but whose supervisors were unfamiliar or uncomfortable with QDAS, reported that they were discouraged from using any software in their analysis. Comments indicated that these supervisors were more comfortable with a traditional manual approach to analysis. This relationship lies beyond the scope of this study, and further research into it is warranted.

Figure 3: Barriers to use (adapted from TAM) (DAVIS, 1989; VENKATESH et al., 2003) [48]

Over a two-year period, an introductory master class session was delivered four times at the same location in Australia. Doctoral students who returned to these data analysis master classes expressed frustration at their inability to retain software skills. As one of these students explained, "after a master class I just regress, I try to do a literature review using NVivo, but that doesn't work." New users persevered by returning for additional master class training and by independently engaging in their research as self-directed learners. Behavioral intention to use requires a commitment to overcoming the steep learning curve of a new technology. As one of the Australian master class doctoral students explained,

"I would like to learn more about using the NVivo software program, mainly so that I am confident in the way I am using the software from day one. There have been several instances in my past where I have jumped into a software program, only to realize later that I needed to modify the way that I was using it. This is not something that I want to do with my dissertation work!" [49]

Both students and supervisors increasingly recognize the need to practice with the software continually and to stay engaged with their research study in order to maintain satisfactory progress. Gaps in the application of research skills hinder analytic development for the doctoral candidate and further deter successful degree completion. An instructional phrase used in the data analysis master class reinforces this point: "if you don't use it you will lose it." A high behavioral intention to use can be achieved only if the user adopts, and continues to use, the QDAS technology. [50]

As new users make significant connections between the software and their research, changes in adoption behavior occur. Breaking the technical training down into small steps enables the new user to build connections relevant to their study. Complex data analysis software programs offer an array of functions and features, but the responsibility remains with the researcher to drive the research process and learn to harness the program. Applying only those features that are relevant at the present stage of the study allows the new user to build confidence in working with the tool. [51]

6.2 Barrier 2: Methodological transparency

Data analysis using the TAM lens identified a second barrier, which qualitative researchers confronted as they continued their efforts to adopt the new technology. We found that new users approached NVivo training expecting the content to be exclusively technical. Because the technology is a software program, it is perceived to operate like a word processor or similar computer program, perhaps with some unique commands. What the new user often fails to appreciate is the amount of methodological decision making required when using the program. Integration with qualitative methodology, and the resulting potential revisions to study design, often come unexpectedly. This shift in perceptions, and the subsequent insights into design challenges, represents a second barrier as the new user struggles to harness technology as a research tool. A doctoral student in the United States asked: "How should I connect the lessons from the NVivo training with my design and my plans for data?" Here the new user demonstrated methodological growth through critical thinking about the research, triggered by the use of a software function. This challenge becomes more apparent once new users realize that the software is manageable and they can see growth in their project. As students increase their commitment to using the technology, they are better able to identify potential foundational weaknesses in the methodology of their study design. [52]

As doctoral students advance their technical training and apply more of the features of qualitative analysis software, they are drawn deeper into methodological considerations. For example, while constructing folders for their data sources, the researcher is engaged in data management. Does the study design support data triangulation? Would additional forms of data enhance the quality of the inquiry? As the researcher engages in coding data, finding patterns, labeling themes and developing category systems, a mix of demanding skills is required (SALDANA, 2009). The student's interpretations of the aims, tasks and context of the learning activities have a critical impact on their approach to learning. As students explore the query and model features in NVivo, they are drawn to consider critically what the study intends to answer and the quality of the evidence. As an Australian doctoral student observed in a supervision meeting when the model came together, "I realized that perhaps the question is irrelevant to the study. Having the model to view things graphically is helping me to fine-tune and revise my questions to make sure they are pertinent to the study." As technological acceptance progresses, the new user initially raises a technical question, which then leads to refinements and further development of the purpose of the study. To further complicate this experience, the student is often concurrently processing potential refinements in the application of a qualitative theoretical framework. As the researcher progresses in the inquiry, the tool potentially becomes a window into every facet of the study. In addition, the researcher draws clearer distinctions between the technical uses of a software research tool and the unique challenges of thinking through the emergent methodological design adjustments that occur in qualitative inquiry. With these distinctions clearly drawn, the new user can avoid blaming the tool for their design headaches. This transparency may be both frustrating and beneficial as it aids the student in the pursuit of high-quality qualitative inquiry. [53]

As shown in Figure 3, actual use is achieved only after the new user reconciles perceived ease of use and perceived usefulness, leading to demonstrated competence and the intention to use. Overcoming both barriers requires a competent foundation in qualitative research. With a solid foundation, the new user can make methodological choices that may entail emergent enhancements to the study design. Beyond modifying the design, these decisions also involve weaving theory and philosophy into the analysis. Doctoral students are concurrently contending with the challenge of learning to apply the features and functions of a new technology while also engaging in a process of accepting and applying both philosophical and procedural criteria in their research. Making qualitative research thinking more visible during the process directly benefits learning for the doctoral candidate. As researchers build an audit trail throughout the inquiry, they are able to make the messy process of decisions and choices more transparent to themselves and to others. This is best facilitated by writing research memos systematically and including the memos as a data source in the study. "Methods memos are used to record emergent design decisions and to describe the reasoning behind such changes" (KACZYNSKI et al., 2014, p.131). In particular, the researcher should write a methods memo each time a methodological decision results in a design change. Through such steps the researcher builds an audit trail of evidence which enhances credibility and promotes transparency into their qualitative methodology. [54]

An integrated instructional delivery model promotes a hands-on approach that develops qualitative skills through software competency while simultaneously promoting high-quality inquiry. Qualitative software promotes rich data analysis by managing the complexity of coding and then extending the analysis through queries that explore paths of inquiry into the data. Viewing the data in different ways allows deeper and multiple understandings to be explored. This assists in constructing improved and more appropriate actions derived from these enhanced methodological understandings (CHARMAZ, 2014; PATTON, 2015; SALDANA, 2009). Looking at all of the data in an entire study, without the influence of a timeline, opens our insights and promotes inductive inquiry; it creates a level playing field for meanings and interpretations. By including the application of qualitative technology in qualitative research coursework, future researchers can broaden their qualitative research skills. In addition, professional development remains an essential step in helping researchers better understand how technological research tools can bridge theory and practice. Since qualitative research software now provides entirely new ways of handling data, it has become increasingly important for researchers to explore innovations in student learning and supervision. [55]

7. Conclusions and Scholarly Importance

This study supports ongoing efforts (DAVIDSON, 2012; RICHARDS, 2015) to advance our understanding of optimal uses of technology in support of teaching and learning processes, and to develop ways of improving these processes. Over the past decade, universities have been investing in research technologies to improve education at an increasing rate. Although research on technology-mediated learning has increased in recent years, it still lags behind developments in practice. There are now many potential research avenues in technology-mediated learning, including the question of how technology enhances learning. Such questions require an explicit consideration of relationships among technology capabilities, instructional strategy, and contextual factors involved in learning (ALAVI & LEIDNER, 2001; LADKIN et al., 2009). Issues of how technology-mediated learning influences program design, and of what structures and processes universities can employ to facilitate innovation, must be addressed at the institutional level. [56]

Potential benefits of this study for post-graduate research students and supervisors include greater internal formative improvements in research course delivery, master classes and doctoral supervision. A better understanding of the barriers doctoral students experience when adopting technology can help supervisors improve the design of professional development training and the delivery of research courses in order to overcome these barriers. Calls for improvements in practice recognize analysis skills, critical thinking skills and technological skills as among the skills essential in doctoral education (EVERS, 2016; MANATHUNGA & WISSLER, 2003; SCHMITZ, BABER, JOHN & BROWN, 2000; SILVER & RIVERS, 2015; SILVER & WOOLF, 2015). Of particular significance in this study is the potential influence of instructional design decisions on mastery of research methodology and, ultimately, on improvements in doctoral instructional delivery and doctoral student engagement. Greater awareness of the potential barriers to technological acceptance will benefit new users confronting the steep learning curve of advanced qualitative analysis software. Recognizing these barriers upfront allows new users to contend more effectively with the challenges of merging technology and methodology. [57]

As discussed above, advances in the use of qualitative data analysis software are encouraging doctoral students to explore methodological issues critically. Refinements in the methodology of individual studies will ultimately lead to broader improvements in social science research within doctoral education. We can further consider the critical role of higher degree research, curriculum reform and doctoral supervision in post-graduate research training, together with their interconnected relationships, in promoting high-quality inquiry. While there remains a need to strengthen student conceptualizations of quality in qualitative research, there is evidence that teachers and supervisors are actively engaged in promoting high-quality doctoral research. Much more, though, is required in understanding the process of applying philosophical criteria to the assessment of qualitative research. In this study, doctoral candidates expressed a theme of need in applying alternative frameworks of quality to qualitative research. What is meant by need? In this study, need encompassed the importance of moving beyond superficial considerations of methods, the significance of researcher transparency, and the ability to teach students how to critically self-assess their work. We contend that this need reflects the complex heterogeneity of qualitative research paradigms. The transparency gained from technological tools such as NVivo, and from the increasing use of cloud-based analysis programs such as Dedoose, is changing how we assess quality in research. As the use of technology gains ground in qualitative research, future qualitative researchers must respond. Further exploration of these changes in practice, including the development of solutions and strategies for practitioners and suggestions for instructional design, may be a highly fruitful direction for future research. [58]

1) Research question 1: How do doctoral students incorporate technology into qualitative data analysis?

2) Research question 2: What helps or hinders this process?

3) Research question 3: How do doctoral students conceptualize quality in data analysis?

AERA Task Force on Reporting of Research Methods in AERA Publications (2006). Standards for reporting on empirical social science research in AERA publications. Educational Researcher, 35(6), 33-40.

Alavi, Maryam & Leidner, Dorothy E. (2001). Research commentary: Technology-mediated learning—a call for greater depth and breadth of research. Information Systems Research, 12(1), 1-10.

Anfara, Jr. Vincent A.; Brown, Kathleen M. & Mangione, Terri L. (2002). Qualitative analysis on stage: Making the research process more public. Educational Researcher, 31(7), 28-38.

Austin, Ann (2011). Preparing doctoral students for promising careers in a changing context: Implications for supervision, institutional planning, and cross-institutional opportunities. In Vijay Kumar & Alison Lee (Eds.), Doctoral education in international context: Connecting local, regional and global perspectives (pp.1-18). Selangor Darul Ehsan: Putra Malaysia Press.

Bagozzi, Richard P. (2007). The legacy of the technology acceptance model and a proposal for a paradigm shift. Journal of the Association for Information Systems, 8(4), 244-254.

Berg, Bruce L. (2004). Qualitative research methods for the social sciences. Boston, MA: Pearson Education.

Biggs, John (1999). Teaching for quality learning at university. Maidenhead: Society for Research into Higher Education & Open University Press.

Biggs, John (2003). Teaching for quality learning at university (2nd ed.). Maidenhead: Society for Research into Higher Education & Open University Press.

Boud, David (1988). Developing student autonomy in learning. London: Kogan Page Limited.

Boud, David; Keogh, Rosemary & Walker, David (1985). Reflection, turning experience into learning. London: Kogan Page Limited.

Bowen, William G. (2013). Walk deliberately, don't run, toward online education. The Chronicle of Higher Education, http://chronicle.com/article/Walk-Deliberately-Dont-Run/138109/ [Accessed: December 10, 2015].

Bredo, Eric (2009). Getting over the methodology wars. Educational Researcher, 38(6), 441-448.

Bromley, Helen; Dockery, Grindi; Fenton, Carrie; Nhlema, Bertha; Smith, Helen; Tolhurst, Rachel & Theobald, Sally (2002). Criteria for evaluating qualitative studies: Qualitative research and health working group. Liverpool School of Tropical Medicine, UK, http://www.liv.ac.uk/lstm/download/guidelines.pdf [Accessed: August 25, 2003].

Brookfield, Stephen D. (1990). The skillful teacher: On technique, trust and responsiveness in the classroom. San Francisco, CA: Jossey-Bass.

Candy, Philip C. (1991). Self-direction for lifelong learning. San Francisco, CA: Jossey-Bass.

Charmaz, Kathy (2014). Constructing grounded theory (2nd ed.). London: Sage.

Chuttur, Mohammad Y. (2009). Overview of the technology acceptance model: Origins, developments and future directions. Sprouts Working Papers on Information Systems, 9(37), http://aisel.aisnet.org/cgi/viewcontent.cgi?article=1289&context=sprouts_all [Accessed: February 16, 2016].

Cisneros Puebla, Cesar A.; Davidson, Judith & Faux, Robert (Eds.) (2012). Qualitative computing: Diverse worlds and research practices. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 13(2), http://www.qualitative-research.net/index.php/fqs/issue/view/40 [Accessed: January 30, 2016].

Clark, Burton R. (1997). The modern integration of research activities with teaching and learning. The Journal of Higher Education, 68(3), 241-255.

Cobb, Ann K. & Hagemaster, Julia N. (1987). Ten criteria for evaluating qualitative research proposals. Journal of Nursing Education, 26(4), 138-142.

Creswell, John W. (2003). Research design: Qualitative, quantitative, and mixed methods approaches (2nd ed.). Thousand Oaks, CA: Sage.

Davidson, Judith (2012). The journal project: Qualitative computing and the technology/aesthetics divide in qualitative research. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 13(2), Art. 15, http://nbn-resolving.de/urn:nbn:de:0114-fqs1202152 [Accessed: March 10, 2016].

Davidson, Judith & Di Gregorio, Silvana (2012). Qualitative research and technology: In the midst of a revolution. In Norman K. Denzin & Yvonna S. Lincoln (Eds.), Collecting and interpreting qualitative material (4th ed., pp.481-516). Thousand Oaks, CA: Sage.

Davis, Fred D. (1986). A technology acceptance model for empirically testing new end-user information systems: Theory and results. Doctoral thesis, Sloan School of Management, MIT, USA, http://hdl.handle.net/1721.1/15192 [Accessed: February 12, 2014].

Davis, Fred D. (1989). Perceived usefulness, perceived ease of use and user acceptance of information technology. MIS Quarterly, 13(3), 319-339.

Davis, Fred D. & Venkatesh, Viswanath (1996). A critical assessment of potential measurement biases in the technology acceptance model: Three experiments. International Journal of Human-Computer Studies, 45(1), 19-45.

Di Gregorio, Silvana & Davidson, Judith (2008). Qualitative research design for software users. Berkshire: Open University Press.

Evers, Jeanine C. (2016). Elaborating on thick analysis: About thoroughness and creativity in qualitative analysis. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 17(1), Art. 6, http://nbn-resolving.de/urn:nbn:de:0114-fqs160163 [Accessed: February 8, 2016].

Evers, Jeanine C.; Mruck, Katja; Silver, Christina & Peeters, Bart (Eds.) (2011). The KWALON Experiment: Discussions on qualitative data analysis software by developers and users. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(1), http://www.qualitative-research.net/index.php/fqs/issue/view/36 [Accessed: February 8, 2016].

Freeman, Melissa; deMarrais, Kathleen; Preissle, Judith; Roulston, Kathryn & St. Pierre, Elizabeth A. (2007). Standards of evidence in qualitative research: An incitement to discourse. Educational Researcher, 36(1), 25-32.

Gage, Nathaniel L. (1989). The paradigm wars and their aftermath: A "historical" sketch of research on teaching since 1989. Educational Researcher, 18(7), 4-10.

Gibbs, Graham R.; Friese, Susanne & Mangabeira, Wilma C. (Eds.) (2002). Using technology in the qualitative research process. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 3(2), http://www.qualitative-research.net/index.php/fqs/issue/view/22 [Accessed: June 20, 2016].

Kaczynski, Dan (2006). Assessing the quality of the qualitative dissertation: Applying qualitative data analysis software methods. In Margaret Kiley & Gerry Mullins (Eds.), Quality in postgraduate research: Knowledge creation in testing times (pp.103-112). CEDAM, The Australian National University, Canberra, http://qpr.edu.au/2006/kaczynski2006.pdf [Accessed: August 10, 2014].

Kaczynski, Dan & Kelly, Melissa (2004). Curriculum development for teaching qualitative data analysis online. Proceedings of the International Conference on Qualitative Research in IT & IT in Qualitative Research, Brisbane, Australia, November 24-26, http://eric.ed.gov/?id=ED492010 [Accessed: October 16, 2014].

Kaczynski, Dan; Salmona, Michelle & Smith, Tom (2014). Qualitative research in finance. Australian Journal of Management, 39(1), 127-135.

Kiley, Margaret & Pearson, Margot (2008). Research education in Australia: Expectations and tensions. Symposium paper presentation at the Annual Conference of the American Educational Research Association, New York, USA, March 24-28.

Koshy, Valsa (2005). Action research for improving practice: A practical guide. Thousand Oaks, CA: Sage.

Ladkin, Donna; Case, Peter; Wicks, Patricia G. & Kinsella, Keith (2009). Developing leaders in cyber-space: The paradoxical possibilities of on-line learning. Leadership, 5(2), 193-212.

Laurillard, Diana (1997). Chapter 11. Learning formal representations through multimedia. In Ference Marton, Dai Hounsell & Noel Entwistle (Eds.), The experience of learning: Implications for teaching and studying in higher education (pp.172-183). Edinburgh: Scottish Academic Press.

Laurillard, Diana (2002). Rethinking university teaching. New York: Routledge.

Lewins, Ann & Silver, Christina (2009). Choosing a CAQDAS Package (6th ed.). CAQDAS QUIC Working Papers, http://www.surrey.ac.uk/sociology/research/researchcentres/caqdas/resources/workingpapers/ [Accessed: March 16, 2015].

Lincoln, Yvonna S. (1995). Emerging criteria for quality in qualitative and interpretive research. Qualitative Inquiry, 1(3), 275-289.

Lincoln, Yvonna S. & Guba, Egon G. (1985). Naturalistic inquiry. Beverly Hills, CA: Sage.

Lovitts, Barbara E. (2008). The transition to independent research: Who makes it, who doesn't, and why. The Journal of Higher Education, 79(3), 296-325.

Manathunga, Catherine & Wissler, Rod (2003). Generic skill development for research higher degree students: An Australian example. International Journal of Instructional Media, 30(3), 233-246.

Martelo, Maira L. (2011). Use of bibliographic systems and concept maps: Innovative tools to complete a literature review. Research in the Schools, 18(1), 62-70.

Moss, Pamela A.; Phillips, Denis C.; Erickson, Frederick D.; Floden, Robert E.; Lather, Patti A. & Schneider, Barbara L. (2009). Learning from our differences: A dialogue across perspectives on quality in education research. Educational Researcher, 38(7), 501-517.

Neshyba, Steven (2013). It's a flipping revolution. The Chronicle of Higher Education, http://chronicle.com/article/Walk-Deliberately-Dont-Run/138109/ [Accessed: June 10, 2014].

Ó Dochartaigh, Niall (2012). Internet research skills (3rd ed.). Thousand Oaks, CA: Sage.

Patton, Michael Q. (2015). Qualitative research and evaluation methods (4th ed.). Thousand Oaks, CA: Sage.

Piccoli, Gabriele; Ahmad, Rami & Ives, Blake (2001). Web-based virtual learning environments: A research framework and a preliminary assessment of effectiveness in basic IT skills training. MIS Quarterly, 25(4), 401-426.

Price, Linda & Kirkwood, Adrian (2014). Using technology for teaching and learning in higher education: A critical review of the role of evidence in informing practice. Higher Education Research & Development, 33(3), 549-564.

Ramsden, Paul (2003). Learning to teach in higher education (2nd ed.). London: Routledge.

Richards, Lyn (2015). Handling qualitative data: A practical guide (3rd ed.). London: Sage.

Richards, Lyn & Richards, Tom (1991). Computing in qualitative analysis: A healthy development? Qualitative Health Research, 1(2), 234-262.

Richards, Lyn & Richards, Tom (1994a). From filing cabinet to computer. In Alan Bryman & Robert Burgess (Eds.), Analysing qualitative data (pp.146-172). London: Routledge.

Richards, Tom & Richards, Lyn (1994b). Using computers in qualitative research. In Norman K. Denzin & Yvonna S. Lincoln (Eds.), Handbook of qualitative research (pp.445-462). Thousand Oaks, CA: Sage.

Saldaña, Johnny (2009). The coding manual for qualitative researchers. Thousand Oaks, CA: Sage.

Salmona, Michelle (2009). Engaging casually employed teachers in collaborative curriculum and professional development: Change through an action research enquiry in a higher education "pathways" institution. Doctoral thesis, University of Technology, Sydney, Australia, http://hdl.handle.net/10453/20298 [Accessed: June 20, 2015].

Salmona, Michelle; Kaczynski, Dan & Smith, Tom (2015). Qualitative theory in finance: Theory into practice. Australian Journal of Management (Qualitative Finance Special Issue), 40(3), 403-413.

Schmitz, Charles D.; Baber, Susan J.; John, Delores M. & Brown, Kathleen S. (2000). Creating the 21st century school of education: Collaboration, community, and partnership in St. Louis. Peabody Journal of Education, 75(3), 64-84.

Silver, Christina & Rivers, Christine (2015). The CAQDAS postgraduate learning model: An interplay between methodological awareness, analytic adeptness and technological proficiency. International Journal of Social Research Methodology, Art. 13/126, 1-17.

Silver, Christina & Woolf, Nicholas H. (2015). From guided-instruction to facilitation of learning: The development of five-level QDA as a CAQDAS pedagogy that explicates the practices of expert users. International Journal of Social Research Methodology, 18(5), 527-543.

Silverman, David (2004). Qualitative research: Theory, method and practice (2nd ed.). Thousand Oaks, CA: Sage.

Stringer, Ernest T. (2007). Action research in education (3rd ed.). Upper Saddle River, NJ: Pearson Education.

Venkatesh, Viswanath; Morris, Michael G.; Davis, Gordon B. & Davis, Fred D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425-478.

Walker, George E.; Golde, Chris M.; Jones, Laura; Bueschel, Andrea C. & Hutchings, Pat (2008). The formation of scholars: Rethinking doctoral education for the twenty-first century. San Francisco, CA: Jossey-Bass.

Waycott, Jenny; Bennett, Sue; Kennedy, Gregor; Dalgarno, Barney & Gray, Kathleen (2010). Digital divides? Student and staff perceptions of information and communication technologies. Computers & Education, 54(4), 1202-1211.

Michelle SALMONA is President of the Institute for Mixed Methods Research (IMMR) and an adjunct associate professor at the University of Canberra. A project management professional by background and a senior fellow of the Higher Education Academy, UK, she specializes in research design and methods. Michelle is a co-founder of IMMR, where she builds global collaborations for grant development and provides customized training and consultancy services for individuals and groups engaged in mixed-methods and qualitative analysis. She works as an international consultant in program evaluation, research design, and mixed-methods and qualitative data analysis using software. Her research focuses on better understanding how to support doctoral success and strengthen the research process, and on building data-driven decision-making capacity in the corporate world. Michelle's particular interests relate to the relationship between technology and doctoral success. Her recent research explores the changing practices of qualitative research during the dissertation phase of doctoral studies and investigates how learning can be brought into the use of technology during the research process. Michelle is currently working on several projects with researchers from education, information systems, business communication, leadership, and finance.

Contact:

Dr. Michelle Salmona

President

Institute for Mixed Methods Research

1100 Pacific Coast Highway, Suite D

Hermosa Beach, CA 90254

USA

E-mail: msalmona@immrglobal.org

Dan KACZYNSKI is a professor of educational leadership at Central Michigan University and an adjunct professor at the University of Canberra, Australia. Dan is a senior research fellow at the Institute for Mixed Methods Research. His publications and presentations promote technological innovations in qualitative data analysis and professional development for doctoral supervision, and he is actively engaged in applied research and program evaluation.

Contact:

Professor Dan Kaczynski

Department of Educational Leadership

Central Michigan University

Mount Pleasant, MI 48859

USA

E-mail: dan.kaczynski@cmich.edu

Salmona, Michelle & Kaczynski, Dan (2016). Don't Blame the Software: Using Qualitative Data Analysis Software Successfully in Doctoral Research [58 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 17(3), Art. 11, http://nbn-resolving.de/urn:nbn:de:0114-fqs1603117.