Volume 20, No. 3, Art. 6 – September 2019

The Analysis of Qualitative Data With Peer Researchers: An Example From a Participatory Health Research Project

Ina Schaefer, Gesine Bär & the Contributors of the Research Project ElfE

Abstract: Even as experience in participatory research is increasing in German-speaking countries, participatory data analysis is still relatively new. In the context of the project ElfE—Parents Asking Parents, part of the PartKommPlus—Research Consortium for Healthy Communities, we describe the application of a participatory data analysis in which we use grounded theory methodology (GTM). GTM is compatible with participatory approaches to research: Even peer researchers without formal training in the social sciences can actively participate, due to the focus on concrete data and the process-oriented coding procedure. Other components of GTM present a challenge for participatory research.

The focus of ElfE was addressing issues of health equity for families with preschool children. In two communities, parents were recruited as peer researchers. The entire research process was developed and implemented in a participatory manner in research workshops. The research resulted in developing core messages for community members, practitioners, and parents. In this article, we describe a participatory analysis of qualitative data. We demonstrate that GTM can support the further development of participatory research. The use of GTM in participatory analysis requires limiting the data collection, proactively monitoring the analysis process, and defining the tasks conducted by academic researchers and peer researchers.

Key words: qualitative data analysis; grounded theory methodology; participatory health research; peer research

Table of Contents

1. Introduction

2. Data Analysis in Participatory Research Projects

3. Developing a Method for Participatory Data Analysis in the Project ElfE

3.1 Data analysis in non-participatory qualitative social research

3.2 Data analysis in participatory research

3.2.1 Data analysis involving all members of the research team in all steps

3.2.2 Data analysis using a division of labor

3.3 Examples of GTM in participatory research

4. Participatory Data Analysis in the ElfE Project

4.1 Data collection and preparation in ElfE

4.2 Participatory data analysis in ElfE

4.2.1 Concept for the data analysis

4.2.2 Implementation process and the division of tasks between the peer researchers and the academic researchers

4.2.3 Finalizing and presenting the results

4.2.4 Critical appraisal of the data analysis process

5. Discussion

5.1 Limitations

5.2 Recommendations for participatory data analysis

1. Introduction1)

In participatory research, participation is less common during the data analysis phase of projects than during other phases (CASHMAN et al., 2008; FLICKER & NIXON, 2014; JACKSON, 2008). Reviews of participatory research methods also include little detail on participatory data analysis. According to BERGOLD and THOMAS (2010, 2012), the challenge for participation is greatest during this phase due to differences in time resources and level of knowledge on the part of the participants. We agree with the conclusion of CASHMAN et al. (2008) that, with few exceptions (e.g., FLICKER & NIXON, 2014; VAN LIESHOUT & CARDIFF, 2011; VON UNGER, 2012), descriptions of procedures for mutual data analysis are rare (see also ACCESS ALLIANCE MULTICULTURAL HEALTH AND COMMUNITY SERVICES, 2011). [1]

Participatory health research developed out of action research (VON UNGER, 2014). Action researchers focus on working with communities—groups of people who are, for example, connected through their living environments or their social identity. Internationally, participatory health research is known under many different labels, including community based participatory research (CBPR). [2]

ElfE: Parents Asking Parents is a project of PartKommPlus—Research Consortium for Healthy Communities, which was funded by the Ministry of Education and Research (FKZ: 01EL1423D) in two phases (February 2015 to January 2018 and February 2018 to January 2021). ElfE was designed so that the entire research process, from the definition of the research question to the analysis of the data, is organized and implemented in partnership with those whose lives are the subject of the research (PARTNET, 2017). ElfE has the goal of improving health equity for parents and their preschool children who face multiple challenges (e.g., single parents and parents with low income). [3]

The starting point for ElfE was the results of the mandatory school entry health examinations, which show increasing health disparities in children based on social status, as seen in differences with regard to language skills, motor skills, and social development (BEZIRKSAMT MARZAHN-HELLERSDORF VON BERLIN, n.d.; BEZIRKSAMT MITTE VON BERLIN, 2013). The examinations also clearly show that attending daycare, particularly over a period of several years, can have a positive effect on childhood development (GEENE, RICHTER-KORNWEITZ, STREHMEL & BORKOWSKI, 2016; ROSBACH, KLUCZNIOK & KUGER, 2008). Although daycare cannot completely compensate for social determinants of poor health, some families can benefit greatly from this opportunity (ANDRESEN & GALIC, 2015). We wanted to examine the potential benefits of daycare more closely together with parents who could most profit from this service. In both funding phases, parents with preschool children served as peer researchers, sharing in all decisions regarding the project. This put into practice the principle of shared decision making common in participatory health research. [4]

We used qualitative methods2) (PRZYBORSKI & WOHLRAB-SAHR, 2014) to guide the process. Research questions were formulated, interview guidelines were developed, interviews were conducted and transcribed, and the transcripts were analyzed using grounded theory methodology (GTM) coding as described by STRAUSS and CORBIN (1990). The coding was supplemented by the DEPICT procedure of FLICKER and NIXON (2014), which was developed specifically for analyzing qualitative data in the context of participatory research. [5]

The focus of our article is on the qualitative data analysis process. Based on a description of the challenges in participatory data analysis (Section 2), we discuss the suitability of established analysis methods of qualitative social research as well as the participatory analysis procedures documented in the literature (Section 3). We then describe the process in the project ElfE (Section 4). Finally, we summarize the challenges in light of our experience and discuss the need for further research (Section 5). [6]

2. Data Analysis in Participatory Research Projects

The literature on participatory research emphasizes the advantages of integrating different forms of knowledge and different perspectives in the data analysis process (BERGOLD & THOMAS, 2010; VON UNGER, 2012, 2014; BERGOLD & THOMAS, 2012 provide an overview). The core principle of the participatory approach is research with and not on people (INTERNATIONAL COLLABORATION FOR PARTICIPATORY HEALTH RESEARCH [ICPHR], 2013). The integration of the perspectives of peer researchers is therefore a mark of quality in participatory data analysis (CASHMAN et al., 2008). Peer researchers contribute actively to data interpretation and to the acquisition of new knowledge (BERGOLD & THOMAS, 2012). [7]

Thus, participation in the research process must be implemented in such a manner that all perspectives can be taken into account (VON UNGER, 2014), so that experience and knowledge from everyday life, as well as that from academic research, are treated equally (BEHRISCH & WRIGHT, 2018). This results in the co-creation of new knowledge, which is also referred to as transformative knowledge (REASON & TORBERT, 2001). BEHRISCH and WRIGHT (2018) differentiated between the practical knowledge generated by taking action in concrete situations and everyday knowledge, which is gained in an unsystematic manner. MARENT, FORSTER and NOWAK (2015) made a distinction between knowledge gained through training and professional practice (professional knowledge) and knowledge gained through everyday life (lay knowledge) (for further differentiations, see REASON & TORBERT, 2001). From a methodological point of view, however, it remains undefined what specific types of knowledge are produced in participatory research as compared to other forms of research (BEHRISCH & WRIGHT, 2018). This research gap regarding the process of mutual data analysis is the focus of our article. [8]

MOSER (1995) described how the interlinking of various perspectives can be achieved for action research. He considered an iterative process to be a central characteristic, developing the action model for action research. In this model, he regarded knowledge acquisition to be a spiral-shaped, repetitive (cyclical) process of planning, acting, information collection, and subsequent discourse, with the goal of reflection on practice. Thus, the experiences of the persons participating in the research are processed to create a more generalizable knowledge. MOSER primarily tailored the action research approach to practitioner-led research. LEDWITH (2017) also presented a cyclical model over different phases of emancipatory action research (EAR), in which knowledge intended to create change is gradually derived from everyday experiences. Academic, professional, and everyday knowledge are of equal value in these models. Both MOSER and LEDWITH attempted to juxtapose a dialogical knowledge model against the primacy of specialized scientific or professional knowledge (BEHRISCH & WRIGHT, 2018). This also seems to be appropriate for participatory research. From a research practice point of view, this raises numerous challenges, which we will address in the following. [9]

The cyclical process requires a dynamic research design allowing for the continuous adaptation of the methods used. Thus, standardizing the research process, including the participatory data analysis, is not possible (BERGOLD & THOMAS, 2012). The linking of various forms of knowledge also calls into question the traditional separation between prior knowledge/experiences of the researchers and the data at hand (MEINEFELD, 2010 [2000]). The data material should be enriched by the life experience and expertise of the peer researchers (ABMA et al., 2019). This requires a more extensive methodological discussion regarding questions of validity in participatory research (ICPHR, 2013). The quality criteria that are in effect for qualitative research should also be considered for participatory research. According to BERGOLD and THOMAS (2012), particular attention should be paid to the appropriateness of the method for the subject of the research study and for the context, considering process, coherence, and how well the methods can be integrated into the overall research project (FLICK, 2012 [1995]). [10]

There are additional challenges: In participatory research, the peer researchers usually lack knowledge about scientific methods (BERGOLD & THOMAS, 2010). This specific knowledge is provided by the academic researchers. As a result, their role is in no way limited to the facilitation of the process; co-determination of the course of the research presupposes that the peer researchers also have knowledge of possible methods, which they learn from the academic partners. This parallel building of knowledge within a participatory process requires effective and ongoing communication and adequate resources, which often do not exist. Finally, the peer researchers themselves often describe the data analysis as the most uninteresting part of the participatory research process: "Some community partners have argued that their involvement—particularly in data analysis—is not always the best use of their time" (CASHMAN et al., 2008, p.1407). For this reason, there should always be communication during the course of data analysis regarding the purpose of the process, and motivation to take part should be strengthened. [11]

3. Developing a Method for Participatory Data Analysis in the Project ElfE

Summarizing the above, a process for the participatory analysis of qualitative data needs to address three challenges:

making a cyclical, iterative research process possible, the goal of which is the generation of transformative knowledge and the inclusion of different forms of knowledge. For this, interpretative processes are especially important (BERGOLD & THOMAS, 2010; VON UNGER, 2014);

ensuring that the approach is suitable for the data material and also that it is process-oriented (BERGOLD & THOMAS, 2012; FLICK, 2012 [1995]);

ensuring that the approach is feasible. According to VON UNGER (2014), this includes aspects such as transparency and practicability for all participants. BERGOLD and THOMAS (2010) made the case for "the application of pragmatic participatory processes using pared down forms of data analysis" (p.341). [12]

We followed BERGOLD and THOMAS (2012) who emphasized the use of established methods of data analysis from non-participatory forms of research, in this case qualitative social research. Therefore, in addition to relevant methods in participatory research, we include methods from data analysis in non-participatory qualitative social research. [13]

3.1 Data analysis in non-participatory qualitative social research

There is a multitude of methods for the analysis of interview data in non-participatory qualitative social research (an overview is provided by MRUCK with the cooperation of MEY, 2000). Qualitative content analysis and grounded theory methodology are often used in German-speaking countries. Both are coding processes which can be applied to various forms of data (FLICK, 2012 [1995]). Qualitative content analysis encompasses data reduction using several steps of categorization. There are different basic forms of coding and variation within these forms (an overview is provided by SCHREIER, 2014). Commonly, the process is deductive in nature, using existing theory as a foundation. The interpretation is separate from the data analysis and therefore plays a subordinate role (FLICK, 2012 [1995]). The reproducibility of an analysis and the intercoder reliability are among the specific quality criteria for content analysis (MAYRING, 2015 [1983]). [14]

Qualitative content analysis is recommended by some participatory researchers from a practical perspective (VAN DER DONK, VAN LANEN & WRIGHT, 2014). VAN DER DONK et al. referred to primary textbooks by FLICK (2012 [1995]), LAMNEK (2010 [1988]) and MAYRING (2010 [1983]), including both inductive and deductive approaches. The resulting interpretations and conclusions were a direct result of the data analysis and thus excluded the personal experience of those conducting the analysis. [15]

The latter stands in contrast to the principles of participatory research, particularly where peer researchers are involved. GTM offers a different basis for data analysis, given that interpretation lies at the core of the research process (FLICK, 2012 [1995]) and personal experience is explicitly valued (STRAUSS, 1987). GTM was introduced as a method by GLASER and STRAUSS (1967) in order to systematically generate theories from qualitative data. Over the last several decades, there have been myriad further developments (an overview is provided by BRYANT & CHARMAZ, 2007 as well as MEY & MRUCK, 2011 [2007]). We made use of the pragmatic approach described by STRAUSS and CORBIN (1990) that takes into account the context and the subject under study and allows for consolidating the process (LEGEWIE & SCHERVIER-LEGEWIE, 2004).

STRAUSS and CORBIN (1990) defined three core elements:

Theoretical sampling involves several iterations of coding and data collection until theoretical saturation is reached. Theoretical saturation means that additional data is collected and coded until no new insights are produced.

Constant comparison and theoretical sensitivity are supported by the writing of memos (writing down all thoughts regarding the data). The research process is conducted in iterative loops composed of data collection, data analysis, and the formation of theory (HILDENBRAND, 2010 [2000]). Everything that can contribute to the formation of the theory is considered to be data, meaning that analysis is not limited to written records, such as interview transcripts.

Constant reflection is required during each step of the data analysis (STRAUSS, 1987). [16]

According to STRAUSS and CORBIN, the coding process needs to be flexible, consisting of three steps that follow each other chronologically, but are simultaneously intertwined with one another and merge (HILDENBRAND, 2010 [2000]):

In open coding, the data is first broken down by defining terms based on the units of analysis (e.g., a sentence or paragraph) (STRAUSS & CORBIN, 1990).

In axial coding, these terms are grouped into categories. The categories are increasingly consolidated into larger and larger groups for the purpose of building a theory (HILDENBRAND, 2010 [2000]). The list of categories remains open until the conclusion of the analysis, meaning that they can be changed again and again (MUCKEL, 2011 [2007]). The coding paradigm supports the development of connections between categories by grouping concepts according to causes, context and intervening conditions, consequences, and action strategies (BÖHM, 2010 [2000]).

The last step is selective coding during which the central or core category is selected that connects all the various categories to each other and lies at the center of the formulated theory (BERG & MILMEISTER, 2008). [17]

GTM has several characteristics in common with participatory research and thus can be easily integrated into the participatory research process. Data collection and analysis are responsive, flexible, and process-oriented. Existing theories are applied in order to discover something new. Various data sources can be analyzed in one project. And the expertise of all participants is an important part of the process so as to increase the diversity of perspectives. GTM also seeks to create change in the research field (HILDENBRAND, 2010 [2000]). This can be seen especially in the form of GTM known as grounded action (OLSON, 2007), where the focus is on the development of action strategies that are systematically derived from the grounded theory and are thus based on data. Finally, GTM is also characterized by the "messiness" described in participatory research (WRIGHT, 2013, p.129; see also COOK, 2009). The dialogue of the participants and thus the exchange of different opinions and forms of knowledge leads to conflicts, as prior thinking regarding the research subject is called into question. This conflict requires confronting different perceptions and interpretations while opening the door to new ideas. "The chaos of this messiness can be seen as a communicative space, in which the participants dare to formulate new, individual, and collective realizations and thus replace old knowledge" (WRIGHT, 2013, p.130)3) (see also COOK, 2009). [18]

In summary, GTM is more appropriate for participatory research as compared to rule-based approaches of content analysis because both GTM and participatory research are:

Characterized by cyclical, iterative and dialectical processes (and thus create spaces for "messiness");

Flexible in their implementation, making it possible to adapt to the context;

Based on an appreciation of the participants and their specific experiences;

Oriented toward action and change. For GTM, this is particularly emphasized in the variant known as grounded action (OLSON, 2007; SIMMONS & GREGORY, 2003). [19]

With GTM, the focus is on the reflection and interpretation of the material by means of a coding process. With the aid of GTM, the expertise of the peer researchers can be utilized extensively. Theoretical knowledge and existing concepts can thus be integrated into the research process, the formation of theories can be supported, and transparency regarding the process can be created by using GTM in participatory research (DICK, 2007). For all of its stated potential, the application of GTM in participatory research is complex and therefore requires a great deal of resources. The terminology alone, such as the coding steps, requires training to ensure that it can be understood and implemented by peer researchers (VON UNGER, 2014). It also requires a learning process on the part of those facilitating the research project so as to include the experiential knowledge of the peers. [20]

3.2 Data analysis in participatory research

In the international literature, one finds reports of participatory data analysis applied to written data material (CASHMAN et al., 2008; JACKSON, 2008). The approaches differ with regard to the distribution of labor, the depth of the analysis, and whether all or only some of the material was analyzed. Often the initial analysis is prepared by the academic partners, which is then discussed with the peer researchers, for example by means of feedback loops, concept mapping, or other visualizations of the relationships found in the material (JACKSON, 2008). In such cases, the participation of the peer researchers is generally limited to the interpretation of the results. In the following, we first present an example in which the peer researchers were involved in the entire data analysis process. This is followed by an example in which the analysis was conducted using a division of labor. [21]

3.2.1 Data analysis involving all members of the research team in all steps

JACKSON (2008) presented a four-stage process within the scope of a facilitated workshop over two days in three Canadian research projects. The data were coded in small groups. For the interpretation, the main categories were compiled and interpreted by all members of the research team, including the peer researchers. The academic researchers then reported the results. VAN LIESHOUT and CARDIFF (2011) described data analysis using art-based methods. They developed the seven-phase CCHA-process (critical creative hermeneutic analysis). The peer researchers created individual understandings of the text through reading the data, which had already been subjected to an initial analysis. Alternatively, they were asked to structure the data themselves intuitively and to express its meaning with the aid of collages, poems, images, or sculptures. This was done individually or in a group. The interpretations were then discussed by everyone taking part. The result was a mutually derived thematic framework. Passages were identified in the data which illustrate and support the framework. [22]

3.2.2 Data analysis using a division of labor

CASHMAN et al. (2008) explicated four examples, of which one refers only to qualitative data and the others to qualitative and quantitative data in the same study. In analyzing the qualitative data, there was a strong division of labor. For example, in a research project in which the health care of indigenous people was studied, the material was first prepared by the academic researchers to be discussed with those involved in the project (e.g., health care staff, school staff, police, and other stakeholders). This was followed by a discussion in an expanded circle of stakeholders, leading to recommendations for taking action. In a project focused on health promotion for Latino men in North Carolina, the transcripts from focus group discussions were coded together with Latino men and subsequently analyzed by all of the peer researchers over the course of four meetings. Whereas a more compact process was utilized in the first example, the second example required more time due to the mutual coding of the material. [23]

FLICKER and NIXON (2014) developed the DEPICT model, which can be used by academic researchers and peer researchers who divide the various tasks among themselves, or by the peer researchers alone. The model has been used in projects focused on the health care sector and HIV prevention. It consists of six sequential steps: Dynamic reading, engaged codebook development, participatory coding, inclusive reviewing and summarizing of categories, collaborative analyzing, and translating. By means of DEPICT, all of the interview material is analyzed in a collaborative process with the aid of a coding guideline in a manner similar to qualitative content analysis. [24]

The examples above illustrate a spectrum of possibilities for participatory data analysis. The example presented by CASHMAN et al. (2008) with Latino men and DEPICT are clearly based on methods found in non-participatory qualitative research. In the other examples, additional methods for engaging peer researchers and stakeholders in the interpretative process are applied. For VAN LIESHOUT and CARDIFF (2011) the goal of data analysis in participatory research projects is the explication of specific forms of knowledge. Creative methods aid the expression of knowledge that cannot (yet) be put into words (REASON & TORBERT, 2001). The academic researchers act as facilitators and do not prepare the data material in advance. To what degree the prior knowledge of the academics has affected the process is often unclear. However, the role of the academics' knowledge should be made explicit, given that participatory research requires the combination of various forms of knowledge, not just that of the peer researchers (COOK, 2009; MARENT, FORSTER & NOWAK, 2012; REASON & TORBERT, 2001). [25]

An important task of the academic researchers is facilitating the research process (ABMA et al., 2019). They should stimulate dialogue and interaction by conveying to the participants that their knowledge is important. This is the only way that new knowledge can be created that is based on the different forms of knowledge found among the participants. If, as recommended by MAY (2008), one follows the cyclical action research model of MOSER (1995), the advantages of a division of labor among academic and non-academic researchers become apparent. [26]

3.3 Examples of GTM in participatory research

As described above, participation is found less in the data analysis phase of participatory research projects than in other phases of the work. This is often due to time constraints and the knowledge necessary for analyzing the data. The application of GTM is resource-intensive. To save on resources, not all participants are required to perform all steps of preparing and analyzing the data, but rather the labor is shared (ABMA et al., 2019; VON UNGER, 2014). The division of labor takes place through dialogue and by designating individuals and small groups with specific tasks (COOK, 2009). Drawing on COOK, it is crucial to base the collective discourse on the complete material. Therefore, the possibilities for dividing labor are limited. A decisive factor is the willingness of the peer researchers to be involved in the data analysis, as illustrated by OSPINA, DODGE, FOLDY and HOFMANN-PINELLA (2008) in an example from an American research program: "from full participation and engagement in some cases, to willing collaboration in others, to partial and at times reluctant cooperation in yet others, to non-participation in a few" (p.424). The practical implementation of participation in data analysis needs to adapt to the motivation of the peers, which may change over the course of the process. In the following examples we show various forms of participatory data analysis using GTM in a division of labor, describing the potential as well as the challenges. [27]

Examples of GTM in participatory research can be found in ACKERMANN and ROBIN (2017), DICK (2007), GREENALL (2006), as well as in TERAM, SCHACHTER and STALKER (2005). Whereas GREENALL (2006) did not go into detail regarding the coding process, the examples of ACKERMANN and ROBIN (2017) as well as TERAM et al. (2005) demonstrated a strong division of labor in their approach. TERAM et al. reported an initial analysis by the academic researchers and a group discussion of the results. In the process presented by ACKERMANN and ROBIN (2017), interviews conducted and transcribed within the scope of residential youth welfare services were read completely and coded by the participating academic researchers in the first step. This coding was then discussed together with the youths. In addition, sequences of the interviews were coded with all participating parties (for this, the text was projected on a screen). The youths were able to add key points and new concepts to the analysis, including identifying the core category. However, important decisions, such as how to further incorporate the input of the youths in the analysis, remained in the hands of the academic researchers. Another approach was developed by DICK (2007). He suggested a structured discussion based on decision questions in place of coding (for both interviews as well as other data sources). These questions were designed to identify specific situations, desired effects, and actions, while revealing the relationships between the various responses found in the data. [28]

All examples in the literature show how academic researchers can strengthen debate and mutual reflection among peer researchers while avoiding overtaxing them with methodological issues. Confining the input of the peers to certain parts of the process restricts, however, possibilities for interconnecting the various forms of knowledge found among the participants, because their influence over the process is limited. We chose the process of GTM for our work, taking into account the interests and the prior knowledge of the peer researchers as well as the available time and financial resources, while making room for the expected "messiness." We therefore involved the peers as directly as possible in all stages of the data analysis. [29]

In the following description, we present the ElfE project. We begin by explaining from a practical perspective our specific adaptation of GTM. Second, we state how and why individual elements of the DEPICT-process ("dynamic reading" and "collaborative analyzing") were added. Third, we describe the steps we were not able to implement. [30]

4. Participatory Data Analysis in the ElfE Project

In the following, we provide an overview of the structure and organization of the research process in the ElfE project. There are many important aspects that are beyond the scope of this article, such as the motivation of the participating parents, questions of empowerment regarding the participants, and the interlinking of peer and collaborative research. Further information can be found (in German) on the PartKommPlus website. [31]

The project structure of both ElfE case studies (the district of Marzahn-Hellersdorf in Berlin, and the rural community of Lauchhammer in the county of Oberspreewald-Lausitz in the state of Brandenburg) included two core elements (BÄR & SCHAEFER, 2016). The first was a municipal steering group consisting of various stakeholders from the daycare sector for the purpose of collaborative research. Second, there were research teams in both case studies consisting of parents of daycare-age children (total n=19) and the academic researchers who are the authors of this article. More than half of the 19 participating parents had no previous research experience. Three research teams were formed that met regularly in research workshops in which ongoing participation in project decisions and training in research methods were made possible. [32]

4.1 Data collection and preparation in ElfE

At the end of the first research phase, all three research teams chose semi-structured interviews as the research method. This decision reflects the desire of the parents to conduct "real" research, meaning that they wanted to work with established social science methods (as opposed to using less common visual or performative approaches as found in participatory research). Fourteen of the parents were interested in data collection. An interview guide was the basis for following a mutually designed framework of questions. In total, 27 semi-structured interviews were performed. Two peer researchers conducted a total of four interviews in Russian (their native language). All interviews were transcribed externally. The length varied between 4 and 18 pages, with most being 10 pages long. Although the interview guide was very comprehensive, the interviews were sometimes very short. This demonstrates how difficult it is to strike a balance between holding to an interview protocol while achieving depth in the responses (ACKERMANN & ROBIN, 2017). We reflect on this challenge in Section 5. [33]

The transcripts were sent to the interviewers for corrections. In three cases, the interview partner was also a member of the research team. In those cases, the interview partner reviewed the transcript him/herself. In one of these cases, the interview partner refused to grant approval for the use of the interview in the analysis; reasons for the refusal were not given. A total of 26 interviews, of which two were with participating peer researchers, were included in the analysis. Following the suggestions of ROCHE, GUTA and FLICKER (2010, p.11), one of the academic researchers conducted a follow-up conversation by telephone (debriefing) with the interviewer no later than two days after each interview. Those who did not want to talk by phone could answer the questions in writing instead. From a research ethics point of view, the follow-up conversation was useful for the emotional processing of the interview experience. Due to time constraints, we were unable to include the data gathered during the debriefing in our analysis. [34]

4.2 Participatory data analysis in ElfE

The requirements for a participatory data evaluation are complex and are dependent on the specific characteristics of the research project, as described in Section 3.4. In the following, we present the concept for and implementation of our data analysis which entailed the process of dynamic reading and collaborative analysis from DEPICT, and a coding process based on GTM. [35]

4.2.1 Concept for the data analysis

We began by testing the dynamic reading technique as described by FLICKER and NIXON (2014). This enabled us all to learn how to work together: the peer researchers gained an initial understanding of qualitative data analysis and the academic researchers gained an understanding of how to facilitate the collaborative process. This step led to "labels" in the sense of "headlines" or paraphrases for the interview content that was considered to be important. These phrases were written on note cards and pinned to a bulletin board. Examples of labels are "getting used to daycare" or "providing information to parents." [36]

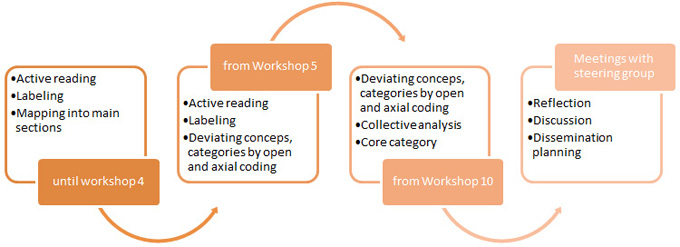

Each team coded their own data openly and axially. For open coding, also called descriptive analysis4) in the context of the research workshops, the teams transferred the contents of the selected text passages in abbreviated form into a higher-level code as intended by GTM: These codes not only summarized the text passages, but also interpreted their possible meaning, which was then written on a note card. For example, the interpretation for the label "getting used to daycare" was "starting the cooperation between parents and daycare staff," and for the label "providing information to parents" it was "transparency." After that, the researchers created relationships between the codes and grouped them into categories. In this way, the teams came to the conclusion that for "getting used to daycare" communication between parents and staff regarding the educational approach is useful, and that the parents also require acclimatization to the daycare setting. The code "transparency" was assigned to the category "basis for trust" which parents gain by receiving specific information regarding their child from the staff. This step was referred to as interpretive analysis in the workshops and was intended to make the axial coding comprehensible for the peer researchers. Figure 1 shows the order in which these steps in the analysis were introduced. Following the interpretive analysis for all interviews, the research teams spent three workshop sessions on a collaborative analysis process based on DEPICT. The codes and categories formed up until that point were visualized on flipcharts and discussed based on the question "What have we found out?"

Figure 1: ElfE analysis process [37]
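The layering of labels, codes, and categories described above can be pictured as a small data structure. The following is only an illustrative sketch, not a tool used in ElfE: the names `NoteCard` and `group_by_category` are hypothetical, and the only data are the two examples given in the text. The category for the first example is not named in the article and is therefore left unset.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NoteCard:
    """Hypothetical model of one note card and the interpretations built on it."""
    label: str                      # dynamic reading: "headline" for a passage
    code: Optional[str] = None      # open coding ("descriptive analysis")
    category: Optional[str] = None  # axial coding ("interpretive analysis")


# The two examples from the text. The category of the first card is not
# named in the article, so it is left unset rather than guessed.
cards = [
    NoteCard(
        label="getting used to daycare",
        code="starting the cooperation between parents and daycare staff",
    ),
    NoteCard(
        label="providing information to parents",
        code="transparency",
        category="basis for trust",
    ),
]


def group_by_category(cards):
    """Group codes under their category; uncategorized codes go under None."""
    groups = defaultdict(list)
    for card in cards:
        if card.code is not None:
            groups[card.category].append(card.code)
    return dict(groups)


board = group_by_category(cards)
```

Keeping the label, code, and category as separate fields mirrors the separation of coding levels that the workshops aimed for; a card can remain at the label or code level until the team agrees on a higher-level interpretation.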

The next step was bringing all three research teams together in one workshop which we called the Big Discussion Day in accordance with M'BAYO and NARIMANI (2015). The research teams presented their results to one another and then created overarching core categories to describe their findings. On that basis, they answered the common research questions in accordance with the selective coding as found in GTM, thereby connecting the majority of the other categories to each other. [38]

4.2.2 Implementation process and the division of tasks between the peer researchers and the academic researchers

All coding was performed by the peer researchers and us working together. The research teams organized the dynamic reading of the transcripts primarily in the form of small group work in the research workshops, dividing the text into sections. For example, one person from the group read a section aloud and the group decided together on the coding, paragraph by paragraph. Alternatively, each member of the small group first read and marked the same section alone; the group then came together to compare, discuss, and agree on their codings. As a "short cut" (VON UNGER, 2014, p.62), the peer researchers at times prepared a portion of the transcripts in advance at home. They brought their results to the next workshop to discuss with the others. For the interviews in Russian, the small group work took place only among the Russian-speaking peers who had also conducted the interviews. [39]

The researchers wrote down all levels of coding on note cards and posted them on bulletin boards. To facilitate the process, they grouped the cards according to the three main sections of the interview: "daycare," "staff," and "parents." During the discussion process, this initial grouping was at times abandoned in order to form new groupings that the participants felt best reflected the data. Within the scope of the Big Discussion Day and the subsequent preparation of results, we used the three sections of the interview as an organizing principle. Parallel to the research workshops, we transferred all codes into MAXQDA, a software program for computer-aided qualitative data analysis. This made it possible to link the coding to the original text passages. The result was an overview that enabled easier access to the original material during the collaborative work. [40]

The progress of the data analysis varied in the three research teams as a result of team size and the number of interviews conducted. Another factor was the limited number of workshops in Lauchhammer, due to the distance from our offices in Berlin (once a month as compared to every 14 days in Marzahn-Hellersdorf). Supplementing the workshops in Lauchhammer with Skype proved impracticable, given that not all peer researchers had a stable internet connection. Because only four interviews were conducted in Lauchhammer, the lower frequency of workshops was not a problem; however, we were not able to complete the step of collaborative analysis as recommended in DEPICT. [41]

During the Big Discussion Day, the three research teams first developed models from their analysis. This exercise proved to be too abstract, so we moved to identifying examples of "good" and "bad" daycare practices from the interviews using the MAXQDA overview, consolidating the examples based on the coding paradigm of STRAUSS and CORBIN (1990). This intermediate step made it possible for the teams to formulate a mutual core category by which they could combine their results. Due to time restrictions, we were not able to complete this cross-team analysis, a factor that should be taken into account when attempting this in future participatory research projects (Section 5). [42]

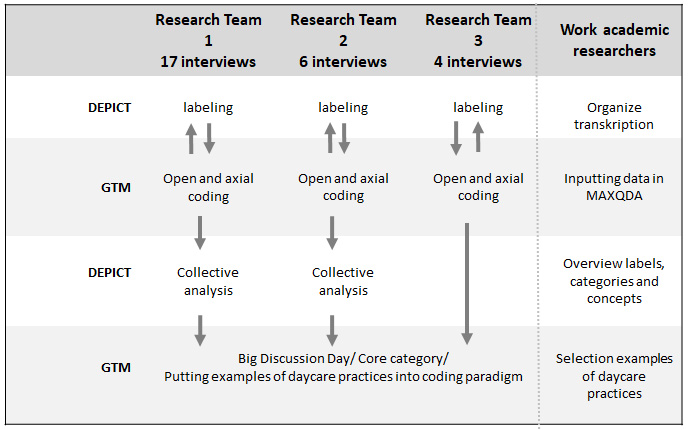

We assisted the research teams by inputting the data into MAXQDA. A peer researcher translated the codes from the Russian interviews. We also provided regularly updated overviews of all codes for each research team. In addition, we provided training support in the form of three pamphlets: "How do you analyze interviews?"; "Analyzing interview transcripts, Step 1: Open coding"; and "Analyzing interview transcripts, Steps 2 and 3: Axial and selective coding." Furthermore, we provided a visual overview of all steps in the analysis in the form of a logic model for coding (Figure 2 shows an overview of the work steps for data analysis).

Figure 2: Visual overview of coding steps [43]

4.2.3 Finalizing and presenting the results

For the preparation of the results, the research teams first examined the entire analysis process, the overview of all categories formed, and the case examples from the interviews. The examples were intended to strengthen the practical application of the findings from ElfE. In participatory research, bringing about change is part of the research process by linking knowledge and action (VON UNGER, 2014) and is one of the most important characteristics of participatory research (WRIGHT, 2013). [44]

At the wish of the steering groups, we focused on the core category of communication between parents and daycare staff, with recommendations for parents, staff, and daycare facilities. These were presented to the daycare providers in Marzahn-Hellersdorf within the scope of a routine meeting of the district workgroup "Daycare for Children"5). In addition, the results were presented at a public meeting of the district parents committee "Daycare" (BEAK) in Marzahn-Hellersdorf. A film by parents for parents and a set of cards for daycare staff were collaboratively developed to transfer the research results into practice settings, using excerpts from the interviews. Other forms of transfer are planned. [45]

4.2.4 Critical appraisal of the data analysis process

It was easy to identify and code statements considered to be important by way of the dynamic reading process within the research workshops. Lively discussions frequently took place regarding the marked statements, relating to the personal experience of the peers. This was ultimately decisive for implementing the process of GTM in accordance with STRAUSS and CORBIN (1990) in a reduced form. It was possible to integrate the knowledge and personal experience of the peers in a comparative and complementary manner; to shape the data analysis process dynamically and reflexively; and to facilitate the different constellations of participants at the research workshops. As a result, we were able to contain the abovementioned "messiness" of participatory research (COOK, 2009; WRIGHT, 2013) in a way that the peer researchers could see that they were making progress. [46]

A central challenge in the workshops was separating the various levels of coding. In particular, the separation between concepts and categories only took place during the research workshops at the suggestion of a maximum of two people, often only one person, who was also involved in the facilitation of the meeting. It would have been better had one additional facilitator concentrated exclusively on the content of the process. We discussed editing the spontaneously assigned concepts and categories as a follow-up to the workshops, but we rejected this so as not to unduly disrupt the interpretations of the peers. [47]

Using MAXQDA was very helpful for developing the core categories, as well as for grounding the categories in examples from the interviews. The Big Discussion Day format was very effective for compiling the results and determining the core category. By being able to cite concrete examples from the interviews, the generalized findings from the research were seen to be relevant to the practice of the daycare centers. However, there was not sufficient time to relate all the categories identified by each research team to the core category. Thus we were not able to reach the goal of GTM; namely, generating a theory based on a complete analysis of the data. [48]

Even though STRAUSS and CORBIN (1990) accepted pragmatic adaptations to GTM, we were not able to implement several basic elements. The organization of the step-by-step data collection within the scope of theoretical sampling did not fit with the peers' wanting to arrange their own interviews, nor with their assumption that a greater number of interviews would bolster the significance of the study. As a result, so many interviews were conducted at the beginning of the research that further data collection was no longer possible, due to a lack of resources. Therefore, it was not possible to refine the defined concepts and categories by way of theoretical saturation. [49]

In addition, STRAUSS and CORBIN envisaged integrating other data in addition to the interviews. In our case, this would have meant data from the debriefing of the interviewers, the relevant research literature on cooperation between parents and daycare staff, and the use of the visual data generated at the research workshops (photos and videos, see MEY & DIETRICH, 2016). Lack of time and the heterogeneity of the data collection for the debriefings prevented us from using this data. The writing of memos during the research process is a common additional source of data. Due to the lack of time, we were not able to implement this form of data collection. However, the research teams created a flipchart for each research workshop in which they recorded important content from their discussions along with organizational questions. Although we did not include the flipcharts in the data analysis per se, we did use them in the introduction to the subsequent workshop. [50]

Participatory data analysis is desirable in participatory health research because it is consistent with the approach and has epistemological advantages. The core idea is to generate transformative knowledge for social change, such as addressing issues of health equity. The accompanying "messiness" is not only unavoidable, but it also represents a sign of quality in collaborative work. In ElfE, we supplemented the coding process of GTM with elements from the DEPICT process for participatory data analysis. The driving principle was shared decision-making among the academic researchers and peer researchers. We intended the peers to have a greater influence early in the analysis than in the previous participatory research studies using GTM described in Section 3.3 (ACKERMANN & ROBIN, 2017; DICK, 2007). [51]

We chose to use GTM for several reasons. It allowed us to fulfill the desire of the peers to do "real" research by using a standard qualitative research approach. GTM is also a process that is well documented in the literature. Although participatory research does not aspire to a rigorous adherence to methods (ABMA et al., 2019), falling back on proven methods provides guidance in the often difficult to understand, cyclical process of data analysis. GTM is also very compatible with participatory processes, especially due to its action orientation, its focus on interpretation, and its cyclical approach. It supports the opening up of a space for discussion and interpretation by all participants, which is a constitutive element of participatory research. In the following, we will examine our experience with the use of GTM, including the adaptations we made. [52]

The cooperative data analysis that took place in the research workshops functioned well overall. The peers engaged with this time-intensive, iterative process and participated in it continuously (even if the level of participation was less pronounced than in other phases of the research). Instead of training the peers beforehand, it proved advantageous to provide training as needed over the course of the workshops, making it possible to interlink the information on method with the knowledge of the peers. The use of GTM enabled the explicit and systematic integration of the peers' knowledge into the data analysis process. [53]

The coding process was participatory, thus ensuring the participatory validity (WRIGHT, 2013) of the analysis. Reading all interviews together in the research workshops was an important aspect. In spite of the cyclical approach and the often dynamic discussion process, the delineation of the steps in coding made it possible for all participants to see that progress was being made. Being able to see progress was an important element in the parents' experience of satisfaction with the work process. The peers demonstrated a high degree of willingness to participate in what for them was sometimes an unusual process of reading and editing long texts. However, they saw the process as finished with the reading and coding in the research workshops, whereas the academic researchers wanted to continue with several iterative loops of interpretation. From our point of view, this tension posed a limitation in the analysis process and represents a dilemma regarding the reliability of participatory research (McCARTAN, SCHUBOTZ & MURPHY, 2012). A similar tension existed with regard to theoretical sampling. The peer researchers considered the summary of findings for the purpose of identifying the need for further knowledge as being a repetition of previous discussions. Our intent was to make plain which aspects from the interview material could be additionally refined with the everyday knowledge of the peers. [54]

Considering the work of CREAN (2018), we could have expected the peers to add their perspectives throughout the research process, especially during the data analysis, and thus to be able to analyze the transcripts with a greater distance from their personal experiences. On the contrary, we had the impression that the interpretation of the data remained strongly tied to the experiences of the peers. Thus, case-specific analyses and the contrasting of interviews were not possible. [55]

The process of participatory coding required a considerable amount of time (30 research workshops for the three teams). This time was missing later for working together in publicizing the findings, resulting in a lower level of participation in this final phase. Over time, we observed less regular participation in the research workshops. This may have been due to the short time intervals between the workshops, the lengthiness of the overall process, and/or the families' "growing out" of the daycare life phase. It may also indicate flagging interest in the process of consolidating the findings. Regarding the adaptations of GTM and DEPICT, we see that the various levels of coding could not be implemented in a clear-cut manner in the research workshops. There was also not sufficient time for a greater consolidation of the material by way of constant comparison or the inclusion of additional material (especially memos). [56]

We had a very good experience with the cross-team compilation of the categories considered to be particularly important by the peers, as well as in deriving a core category. However, the coding process could not be completed for all the categories identified. Some questions from the interview guidelines could also not be included in the compilation. For that reason, we also did not use all identified categories for the presentation of the ElfE results. This experience suggests that a more sparing approach would have been more expedient, particularly by reducing the amount of data collected. [57]

STRAUSS and CORBIN (1990) posed questions to guide the research process according to GTM principles. Because GTM could only be partially implemented, we were not able to use the questions to reflect on the quality of our work. We used general criteria for qualitative research instead (FLICK, 2012 [1995]; STEINKE, 2010 [2000]) which show how ElfE benefited from the coding process. The intersubjective comprehensibility was increased. The process also profited from detailed description. The methodical approach was easy to communicate, and it made possible the comprehensive integration of the knowledge and experience of the peer researchers, thus promoting the generation of transformative knowledge. The peers and the professionals considered the examples from the interviews, focused on the challenges of cooperation between parents and daycare staff, to be particularly valuable. Thus, it was less the generalizing statements than the examples from everyday practice that were seen to have the most benefit. Although all findings from the studies could be validated in a communicative and comprehensive manner, they only referred to the categories considered particularly important by the participants. Further analysis of the results will be undertaken at a later time. [58]

Participatory research creates local evidence, meaning the focus is on a small-scale local level. Our project can be seen as a case study without the goal of creating generalizable knowledge (WRIGHT, 2013). The project showed that participatory research can be conducted with novice peer researchers in both the data collection and analysis phases. The brevity of the interviews is a sign that a longer pretest phase might have been useful for building competency as interviewers. The potential of the peer researchers was not fully realized and the data were lacking in depth. [59]

The data analysis was not completed as specified by GTM. Substantiated findings were generated that offer a basis for action recommendations, but no theory was developed. There was also no specific analysis for special target groups. Parents with daycare-age children from the Berlin district of Marzahn-Hellersdorf and the community of Lauchhammer were approached as peers. The professional and educational backgrounds of the participating parents were, however, diverse. With regard to cultural background, parents with Russian as their native language were included in the Marzahn-Hellersdorf case study, and in Lauchhammer, parents of children with special educational needs were also interviewed. These differences were not taken into account in the analysis, due to lack of time. [60]

The coding process of GTM supported the goals of participatory research, specifically to make possible the collective generation of knowledge and to produce locally valid knowledge. However, the research teams were only able to produce theory in a rudimentary fashion. Basic GTM elements such as theoretical sampling, memo writing, and the inclusion of other data sources could not be incorporated sufficiently. This was due to a lack of means to implement these elements, limited time, and the varying interests of us as academic researchers as compared to the interests of the peer researchers. Finding ways to incorporate these elements is important for future participatory research projects. [61]

One approach would be a division of labor among the participants, which may involve more input by the academic researchers during the analysis process. Theoretical sampling would better support the development of concepts and categories (STRAUSS & CORBIN, 1990); however, this presupposes that the peer researchers see the relevance of this process. Our experience is that peer researchers assume that as much data as possible should be collected. To keep the data analysis manageable, only a selected group of peers can participate in data collection. Therefore, a decision should be made as to which peers will participate in data collection. [62]

5.2 Recommendations for participatory data analysis

We recommend the following for participatory data analysis:

The use of GTM makes it possible to integrate the everyday knowledge and experience of peer researchers into the data analysis process and to interlink that with the knowledge of the academic researchers. This supports an intensive exchange among all participants, resulting in the generation of new knowledge. From an epistemological perspective it is therefore more suitable as a basis for data analysis in participatory research than qualitative content analysis.

Data collection should be performed sparingly, even if this stands contrary to the wishes of the peer researchers. An iterative approach as recommended for GTM would be more useful, building the amount of data gradually. Theoretical saturation should be the criterion for deciding whether to collect more data.

The extent of the data collection should also be considered in regard to practical questions resulting from the organization of numerous research teams with a variety of research questions.

The implementation of the coding as well as the other core elements of GTM requires prior experience on the part of the academic researchers, planning for the participatory work, and sufficient staff resources. For this, at least two staff members are required in addition to the staff facilitating the research workshops and other meetings.

Any editing of the results from the research workshops should be discussed together with the peer researchers. Time and staff resources must be available for this step.

Debriefing following the interviews is important for alleviating the emotional impact of the interview experience as well as for examining ethical questions. The debriefing should be based on memo discussions as described in GTM. The importance and benefit of the debriefings should be made clear to all participants.

A collaborative approach to data analysis has proven successful, including the process of entering coding into data analysis software. It is important that all steps of the process are managed jointly with the input of the various participant groups.

The incorporation of dynamic reading from DEPICT into the GTM process provides easy access to the process of data analysis. The collaborative analysis aspect, as well as the specifics of GTM coding, need to be presented clearly.

An important task of the academic researchers during the data analysis is to promote the comparison of data, particularly by recognizing and drawing attention to the differences between interviews and advocating for an iterative approach in data collection (BERG & MILMEISTER, 2008).

Although it is difficult to estimate the time required for a participatory data analysis process, the available resources should be calculated in advance. This calculation should be used as a basis for keeping the process manageable in terms of available resources. [63]

1) We wish to thank the research consortium PartKommPlus for assuming the costs of this English translation and Prof. Michael T. WRIGHT for his careful editing of the manuscript.

2) The research process was designed by three groups of researchers made up of parents, service providers, and academic partners. In this article, the term "we" refers to the academic partners who facilitated the use of research methods. In accordance with the publication agreement of the project, other ElfE collaborators are also listed as authors.

3) Translation of this and further German citations are ours.

4) The terms used are a translation of the usual GTM terminology into everyday language so as to increase access for the parents and to promote the mutual interpretation of the material. The analysis extended beyond the summary description commonly found in a content analysis.

5) The workgroup "Daycare for Children" fulfills the regulatory requirement that the work of all daycare providers be coordinated under the direction of the district youth welfare office: §78 Child and Youth Welfare Law (KJHG) of the district of Marzahn-Hellersdorf (AG 78).

Abma, Tineke; Banks, Sarah; Cook, Tina; Dias Sónia; Madsen, Wendy; Springett, Jane & Wright, Michael (2019). Participatory research for health and social well-being. Cham: Springer.

Access Alliance Multicultural Health and Community Services (2011). Community-based research toolkit: Resource for doing research with community for social change. Toronto: Access Alliance Multicultural Health and Community Services.

Ackermann, Timo & Robin, Pierinne (2017). Partizipation gemeinsam erforschen: Die reisende Jugendlichen-Forschungsgruppe (RJFG) – ein Peer-Research-Projekt in der Heimerziehung. Hannover: Schöneworth.

Andresen, Sabine & Galic, Danijela (2015). Kinder. Armut. Familie. Alltagsbewältigung und Wege zu wirksamer Unterstützung. Gütersloh: Bertelsmann.

Bär, Gesine & Schaefer, Ina (2016). Partizipation stärkt integrierte kommunale Strategien für Gesundheitsförderung. Public Health Forum, 24(4), 255-257.

Behrisch, Birgit & Wright, Michael (2018). Die Ko-Produktion von Wissen in der Partizipativen Gesundheitsforschung. In Stefan Selke & Anette Treibel (Eds.), Öffentliche Gesellschaftswissenschaften. Öffentliche Wissenschaft und gesellschaftlicher Wandel (pp.307-321). Wiesbaden: Springer VS.

Berg, Charles & Milmeister, Marianne (2008). Im Dialog mit den Daten das eigene Erzählen der Geschichte finden. Über die Kodierverfahren der Grounded-Theory-Methodologie. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 9(2), Art. 13, http://dx.doi.org/10.17169/fqs-9.2.417 [Accessed: February 2, 2018].

Bergold, Jarg & Thomas, Stefan (2010). Partizipative Forschung. In Günter Mey & Katja Mruck (Eds.), Handbuch Qualitative Forschung in der Psychologie (pp.333-345). Wiesbaden: Springer VS.

Bergold, Jarg & Thomas, Stefan (2012). Participatory research methods: A methodological approach in motion. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 13(1), Art. 30, http://dx.doi.org/10.17169/fqs-13.1.1801 [Accessed: February 2, 2018].

Bezirksamt Marzahn-Hellersdorf von Berlin (n. d.). Ergebnisse der Einschulungsuntersuchungen 2016/17. Report, https://www.berlin.de/ba-marzahn-hellersdorf/suche.php?q=ergebnisse+Einschulunsguntersuchungen+/ [Accessed: January 18, 2021].

Bezirksamt Mitte von Berlin (2013). Einrichtungsbesuch und Kindergesundheit im Bezirk Berlin Mitte, https://www.berlin.de/ba-mitte/politik-und-verwaltung/service-und-organisationseinheiten/qualitaetsentwicklung-planung-und-koordination-des-oeffentlichen-gesundheitsdienstes/publikationen/index.php/detail/19 [Accessed: December 7, 2017].

Böhm, Andreas (2010 [2000]). Theoretisches Codieren: Textanalyse in der Grounded Theory. In Uwe Flick, Ernst von Kardorff & Ines Steinke (Eds.), Qualitative Forschung. Ein Handbuch (8th ed., pp.475-485). Reinbek: Rowohlt.

Bryant, Antony & Charmaz, Kathy (Eds.) (2007). The Sage handbook of grounded theory. London: Sage.

Cashman, Suzanne B.; Adeky, Sarah; Allen, Alex J.; Corburn, Jason; Israel, Barbara A.; Montaño, Jaime; Rafelito, Alvin; Rhodes, Scott D.; Swanston, Samara; Wallerstein, Nina & Eng, Eugenia (2008). The power and the promise: Working with communities to analyze data, interpret findings, and get to outcomes. American Journal of Public Health, 98(8), 1407-1417.

Cook, Tina (2009). The purpose of mess in action research: Building rigour through a messy turn. Educational Action Research, 17(2), 277-291.

Crean, Mags (2018). Minority scholars and insider-outsider researcher status: Challenges along a personal, professional and political continuum. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 19(1), Art. 17, http://dx.doi.org/10.17169/fqs-19.1.2874 [Accessed: March 13, 2018].

Dick, Bob (2007). What can grounded theorists and action researchers learn from each other? In Antony Bryant & Kathy Charmaz (Eds.), The Sage handbook of grounded theory (pp.398-416). London: Sage.

Flick, Uwe (2012 [1995]). Qualitative Sozialforschung. Eine Einführung (5th ed.). Reinbek: Rowohlt.

Flicker, Sarah & Nixon, Stephanie (2014). The DEPICT model for participatory qualitative health promotion research analysis piloted in Canada, Zambia and South Africa. Health Promotion International, 30(3), 616-624, https://doi.org/10.1093/heapro/dat093 [Accessed: February 2, 2018].

Geene, Raimund; Richter-Kornweitz, Antje; Strehmel, Petra & Borkowski, Susanne (2016). Gesundheitsförderung im Setting Kita. Ausgangslage und Perspektiven durch das Präventionsgesetz. Prävention Gesundheitsförderung, 11, 230-236.

Glaser, Barney G. & Strauss, Anselm L. (1967). The discovery of grounded theory. Strategies for qualitative research. Chicago, IL: Aldine.

Greenall, Paul (2006). The barriers to patient-driven treatment in mental health: Why patients may choose to follow their own path. Leadership in Health Services, 19(1), 11-25.

Hildenbrand, Bruno (2010 [2000]). Anselm Strauss. In Uwe Flick, Ernst von Kardorff & Ines Steinke (Eds.), Qualitative Forschung. Ein Handbuch (8th ed., pp.32-42). Reinbek: Rowohlt.

International Collaboration for Participatory Health Research (ICPHR) (2013). Position paper 1: What is participatory health research?, http://www.icphr.org/uploads/2/0/3/9/20399575/ichpr_position_paper_1_defintion_-_version_may_2013.pdf [Accessed: January 28, 2018].

Jackson, Suzanne F. (2008). A participatory group process to analyze qualitative data. Progress in Community Health Partnerships, 2(2), 161-170.

Lamnek, Siegfried (2010 [1988]). Qualitative Sozialforschung: Ein Lehrbuch (6th ed.). Landsberg: Beltz.

Ledwith, Margaret (2017). Emancipatory action research as a critical living praxis: From dominant narratives to counternarrative. In Lonnie L. Rowell, Catherine D. Bruce, Joseph M. Shosh & Margaret M. Riel (Eds.), The Palgrave international handbook of action research (pp.49-62). New York, NY: Palgrave Macmillan.

Legewie, Heiner & Schervier-Legewie, Barbara (2004). "Research is hard work, it's always a bit suffering. Therefore, on the other side research should be fun". Anselm Strauss in conversation with Heiner Legewie and Barbara Schervier-Legewie. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 5(3), Art. 22, http://dx.doi.org/10.17169/fqs-5.3.562 [Accessed: December 15, 2018].

M'Bayo, Rosaline & Narimani, Petra (2015). Big Discussion Day 22.01.2015. Partizipative Strategien im Umgang mit Drogengebrauch und Zugang zum (Sucht-) Hilfesystem für Menschen mit afrikanischem Hintergrund, https://digital.zlb.de/viewer/metadata/15913664/1/ [Accessed: January 18, 2021].

Marent, Benjamin; Forster, Rudolf & Nowak, Peter (2012). Theorizing participation in health promotion: A literature review. Social Theory & Health, 10, 188-207.

Marent, Benjamin; Forster, Rudolf & Nowak, Peter (2015). Conceptualizing lay participation in professional health care organisations. Administration & Society, 47(7), 827-850.

May, Michael (2008). Die Handlungsforschung ist tot – es lebe die Handlungsforschung!. In Michael May & Monika Alisch (Eds.), Praxisforschung im Sozialraum. Fallstudien in ländlichen und urbanen sozialen Räumen (pp.207-238). Opladen: Budrich.

Mayring, Philipp (2010 [1983]). Qualitative Inhaltsanalyse: Grundlagen und Techniken (11th ed.). Weinheim: Beltz.

Mayring, Philipp (2015 [1983]). Qualitative Inhaltsanalyse: Grundlagen und Techniken (12th ed.). Weinheim: Beltz.

McCartan, Claire; Schubotz, Dirk & Murphy, Jonathan (2012). The self-conscious researcher—post-modern perspectives of participatory research with young people. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 13(1), Art. 9, https://doi.org/10.17169/fqs-13.1.1798 [Accessed: June 6, 2018].

Meinefeld, Werner (2010 [2000]). Hypothesen und Vorwissen in der qualitativen Forschung. In Uwe Flick, Ernst von Kardorff & Ines Steinke (Eds.), Qualitative Forschung. Ein Handbuch (8th ed., pp.265-275). Reinbek: Rowohlt.

Mey, Günter & Dietrich, Marc (2016). From text to image—Shaping a visual grounded theory methodology. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 17(2), Art. 2, http://dx.doi.org/10.17169/fqs-17.2.2535 [Accessed: June 6, 2018].

Mey, Günter & Mruck, Katja (Eds.) (2011 [2007]). Grounded Theory Reader (2nd ed.). Wiesbaden: VS Verlag für Sozialwissenschaften.

Moser, Heinz (1995). Grundlagen der Praxisforschung. Freiburg: Lambertus.

Mruck, Katja in collaboration with Günter Mey (2000). Qualitative research in Germany. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 1(1), Art. 4, http://dx.doi.org/10.17169/fqs-1.1.1114 [Accessed: June 9, 2018].

Muckel, Petra (2011 [2007]). Die Entwicklung von Kategorien mit der Methode der Grounded Theory. In Günter Mey & Katja Mruck (Eds.), Grounded Theory Reader (2nd ed., pp.333-352). Wiesbaden: VS Verlag für Sozialwissenschaften.

Olson, Mitchell M. (2007). Using grounded action methodology for student intervention—driven succeeding: A grounded action study in adult education. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 9(1), Art. 9, http://dx.doi.org/10.17169/fqs-9.1.340 [Accessed: December 15, 2018].

Ospina, Sonia; Dodge, Jennifer; Foldy, Erica & Hofmann-Pinilla, Amparo (2008). Taking the action turn: Lessons from bringing participation to qualitative research. In Peter Reason & Hilary Bradbury (Eds.), The Sage handbook of action research. Participative inquiry and practice (pp.420-434). London: Sage.

PartNet – Netzwerk Partizipative Gesundheitsforschung (2017). Partizipative Gesundheitsforschung – eine Definition, http://partnet-gesundheit.de/ueber-uns/ [Accessed: January 18, 2021].

Przyborski, Aglaja & Wohlrab-Sahr, Monika (2014). Qualitative Sozialforschung. Ein Arbeitsbuch (4th ed.). München: Oldenbourg.

Reason, Peter & Torbert, William R. (2001). The action turn: Toward a transformational social science. Concepts and Transformation, 6(1), 1-37.

Roche, Brenda; Guta, Adrian & Flicker, Sarah (2010). Peer research in action I: Models of practice, http://www.wellesleyinstitute.com/wp-content/uploads/2011/02/Models_of_Practice_WEB.pdf [Accessed: February 4, 2018].

Roßbach, Hans-Günther; Kluczniok, Katharina & Kuger, Susanne (2008). Auswirkungen eines Kindergartenbesuchs auf den kognitiv-leistungsbezogenen Entwicklungsstand von Kindern. In Hans-Günther Roßbach & Hans-Peter Blossfeld (Eds.), Frühpädagogische Förderung in Institutionen (pp.139-158). Wiesbaden: VS Verlag für Sozialwissenschaften.

Schreier, Margrit (2014). Varianten qualitativer Inhaltsanalyse: ein Wegweiser im Dickicht der Begrifflichkeiten. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 15(1), Art. 18, http://dx.doi.org/10.17169/fqs-15.1.2043 [Accessed: December 15, 2018].

Simmons, Odis E. & Gregory, Toni A. (2003). Grounded action: Achieving optimal and sustainable change. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 4(3), Art. 27, http://dx.doi.org/10.17169/fqs-4.3.677 [Accessed: December 15, 2018].

Steinke, Ines (2010 [2000]). Gütekriterien qualitativer Forschung. In Uwe Flick, Ernst von Kardorff & Ines Steinke (Eds.), Qualitative Forschung. Ein Handbuch (8th ed., pp.319-331). Reinbek: Rowohlt.

Strauss, Anselm (1987). Qualitative analysis for social scientists. Cambridge: Cambridge University Press.

Strauss, Anselm & Corbin, Juliet (1990). Basics of qualitative research: Grounded theory procedures and techniques. Newbury Park, CA: Sage.

Teram, Eli; Schachter, Candice & Stalker, Carol (2005). The case for integrating grounded theory and participatory action research: Empowering clients to inform professional practice. Qualitative Health Research, 15(8), 1129-1140.

Van der Donk, Cyrilla; van Lanen, Bas & Wright, Michael (2014). Praxisforschung im Sozial- und Gesundheitswesen. Bern: Huber.

Van Lieshout, Famke & Cardiff, Shaun (2011). Dancing outside the ballroom. In Joy Higgs, Angie Titchen, Debbie Horsfall & Donna Bridges (Eds.), Creative spaces for qualitative researching: Living research (pp.223-234). Rotterdam: Sense Publishers.

Von Unger, Hella (2012). Partizipative Gesundheitsforschung: Wer partizipiert woran?. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 13(1), Art. 7, http://dx.doi.org/10.17169/fqs-13.1.1781 [Accessed: February 4, 2018].

Von Unger, Hella (2014). Partizipative Forschung. Einführung in die Forschungspraxis. Wiesbaden: Springer VS.

Wright, Michael (2013). Was ist Partizipative Gesundheitsforschung? Positionspapier der International Collaboration for Participatory Health Research (ICPHR). Prävention und Gesundheitsförderung, 8(3), 122-131.

Ina SCHAEFER is a health scientist. She is currently employed as a research assistant at the Alice Salomon University of Applied Sciences Berlin, where she conducts participatory research with parents as part of the project ElfE – Parents Asking Parents.

Contact:

Ina Schaefer

Alice Salomon Hochschule Berlin

Alice Salomon Platz 5

12627 Berlin, Germany

E-mail: ina.schaefer@ash-berlin.eu

URL: https://www.ash-berlin.eu/en/research/research-projects-from-a-z/elfe/

Prof. Dr. Gesine BÄR is a professor of participatory approaches in the social and health sciences at the Alice Salomon Hochschule Berlin. She directs the project ElfE and is also a spokesperson for the network PartNet – Participatory Health Research.

Contact:

Prof. Dr. Gesine Bär

Alice Salomon Hochschule Berlin

Alice Salomon Platz 5

12627 Berlin, Germany

E-mail: baer@ash-berlin.eu

URL: https://www.ash-berlin.eu/hochschule/lehrende/professor-innen/prof-dr-gesine-baer/

Contributors of the Research Project ElfE

Contact:

Can be reached via Prof. Dr. Gesine Bär

E-mail: baer@ash-berlin.eu

URL: http://partkommplus.de/teilprojekte/elfe/elfe-erste-foerderphase/

Schaefer, Ina; Bär, Gesine & the Contributors of the Research Project ElfE (2021). The Analysis of Qualitative Data With Peer Researchers: An Example From a Participatory Health Research Project [63 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 20(3), Art. 6, http://dx.doi.org/10.17169/fqs-20.3.3350.