Volume 6, No. 1, Art. 39 – January 2005

Using Someone Else's Data: Problems, Pragmatics and Provisions

Jo-Anne Kelder

Abstract: In the current climate of requirements for ethical research, qualitative research data is often archived at the end of each unique research project. Yet qualitative data is capable of being revisited from multiple perspectives, and used to answer research questions different from those envisaged by the original data collector. Using other people's data saves time, avoids unnecessarily burdening your research participants, and adds confidence in interpreting your own data. This paper is a case of how data from one research project was acquired and then analysed to ground the analysis of a separate project using Distributed Cognition (DCog) theory and its associated methodology, cognitive ethnography. Theoretical considerations were the benefits and difficulties of using multiple sources and types of data in creating a theoretical account of the observed situation. Methodological issues included how to use (and not misuse) other people's data and coherently integrate data collected over time and for different purposes. Current ethics guidelines come from a paradigm of control suited to experimental, quantitative research approaches. A new paradigm that recognises researchers' inherent lack of control over qualitative research contexts needs to be developed. This research demonstrates the benefits of designing an ethics application to provide for data reuse and giving participants choice over the level of protection they require.

Keywords: ethics, secondary data, distributed cognition theory, weather forecasting

Table of Contents

1. Distributed Cognition Theory and Secondary Data

2. Theoretical Considerations

3. Methodology Issues

4. Ethical Considerations

5. Conclusions

1. Distributed Cognition Theory and Secondary Data

In 2003 I commenced an ethnographic research project characterised by several external constraints (short time frame, few resources, very busy participants) that strongly influenced my methodological decisions. I was using an established theory, Distributed Cognition (DCog) theory (HUTCHINS, 1995), in a new domain: weather forecasting at the Australian Bureau of Meteorology. I did not have the time or resources to deploy the usual variety of ethnographic tools and techniques over several years in a particular domain, so I adapted the DCog research process to my particular context, aiming to maintain the principle of using multiple data sources to create a chain of representations in which to ground the interpretation (HUTCHINS & KLAUSEN, 1996). [1]

Weather forecasting is complex cognitive work, which has collaborative aspects and is highly dependent on information systems to deliver, process and package weather data in appropriate formats. It is dynamic, socio-technical work embedded in a strong culture. These characteristics made it ideal for using DCog theory (HAZLEHURST, 2003, pers. comm., 11th Feb). HOLLAN, HUTCHINS, and KIRSH (2000) explicate a framework for DCog research that integrates theory, cognitive ethnography and experiment. My research constitutes the cognitive ethnography component of that framework, with data collection and analysis guided by DCog theory. [2]

Several DCog ethnographies had already been written on the basis of years of extensive data collection. Typically, DCog analyses validate their conclusions against multiple data sources, including video observation, interviews, participation (for example, HOLDER (1999) learnt to fly), field notes and organisational documents (HAZLEHURST, 1994; HOLDER, 1999; HUTCHINS, 1995; HUTCHINS & KLAUSEN, 1996). [3]

I had eight months available for my research project. I did not have time to do a weather forecast training program, conduct multiple interviews, record and analyse video data and collect and absorb all possible organisation documents from the Bureau. These time and resource constraints meant I made pragmatic choices so I could realistically gain access to enough data to strengthen the validity of my analysis. [4]

Another clearly important factor in the data choices I had to make was the work situation of my potential participants. Forecast work is very busy, and there are not enough forecasters available to cover the 24/7 roster comfortably. Thus taking forecasters out of their shift to interview them was not possible, and asking them to give up extra time to participate in research was problematic. [5]

It was important not to unnecessarily burden forecasters with extra work because the Bureau has instituted an information systems development methodology that relies heavily on forecaster involvement, and forecasters already participate in forecast process analysis research (BALLY, 2003b) as well as research conducted from a Knowledge Management perspective (LINGER & BURSTEIN, 2001; SHEPHERD, 2002; STERN, 2003). More significantly, the focus of my research was the dynamic, situated and embodied nature of forecast work, characteristics which cannot be captured in an interview. [6]

Thus I needed a good source of information from forecasters about the understanding of forecast work at a tacit level to ground the interpretations I might make from video observation. However, I did not want to burden participants who were already under pressure with increasing workloads and expectations. [7]

When I read the interview transcript samples in SHEPHERD (2002) from a DCog theoretical perspective, it was clear that access to the full transcripts would provide the requisite information to undergird my analysis. SHEPHERD's was a research project on weather forecasters from a Knowledge Management (KM) perspective. He conducted ten semi-structured interviews with senior forecasters experienced in different types of forecasting, and his research aimed to elicit the tacit knowledge employed by forecasters doing their work. The interview transcripts were analysed using thematic coding. However, the six emergent themes mapped only weakly to the KM literature (SHEPHERD, 2002) and made a limited contribution to the Bureau's need for a clear understanding of the tacit elements of the forecast process.

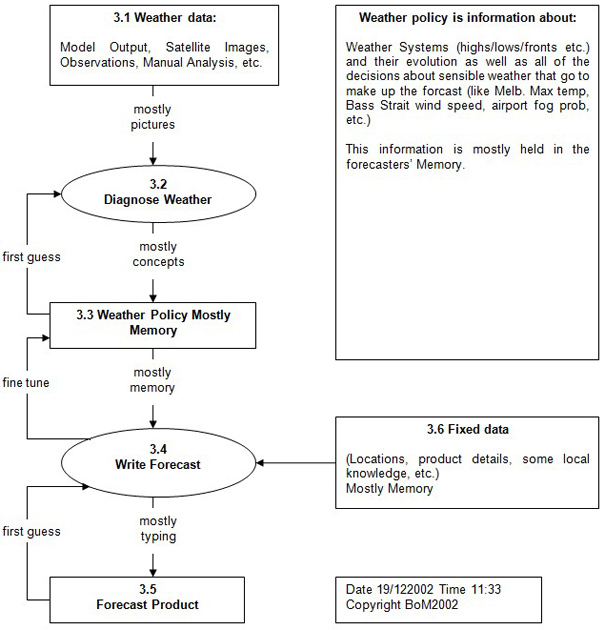

Figure 1: Forecast Process Diagram: informational perspective (BALLY, 2003b) [8]

BALLY's (2003b) work on the forecast analysis process produced 400 diagrams from various perspectives (organisational, functional and informational), but they did not model the subjective elements of forecasting, including the tacit and implicit knowledge brought to bear in forecast decisions. Nor did they model aspects of forecasting where the activity is distributed across people and artefacts. I was asked to include in my research an assessment of the validity of the informational perspective (Figure 1). This diagram was also an important source of secondary data for my analysis. The forecast process articulated in Figure 1 occurs over the course of each shift (between seven and twelve hours in duration). However, the video observation demonstrated that this forecast process also guides the structure and format of the briefing delivered by a senior forecaster (SF) when handing over his shift. Handover is essentially a summary of the reasoning processes and salient information used to construct the previous shift's forecast policy. On this basis, my interpretation of the mental schemas held by forecasters had to fit within the framework of Figure 1. [9]

The remainder of this paper focuses on how I used SHEPHERD's (2002) data, particularly the theoretical and methodological issues in relation to using DCog theory as a research approach which actively seeks out secondary data as a mechanism for validating interpretation of the primary data. It also outlines the constraints on my research generated by ethical considerations and suggests two implications for ethical research. The constraints were grounded in my own commitment to look after research participants (including ones anonymous to me) and those imposed by ethics policies as interpreted and enforced by an ethics committee. [10]

My research field is (broadly) computer supported collaborative work domains. At the theoretical level, DCog theory views collaborative work as a system of individuals interacting within their natural environment. It observes cognitive behaviour and focuses on the way artefacts mediate human cognition. DCog theory rejects the laboratory as the appropriate context for understanding cognition and argues for studying cognition as it occurs in its natural setting (HUTCHINS, 1995). There are several key features of DCog. It uses a metaphor of cognition as computation, and its unit of analysis distributes cognitive activity socially and technically across people and artefacts over time. Another feature is that DCog views cognition as essentially cultural, and defines computation as the propagation of representational states across representational media over time (HUTCHINS, 1995). [11]

From a theoretical perspective, I decided to follow the methodology and analysis set out by HUTCHINS and KLAUSEN (1996) as closely as possible to create an interpretation of the data grounded in the ethnography of the setting. They used multiple sources, types and representations of data in creating their account of cognitive work in an aeroplane cockpit in order to explicitly establish the connections between the data and its interpretation using DCog theory. [12]

DCog theory was the lens for viewing and recording observations in the forecast office, and guided the data collection. Of primary interest was data that had any association with cognitive activity. The Australian Bureau of Meteorology, and in particular the Hobart Regional Forecast Centre, had many different sources and types of relevant information. My primary data source was the video observation of an entire forecast shift (6.5 hours). The setting ethnography included: the Annual Report (BUREAU OF METEOROLOGY, 2003), printouts of weather charts and forecast products, screen prints of meteorological information systems (MetIS) applications, field notes including sketches of the forecast office layout, BALLY's (2003b) forecast process diagrams and SHEPHERD's (2002) transcripts. I also emailed a questionnaire to one forecaster to check my transcription of any technical language in the video segment selected for detailed analysis. Most of my data was collected with the aim of avoiding interruptions to forecasters or forecast work. For example, printouts of weather charts were retrieved from recycle bins, and the forecasters' pencilled notes on them were used, in conjunction with comments from the interview transcripts on how forecasters kept track of the forecast process, to discuss aspects of artefact-mediated cognition. [13]

SHEPHERD's interview transcripts were important in using DCog theory to analyse the video data. My research was trying to articulate the tacit knowledge embedded in forecasters' memories, artefacts and work practices. This knowledge is difficult and sometimes impossible to articulate, even by the person expert in his or her work (POLANYI, 1962). However, where workers have been asked to articulate the tacit components of their work, that articulation forms a boundary for interpretation, grounding it in reality. [14]

SHEPHERD's interview transcripts guarded against an unbalanced interpretation of the video data. For example, in the video observation, when the outgoing senior forecaster (SF) returned from doing a radio broadcast to complete the handover of his shift, he expressed frustration that the radio announcer had left him waiting. The ensuing conversation revealed this was not an isolated occurrence. However, my interpretation of this frustration at being kept waiting was moderated by interview transcripts showing that, although they are an interruption, radio broadcasts are viewed as a positive aspect of forecasting. One interview participant thought they made forecasters more "accountable for our forecasts" and therefore "it makes us a little more responsible in our approach" so that "you try and do your best to make sure that you can live up to your reputation". [15]

The interview transcripts also provided reasons for otherwise unexplained actions observed in the video transcript, for example scribbling notes on printouts of weather charts. Furthermore, because there was a range of interviewees, variations or consistency in motivations and rationales were evident and could be taken into account. A limitation, however, was that I only had participant consent to use four of the ten interview transcripts. [16]

Another theoretical consideration was that the interviews were not conducted from a DCog perspective. However, many responses to the interview questions were clearly relevant. This raises the question of how congruent the research aims of different projects need to be in order to reuse data responsibly. In this case, SHEPHERD's research interviewed senior forecasters, asking them how they went about the job of creating a forecast, what role wisdom and experience played, what resources they used, and how they dealt with work pressures such as interruptions and deadlines. The aim was to articulate forecasters' tacit knowledge and make it explicit. My research observed forecasters at work interacting with each other and their environment. The aim was to articulate how they worked from a cognitive perspective, and identify the distributed aspects of their thinking processes (especially the use of artefacts to aid thinking). Both research projects were about forecasters' cognitive work and focussed on filling the Bureau of Meteorology's knowledge gap between what was already articulated about the forecast process and what was not (see Figure 1). This congruence made using SHEPHERD's data relatively straightforward. [17]

There was an additional (unexpected) benefit of analysing SHEPHERD's data in a DCog framework. SHEPHERD's data was collected from a different theoretical framework and for a different purpose. However, it could be fruitfully analysed to affirm and ground insights generated from data collected and analysed in a DCog theoretical framework. This facility added confidence in the strength and usefulness of DCog theory. This is a case where secondary data can be used to reflect on the applicability and validity of a theory. [18]

Qualitative research is committed to an analysis of data that incorporates the context in which the data was collected into its interpretation. But context is a dynamic concept for the qualitative researcher, and its delineation is a function of the designated research boundaries and locus of attention. In addition, the researcher's understanding of the research domain grows organically over time. The data record increases in value as layers of understanding accumulate and as the researcher's ability to comprehend and record details of the situation under observation grows. This can affect the research boundaries and locus of attention (and such effects are not necessarily intended or noticed). Then, as time passes and circumstances change, some of that data record can become obsolete or irrelevant to current research questions. Also, the context in which the data was collected can be lost, and with it, much of its usefulness for reuse. [19]

This has general implications for the use of secondary data and had specific implications for my research. Context had to be considered at two levels: the context of the researcher who created the secondary data, and the new context to which the data record was being applied, which in turn potentially becomes a source of secondary data for subsequent research. It is important for researchers to consistently keep a personal diary or collection of memos to record immediate impressions, thoughts, and things that surprise and confuse (AGAR, 1986). These elements soon become familiar and "disappear" from the researcher's consciousness, and the record provides the context for the collection of the data that informs later analysis. [20]

When reusing someone else's data, it is rare to have access to this context. I had a copy of SHEPHERD's (2002) raw transcripts, complete with notes and highlighted text. However, the notes and highlights coalesced around the particular themes SHEPHERD was concerned with and did not add to my understanding of the context of his research. Of more use were conversations with SHEPHERD and the written discussion of his research, which provided me with information on some idiosyncrasies of the data. For example, SHEPHERD had requested participants with a range of forecast experience. He meant the variation to be across types of forecast work: public weather, severe weather, marine, aviation, etc. The request was ambiguous, however, and resulted in all his interview participants being senior forecasters who had done many of those types of forecasting. One of the consequences for my research was that some of these experienced forecasters retired at the end of the year in which SHEPHERD did his research, and could not be contacted for permission to reuse their interview transcripts. The more advanced age of the interview participants also meant they had all been trained before computers were used to support forecast work. SHEPHERD informed me that there is a difference in work practices between older forecasters and those trained in a highly technical environment: older forecasters rely on experience and judgement while younger forecasters trust the technology. This difference was something I had to take into account in my use of the interview transcripts (SHEPHERD, 2002). [21]

My research was conducted in two stages (familiarisation and video observation) and each stage involved extensive preparation to maximise opportunities for me to absorb and understand the work environment and activities, and thus create an ethnography of the research setting. Field observation was complemented by secondary data sources such as SHEPHERD's (2002) transcripts, BALLY's (2003b) forecast process analysis, the Bureau's Annual Report (BUREAU OF METEOROLOGY, 2003) and website (http://www.bom.gov.au/). [22]

The research methodology was to create, iteratively, four generations of data representations: 1) the "raw" video and audio recordings; 2) the video transcription and coding of verbal and other behaviour; 3) a description of the actions of participants related to their goals and expectations; and 4) a translation of those action descriptions into interpretations of the events. These interrelated representations then served as the basis for a theoretical account of the activity using DCog theory. The four representations, the theory, and the ethnography of the setting were woven together to generate insights into how forecasters do their work (HUTCHINS & KLAUSEN, 1996). [23]

Secondary data was used in analysing each data representation to inform, confirm and constrain the analysis and interpretation. Public domain information, such as that contained in the Bureau's website (http://www.bom.gov.au/) and its Annual Report (BUREAU OF METEOROLOGY, 2003), provided general cultural information (for example, the titles and responsibilities of different levels of forecast work), historical information and an organisation-level articulation of the Bureau's work. [24]

SHEPHERD's (2002) transcripts provided information for analysis at the work-practice level. They were very important for highlighting which observed events were fruitful for more detailed analysis. The interview transcripts also gave insight into the significance and meaning of the events in focus as well as constraining the interpretation. For example, the video showed that dealing with interruptions was a major part of the handover activity. During the video observation, the outgoing senior forecaster (SF) had to answer seven telephone calls and make a radio broadcast in the 33 minutes taken to hand over the forecast shift. At the end, he apologised to the oncoming SF for the disjointed nature of the briefing. His colleague reminded him that the unusual busyness was due to a staff member being absent that morning. [25]

SHEPHERD specifically asked his interview participants about the impact of interruptions on their thought processes and how forecasters dealt with them, and the interview participants discussed telephone interruptions at length. One participant disclosed that normally the forecast process is supported by people whose job is to answer the phones, "but in the event that they are not there, it is very, very noticeable on the workload". During the familiarisation stage of my research I had recorded in my field notes that no one is assigned to answer the phones during the morning handovers. Because I videoed the morning shift, beginning with the handover, I was aware that answering telephone queries was a normal part of the SF's handover workload, whereas the radio broadcast had been added because a staff member was absent. [26]

The combination of apparently incidental information recorded in my field notes and the consistent comments in the interview transcripts on interruptions being a fact of life for forecasters constrained my interpretation of comments in the video transcript that might otherwise have suggested that handovers are not normally disjointed by interruptions. Instead, the interview transcripts pointed to interruptions as events to highlight, and to examine for how forecasters dealt with them. [27]

Where SHEPHERD specifically questioned forecasters on issues that could be directly identified in the video transcript, it was relatively straightforward to integrate the comments of interview participants into the analysis, especially as the answers from the interview participants were usually congruent, with only minor variations at the level of detail. However, care had to be taken. In the interview transcripts, forecasters described their strategies for recovering interrupted thoughts during the extended analytic process of constructing a forecast policy. Some strategies articulated in the interview transcripts (scribbled notes on screen printouts) were not observed in the video of handing over a forecast shift. Reflecting on this difference between the data sets led to the understanding that the thought recovery strategies observed during handover were those of a forecaster resuming his presentation of that policy, which is essentially a presentation of his best coherent understanding of the current weather situation and relevant trends, justified with reasons. The cognitive load of handing over a completed forecast policy is lower than the load of constructing one, as the judgements have already been made and only have to be remembered. [28]

SHEPHERD's transcripts were also important for grounding the video data analysis that aimed to explicate implicit cultural aspects of forecast work embedded in explicitly observed work practices. A behaviour stream analysis of the video transcript (HUTCHINS & KLAUSEN, 1996) grouped actions into functional systems and sub-systems to identify actions and speech that relied on cultural models (inter-subjectively shared by participants and used as a basis for coordinating their activities). This provided a source of information for identifying their mental models or schemas (D'ANDRADE, 1995). As part of the analysis I related schemas from these data representations to schemas made explicit in other ethnographic sources (field notes, Bureau documents, the interview transcripts from [SHEPHERD, 2002] and BALLY's [2003b] diagram). [29]

Analysis of video data identified forecasters' work practice of justifying each element of the forecast policy (verbally in handover, and mentally during the actual forecast process) and made visible how a culture of defensive pessimism (NORAM, 2001) is embedded in forecast work practices. Although details of how this culture was embodied in work practices had not previously been made explicit, this culture was already articulated among forecasters at folk level. My field notes record the tongue-in-cheek (context!) comment by a forecaster, "forecasters view clients and the weather as an enemy to be vanquished ... [so they] ... hedge, obfuscate, and hint to cover themselves". On one field visit to the Hobart Regional Forecast Centre, I observed pride and triumph as one forecaster reported a "Trifecta! Yes!" of accurate forecasting.1) [30]

SHEPHERD's (2002) interviews explored the impact of high-profile weather events on the participants' forecast practice. There have been a few high-profile severe weather events that were not forecast, such as "Cyclone Tracy", "the Sydney hail storm" and "the Sydney to Hobart yacht race". These events had an acknowledged impact on all forecasters. One interview participant commented on a particular forecast failure:

"I think it makes us a lot more conservative, a lot more conscious of the impacts of the weather on the user which then makes us much more conservative in our approach to things. We might tend to add a little to the forecast, a little bit to the wave height, a little to the swell height. I think we tend to go a little bit over the top. I say conservative, or it may be that it is a little more extreme, to cover the extreme if you like." [31]

This risk-averse culture is rooted in the knowledge that users of forecasts can lose life or property if not properly warned. Interview participants reported that this risk aversion extends to taking the clients of the forecast into account:

"A lot of thought goes into trying to tell people in a way they'll understand the consequences of the event that's occurring and in good time—time enough to allow them to take some action that will mitigate the effects of the event." [32]

Thus SHEPHERD's (2002) transcripts were an important source of information on the reasoning underlying this culture, and evidence that some of the work practices identified by the analysis of the video data were validly interpreted as culturally constituted. [33]

For this research, I created four generations of data representations (raw data, coded transcriptions, action descriptions and interpretations). Each representation highlighted different sorts of information about the setting and each was carefully grounded in independent ethnography, drawn from the setting and secondary data sources. Combined, they formed the foundation of a theoretical account of the forecast process, particularly applied to the handover activity. [34]

I constructed a DCog theoretical account of the handover (which because of the linkages also accounted for many aspects of the weather forecast process) by weaving together the four representations, setting ethnography and the theory. I then used this account to generate insights into the domain of weather forecasting. Interview transcripts from a different research project (SHEPHERD, 2002) were profitably used to inform and constrain interpretations based on my primary data source (video observation), giving confidence in the validity of my conclusions. [35]

Ethics is about creating boundaries for the nature and extent of information permissible for a researcher to acquire and limiting the use made of that information. There are limitations created by ethics that are grounded in the researcher's own commitment to look after research participants (including ones you are not allowed to meet), and limitations imposed on the researcher by formal institutions such as ethics policies and ethics committees. The main requirement is participant consent and the criterion is whether that consent is free and informed. One interesting aspect of this criterion that was highlighted by my quest to be allowed to reuse someone else's data is that someone other than the participant is invested with the power to determine whether their consent has been appropriately obtained. [36]

With this in mind, the familiarisation stage of my research was very important methodologically but problematic ethically, because I was exposed to data (primary and secondary) at a stage of research with ambiguous ethical status. I prepared for it by gaining permission from relevant people to do the project, clearly explaining its purpose and the benefits to participants that I hoped would come out of my research. I also sent an explanatory email to the Director of the Hobart Regional Forecast Centre (RFC) outlining my proposed activities and their rationale. He passed this on to all forecasters at that office. I was guest speaker at the senior forecasters' regular meeting, where I personally presented my preliminary research plan and answered questions. Thus, by the time I was ready to familiarise myself with the research environment, my presence was in principle both expected and accepted. My first two visits involved wandering around or sitting and talking with whoever was available and had the time and willingness to show me what they were doing. [37]

This leads to the pragmatics and problems of ethical considerations in qualitative social research, the major one I faced being a version of "which comes first, the chicken or the egg?" In order to submit a substantive ethics proposal that accurately reflected the situation in which I was going to do research, I needed to visit and familiarise myself with the context. But once the researcher is part of that context, especially as a visitor who will be coming back to do more later, the participants start interacting with her (at least mine did). [38]

Each time I visited, a forecaster on duty invited me to sit down with him and spent some of his slack time (up to 20 minutes) showing me the different software applications he used, telling me which ones he liked and why, work practice variations among forecasters and various issues and problems they had with their work situation. Each time I was faced with a very awkward choice of accepting the offer to engage in an interaction or being impolite:

"No, I can't talk to you now, I have to protect you and give you an information sheet (which I can't write yet because I don't know enough about your work to write something sensible) and you haven't signed a consent form." [39]

I am very concerned to protect my participants, but as a female researcher dealing primarily with professional males often a decade older than me, I was conscious of implicit social relations that I thought were important to maintain. These forecasters did not want or ask my protection and to enforce it smacked of disrespect. In addition, politeness dictates grateful acceptance of a person's offer of help, and allowing the giver to determine the timing, nature and extent of that help. Unethical politeness vs. ethical rudeness is an issue that occurs in the context of minimal risk qualitative research activities that ethics policies have yet to address. [40]

I chose to accept their offer of teaching me about their job, and trust that the context already had informed consent embedded in it. Having been polite, I then knew things that I would not have otherwise. I recorded my impressions, in part so that I would be able to track the progress of my understanding of forecast work and not improperly use information acquired without formal consent. [41]

Yet I know these interactions became part of my understanding and had a positive impact on the research process and outcomes. In particular, these preliminary contacts gave me strong indicators of what mattered to the forecasters in their context, a sense of the complicated nature of their work, and an indication that DCog theory was a suitable lens for studying them. The chats and impromptu demonstrations provided me with essentially ephemeral data which became part of my tacit knowledge, which in turn made it easier for me to understand the codifiable, recorded data from the video observation and to prepare tools, such as a field notes sheet, to facilitate more effective video data recording. However, because most of the data I was exposed to in the familiarisation phase of the research was necessarily acquired prior to formal ethics approval, I had to be very careful about the contribution that phase made to my research conclusions. [42]

It was during this stage of the research that I realised how important and beneficial having permission to reuse SHEPHERD's interview transcripts would be. I was not intending to interview the forecasters. A major plank of my research plan was "not burdening the participants" and "not taking them out of the work context". Thus I crafted my ethics application to allow for contacting SHEPHERD's interview participants for permission to use the transcripts of their interviews. The consent form for my research gave participants a choice of different levels of anonymity, and all checked the option of being identifiable when the conclusions of my research project were presented. Unfortunately, because I felt I was already pushing the boundaries of what an ethics committee might accept, I intentionally left out a provision for the reuse of my own data. In hindsight that perception may have been unfounded. [43]

Given the obvious benefits of reusing all ten interview transcripts, I was faced with a barrier that (from my self-interested perspective) seemed to obstruct research rather than protect participants. I was only allowed to use four of SHEPHERD's transcripts because six of the experienced forecasters he interviewed had retired or were otherwise not contactable. I would argue that the context in which they gave the interviews made a "not for reuse" restriction unnecessary, in the sense that it contravened the clear willingness of participants to spend time discussing their work practices in order to help the organisation where they had worked for many decades. [44]

Also, this was a situation where no possible harm could come to anonymous interview participants from their data being analysed for a similar purpose using a different methodology. I would argue further that because ethnographic data is enriched by comparison with other data and by the application of other analytic lenses, refusing to reuse it in that sort of context actually wastes the participants' time. The forecasters were already overburdened by existing work requirements. In addition, the Bureau developed its own meteorological information systems with a user-centred approach that required heavy forecaster involvement. The Bureau was very concerned by the additional load this placed on forecasters as they tried to articulate the forecast process and test new software applications for usability. For me to ask forecasters to spend an hour each answering questions similar to those already answered the previous year was out of the question, because it was so clearly unnecessary. [45]

Additional (potentially vexatious to the researcher) difficulties included the requirement to keep the participants anonymous. This meant that SHEPHERD had to contact all the participants (an imposition on his time), send them the consent forms and information sheets, and then notify me when the forms were returned. He then had to keep the consent forms secure (I was not allowed to know the participants' names), which added the complication of a research project whose proof of consent was kept in two separate locations. Altogether the process was complicated, with potential for breaching ethical guidelines on anonymity at several points. My motivation to gain access to the transcripts was what drove me to work through all the steps and to request my own participants' permission to be identifiable. [46]

It appears to be a common experience that researchers must expend significant effort and time with no guarantee that they will be given ethics approval (cf. ROTH, 2004). The evolving nature of ethics policy and its application was reflected in an apparent unevenness of treatment among my fellow researchers, to the extent that we were unable to fathom why one project was accepted and another criticised. This situation is particularly trying when doing research under short time frames with minimal risk to participants, especially if your participants find being protected an imposition, so that it is embarrassing to force them through the protocol. [47]

Codification of ethics does not create or ensure ethical research. If the protocol is irrelevant to the context, researchers may at certain points disengage from the spirit of the ethics process and use successful ethics proposals as templates, regardless of the unique aspects of their own projects. Assurance of anonymity is standard ethical practice in research, and initially I put that into my proposal. However, I later submitted a variation to allow my participants the choice of being identifiable. This permission was very useful in presenting my research once it was completed. For example, I could use a video clip to demonstrate the complex socio-technical nature of the forecast work environment, which even the participants appreciated seeing. (Even though I had written consent, I emailed the participants to tell them I wanted to show the video clip as part of a presentation to the Hobart RFC forecasters, and assured them it was not necessary if the thought made them uncomfortable.) [48]

It could be argued that current ethics guidelines come from a paradigm of control suited to experimental, quantitative research approaches. Thus ethics policies frequently hinder legitimate qualitative social research in unnecessary ways and create a context where the research participant is viewed as a passive object rather than a co-participant in the research project. The laudable aim of "protection" can also be construed as taking power from the researcher and giving it not to the participant but to the ethics committee. [49]

For minimal-risk research, where participants in qualitative social research are not vulnerable or passive objects of observation needing protection, a better paradigm should be developed, one that gives research participants control over, and flexibility in, how they participate as the research progresses. This is particularly important because it is not possible to predict or control the trajectory of qualitative social research in the way a quantitative experiment in a laboratory can be controlled. For example, what do you do when someone walks across the field of your video observation, chats with your participant and then leaves before you have time to stop your note taking and climb down off your step ladder, let alone give them the information sheet to read and ask them to sign the consent form? [50]

However, it could also be argued that taking careful thought and planning to make an ethics application that is flexible enough to accommodate most contingencies is worthwhile. It is important to protect our participants and a good ethics committee will work with researchers to ensure that protection, without creating unnecessary barriers to conducting our work. [51]

My research at the Bureau validated DCog theory in a new domain and demonstrated that several insights reported in the literature on collaborative computer supported work situations also apply to weather forecasting. The use of secondary data sources, including data collected and analysed by other researchers, enriched the data analysis and gave a substantial basis for confidence in the validity of the analysis. The fact that DCog theory was a very useful lens for understanding secondary data sources collected using a different theoretical perspective gave substance to claims for the theory's validity. Using secondary data sources also avoided unnecessarily burdening the participants with time-consuming interviews. [52]

The forecasters who participated in my research were willing to be identifiable. Having their permission made presenting the results of video-based research much easier. However, I still took care to give the participants a choice at the point where there was potential for discomfort (video data viewed by their peers in their presence). Forecasters who participated in SHEPHERD's research were not given that choice, which complicated the process of gaining access to his data. SHEPHERD's participant forecasters consented to spend over an hour discussing their work, understanding that their knowledge was being sought for the benefit of future forecast work practice. In that case the ethics process failed to accommodate the possibility of implicit consent, making it impossible to use the results of that effort more than once without explicit permission for a specific research project. [53]

My research aimed to provide useful insights into forecast work that would inform design of better meteorological information systems to support forecasters in their work. After my presentation to my participants and their colleagues, the forecasters commented that they had never thought of their work "that way" before, but they could see the insights were true and worth articulating. BALLY (2003a) and LINGER & AARONS (2004) have subsequently referred to and applied some of those insights to the ongoing project of designing meteorological information systems for the Australian Bureau of Meteorology. This is an example of a research context in which the reuse of secondary data sources (including the data generated by my research project) is of direct benefit to the participants as well as the researcher. [54]

There are two implications for ethical research that I can envisage. The first is that the paradigm for ethics approval needs to change. The current paradigm seems to rest on the assumption that researchers have control over the research domain and therefore the ability to guarantee prior informed consent from every participant. This ignores the reality that in a social research context, it is common for unexpected people to participate in unpredictable ways. In addition, it is not possible to interrupt unexpected interactions being observed without destroying them. [55]

Thus, the second implication is that control of the nature and degree of consent, currently invested in the ethics committee, should be given to the participants. This requires researchers to think ahead very carefully. It is not possible to predict all the possible uses for data that is collected. Even if it were, the consent form could become an unmanageable checklist. However, consent forms can embed choice for participants so that they can specify the level of protection they require. There are some research contexts where anonymity is paramount. At the Bureau, all my participants checked the option of being willing to be identifiable. This research demonstrates the benefits of designing an ethics application to provide for data reuse and giving participants choice over the level of protection they require. It also demonstrates the need for ongoing discussion on the role and authority of formal structures, such as policies mediated by ethics committees, in authorising qualitative research projects. [56]

1) "Trifecta" is a betting term. It means: "A system of betting in which the bettor must pick the first three winners in the correct sequence" (dictionary.com). In the case of the forecasters, it meant they had picked the weather correctly three times in a row.

Agar, Michael (1986). Speaking of Ethnography (Vol. 2). Beverly Hills: Sage.

Bally, John (2003a). Applications Required to Support the Forecast Task. Melbourne: Bureau of Meteorology.

Bally, John (2003b). Forecast Information Flows Analysis: Bureau of Meteorology.

Bureau of Meteorology (2003). Annual Report 2002-2003: Bureau of Meteorology, Department of the Environment and Heritage.

D'Andrade, Roy (1995). The Development of Cognitive Anthropology. Cambridge: Cambridge University Press.

Hazlehurst, Brian (1994). Fishing For Cognition: An Ethnography of Fishing Practice in a Community on the West Coast of Sweden. Retrieved January 28, 2003, from http://dcog.net/~brian/dissertation/dissertation.html.

Holder, Barbara (1999). Cognition in Flight: Understanding Cockpits as Cognitive Systems. Unpublished Doctoral Dissertation, University of California, San Diego.

Hollan, James; Hutchins, Edwin & Kirsh, David (2000). Distributed Cognition: Toward A New Foundation For Human-Computer Interaction Research. ACM Transactions on Computer-Human Interaction, 7(2), 174-196.

Hutchins, Edwin (1995). Cognition in the Wild. Cambridge, Mass: The MIT Press.

Hutchins, Edwin & Klausen, Tove (1996). Distributed Cognition in an Airline Cockpit. In Yrjö Engeström & David Middleton (Eds.), Cognition and Communication at Work (pp.15-34). Cambridge: Cambridge University Press.

Linger, Henry & Aarons, Jeremy (2004). Filling the Knowledge Management Sandwich: An Exploratory Study of a Complex Work Environment. Paper presented at the 13th International Conference on Information Systems Development: Advances in Theory, Practice and Education, Vilnius, Lithuania.

Linger, Henry & Burstein, Frada (2001). From Doing to Thinking in Meteorological Forecasting: Changing Work Practice Paradigms with Knowledge Management. Paper presented at the Twenty-Second International Conference on Information Systems, New Orleans, Louisiana.

Norem, Julie (2001). The Positive Power of Negative Thinking. New York: Basic Books.

Polanyi, Michael (1962). Personal Knowledge: Towards a Post-critical Philosophy. Chicago: University of Chicago Press.

Roth, Wolff-Michael (2004, September). Unpolitical Ethics, Unethical Politics [49 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research [On-line Journal], 5(3), Art. 35. Retrieved September 10, 2004, from http://www.qualitative-research.net/fqs-texte/3-04/04-3-35-e.htm.

Shepherd, Kevin (2002). A Knowledge Based Approach to Meteorological Information Systems. Unpublished Honours Dissertation, University of Tasmania, Hobart.

Stern, Harvey (2003). Progress On Streamlining the Forecast Process Via a Knowledge-Based System. Retrieved April 29, 2003, from http://www.bom.gov.au/bmrc/basic/events/seminars_2003.htm#23APR03.

Jo-Anne KELDER is a PhD candidate with the School of Information Systems at the University of Tasmania and the Smart Internet Technology Collaborative Research Centre. She has joined the SITCRC as part of the User Centred Design project, particularly its ongoing methodology developments. She is interested in complex work environments that are highly dependent on information systems and where many social, cultural and technical issues need to be identified and understood if new technologies and work processes are to fit the natural work environment.

She plans to investigate innovative research methodologies that capture user experiences and insights, and then to deploy those methods in a manner conducive to user-centred or participative design. This focus can enable the translation of user insights and experiences into design requirements for intelligent Internet technology solutions for current and future complex systems.

Contact:

Jo-Anne Kelder

School of Information Systems

University of Tasmania

GPO Box 252-87

Hobart Tasmania 7001

Australia

Phone: +613 6226 6200

E-mail: jo.kelder@utas.edu.au

URL: http://www.ucd.smartinternet.com.au/Kelder.html

Kelder, Jo-Anne (2005). Using Someone Else's Data: Problems, Pragmatics and Provisions [56 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 6(1), Art. 39, http://nbn-resolving.de/urn:nbn:de:0114-fqs0501396.

Revised 6/2008